Stefan Fichna

Evaluation of Virtual Acoustic Environments with Different Acoustic Level of Detail

Jun 29, 2023Abstract:Virtual acoustic environments enable the creation and simulation of realistic and ecologically valid daily-life situations with applications in hearing research and audiology. Hereby, reverberant indoor environments play an important role. For real-time applications, simplifications in the room acoustics simulation are required, however, it remains unclear what acoustic level of detail (ALOD) is necessary to capture all perceptually relevant effects. This study investigates the effect of varying ALOD in the simulation of three different real environments, a living room with a coupled kitchen, a pub, and an underground station. ALOD was varied by generating different numbers of image sources for early reflections, or by excluding geometrical room details specific for each environment. The simulations were perceptually evaluated using headphones in comparison to binaural room impulse responses measured with a dummy head in the corresponding real environments. The study assessed the perceived overall difference for a pink pulse, and a speech token. Furthermore, plausibility and externalization were evaluated. The results show that a strong reduction in ALOD is possible while obtaining similar plausibility and externalization as with dummy head recordings. The number and accuracy of early reflections appear less relevant, provided diffuse late reverberation is appropriately accounted for.

Effect of acoustic scene complexity and visual scene representation on auditory perception in virtual audio-visual environments

Jun 30, 2021

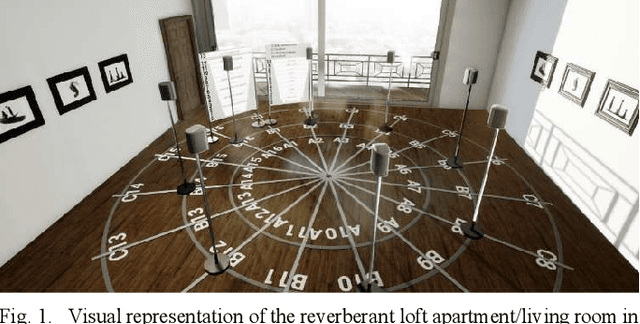

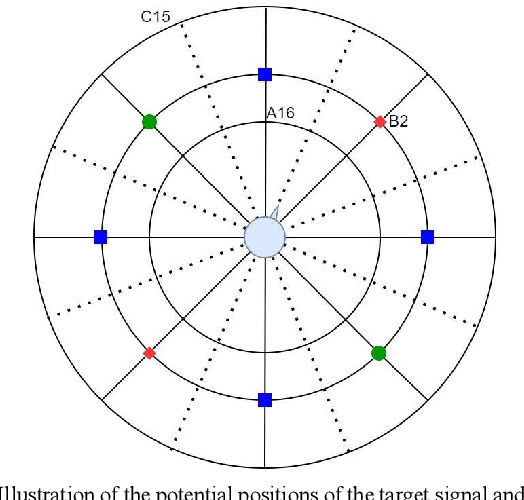

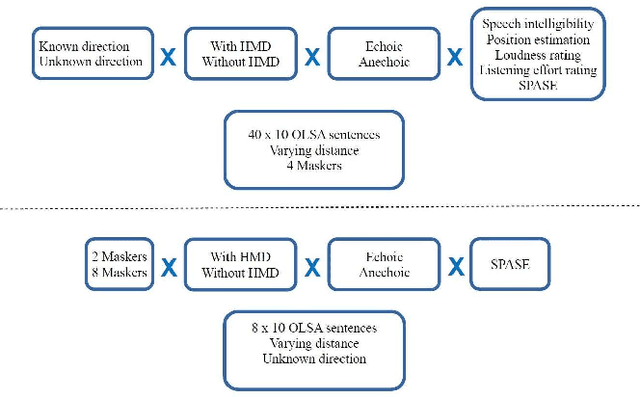

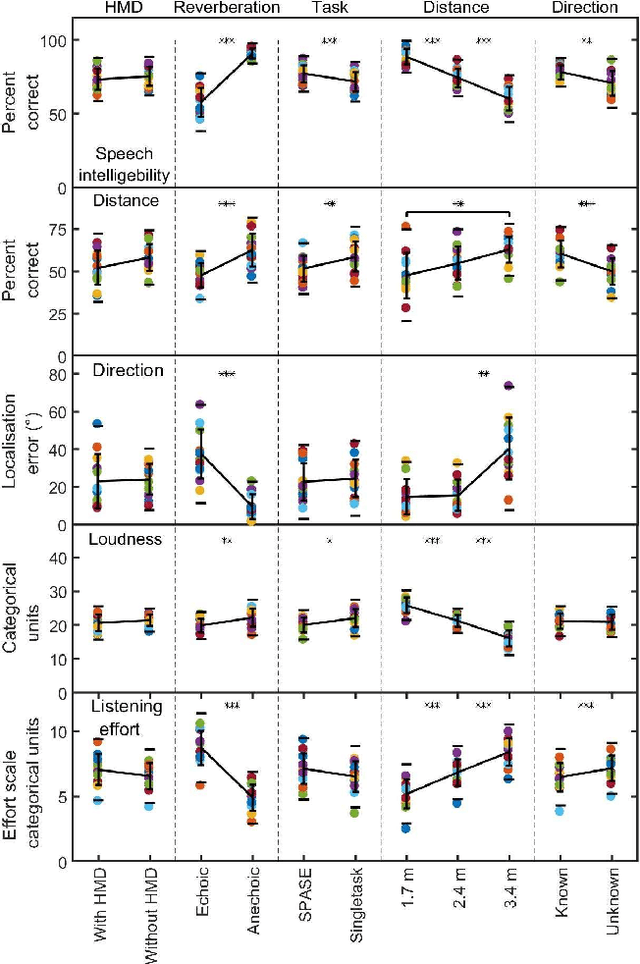

Abstract:In daily life, social interaction and acoustic communication often take place in complex acoustic environments (CAE) with a variety of interfering sounds and reverberation. For hearing research and evaluation of hearing systems simulated CAEs using virtual reality techniques have gained interest in the context of ecologically validity. In the current study, the effect of scene complexity and visual representation of the scene on psychoacoustic measures like sound source location, distance perception, loudness, speech intelligibility, and listening effort in a virtual audio-visual environment was investigated. A 3-dimensional, 86-channel loudspeaker array was used to render the sound field in combination with or without a head-mounted display (HMD) to create an immersive stereoscopic visual representation of the scene. The scene consisted of a ring of eight (virtual) loudspeakers which played a target speech stimulus and non-sense speech interferers in several spatial conditions. Either an anechoic (snowy outdoor scenery) or echoic environment (loft apartment) with a reverberation time (T60) of about 1.5 s was simulated. In addition to varying the number of interferers, scene complexity was varied by assessing the psychoacoustic measures in isolated consecutive measurements or simultaneously. Results showed no significant effect of wearing the HMD on the data. Loudness and distance perception showed significantly different results when they were measured simultaneously instead of consecutively in isolation. The advantage of the suggested setup is that it can be directly transferred to a corresponding real room, enabling a 1:1 comparison and verification of the perception experiments in the real and virtual environment.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge