Kristin I. Bracklo

Prediction of tone detection thresholds in interaurally delayed noise based on interaural phase difference fluctuations

Jul 01, 2021

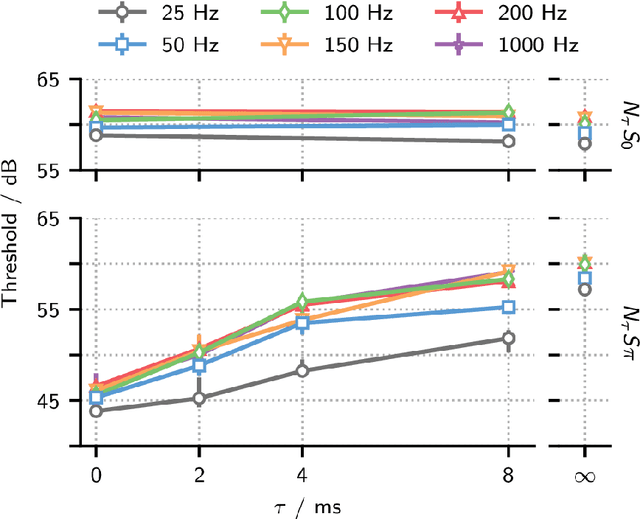

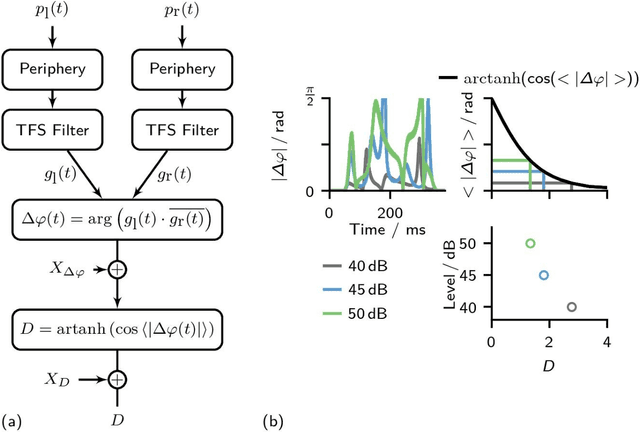

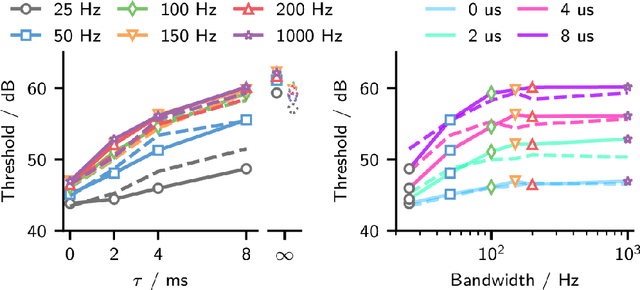

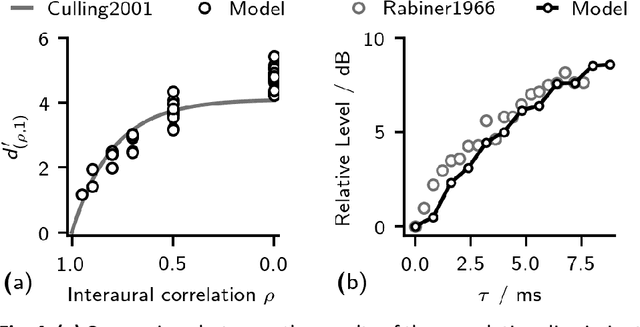

Abstract: Differences between the interaural phase of a noise and a target tone improve detection thresholds. The maximum masking release is obtained for detecting an antiphasic tone (S$\pi$) in diotic noise (N0). It has been shown in several studies that this benefit gradually declines as an interaural delay is applied to the N0S$\pi$ complex. This decline has been attributed to the reduced interaural coherence of the noise. Here, we report detection thresholds for a 500 Hz tone in masking noise with up to 8 ms interaural delay and bandwidths from 25 to 1000 Hz. When reducing the noise bandwidth from 100 to 50 and 25 Hz, the masking release at 8 ms delay increases, as expected for increasing temporal coherence with decreasing bandwidth. For bandwidths of 100 to 1000 Hz, no significant difference was observed and detection thresholds with these noises have a delay dependence that is fully described by the temporal coherence imposed by the typical monaurally determined auditory filter bandwidth. A minimalistic binaural model is suggested based on interaural phase difference fluctuations without the assumption of delay lines.
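
The link between bandwidth and temporal coherence mentioned in the abstract can be illustrated with a minimal sketch. It is not the model proposed in the paper: it assumes an idealized noise with a flat, rectangular spectrum, for which the envelope of the normalized cross-correlation between the noise and a copy delayed by $\tau$ is $|\sin(\pi B \tau)/(\pi B \tau)|$ for bandwidth $B$. The function name `noise_coherence` and the constant `DELAY_S` are hypothetical, chosen only for this illustration.

```python
import math


def noise_coherence(bandwidth_hz: float, delay_s: float) -> float:
    """Coherence of ideal rectangular band-limited noise with a delayed copy.

    Assumption: flat spectrum of width bandwidth_hz, so the envelope of the
    normalized cross-correlation at lag delay_s is |sinc(B * tau)|.
    """
    x = math.pi * bandwidth_hz * delay_s
    if x == 0.0:
        return 1.0  # zero delay or zero bandwidth: fully coherent
    return abs(math.sin(x) / x)


DELAY_S = 0.008  # the 8 ms interaural delay quoted in the abstract

# The three narrow bandwidths compared in the abstract.
for bandwidth in (25, 50, 100):
    value = noise_coherence(bandwidth, DELAY_S)
    print(f"{bandwidth:4d} Hz noise: coherence at 8 ms = {value:.3f}")
```

Under this assumption the coherence at 8 ms rises as the bandwidth narrows from 100 to 50 to 25 Hz, which is the direction of the effect the abstract describes. For noise wider than the auditory filter, the abstract states that the filter bandwidth, not the noise bandwidth, sets the coherence; this sketch does not model that stage.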
