Stanislav Dereka

Unveiling Empirical Pathologies of Laplace Approximation for Uncertainty Estimation

Dec 16, 2023

Abstract: In this paper, we critically evaluate Bayesian methods for uncertainty estimation in deep learning, focusing on the widely applied Laplace approximation and its variants. Our findings reveal that the conventional method of fitting the Hessian matrix negatively impacts out-of-distribution (OOD) detection efficiency. We propose a different point of view, asserting that focusing solely on optimizing prior precision can yield more accurate uncertainty estimates in OOD detection while preserving adequate calibration metrics. Moreover, we demonstrate that this property is not connected to the training stage of a model but rather to its intrinsic properties. Through extensive experimental evaluation, we establish the superiority of our simplified approach over traditional methods in the out-of-distribution domain.

Diversifying Deep Ensembles: A Saliency Map Approach for Enhanced OOD Detection, Calibration, and Accuracy

May 19, 2023

Abstract: Deep ensembles have achieved state-of-the-art results in classification and out-of-distribution (OOD) detection; however, their effectiveness remains limited due to the homogeneity of learned patterns within the ensemble. To overcome this challenge, our study introduces a novel approach that promotes diversity among ensemble members by leveraging saliency maps. By incorporating saliency map diversification, our method outperforms conventional ensemble techniques in multiple classification and OOD detection tasks, while also improving calibration. Experiments on well-established OpenOOD benchmarks highlight the potential of our method in practical applications.

Deep Image Retrieval is not Robust to Label Noise

May 23, 2022

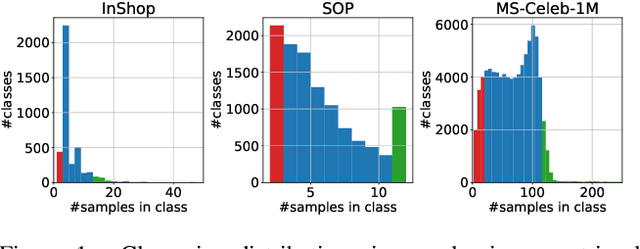

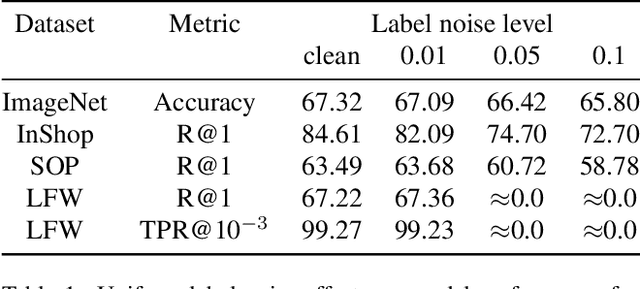

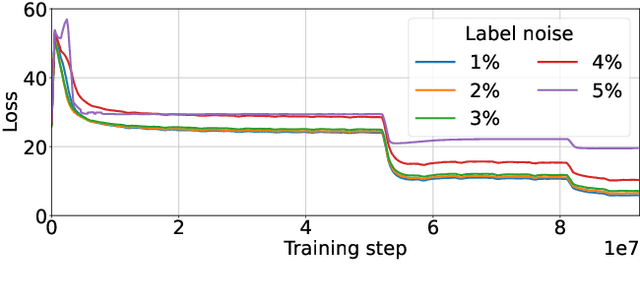

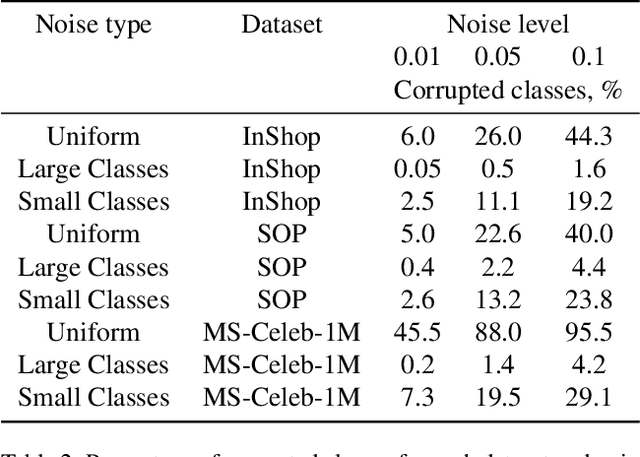

Abstract: Large-scale datasets are essential for the success of deep learning in image retrieval. However, manual assessment errors and semi-supervised annotation techniques can lead to label noise even in popular datasets. As previous works primarily studied annotation quality in image classification tasks, it is still unclear how label noise affects deep learning approaches to image retrieval. In this work, we show that image retrieval methods are less robust to label noise than image classification ones. Furthermore, we investigate, for the first time, different types of label noise specific to image retrieval tasks and study their effect on model performance.

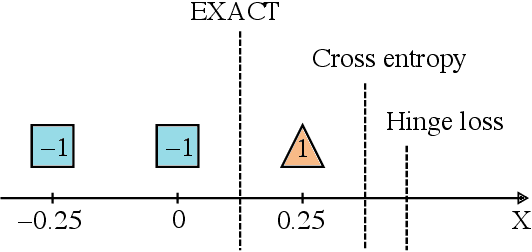

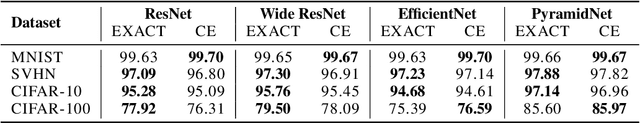

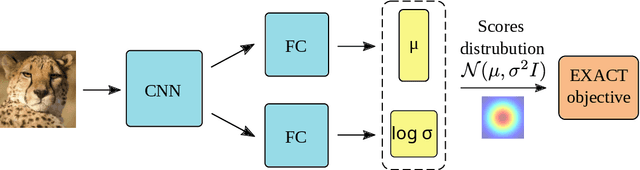

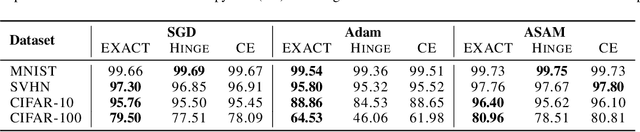

EXACT: How to Train Your Accuracy

May 19, 2022

Abstract: Classification tasks are usually evaluated in terms of accuracy. However, accuracy is discontinuous and cannot be directly optimized using gradient ascent. Popular methods minimize cross-entropy, hinge loss, or other surrogate losses, which can lead to suboptimal results. In this paper, we propose a new optimization framework by introducing stochasticity to a model's output and optimizing expected accuracy, i.e., the accuracy of the stochastic model. Extensive experiments on image classification show that the proposed optimization method is a powerful alternative to widely used classification losses.

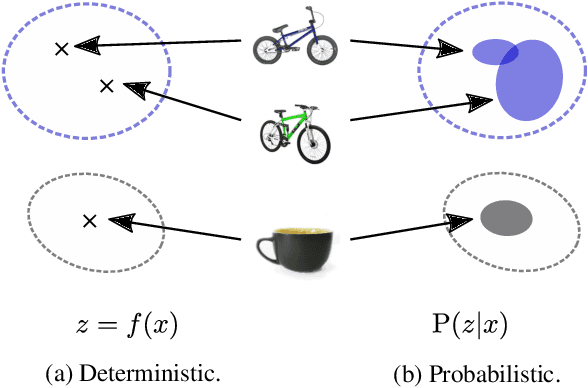

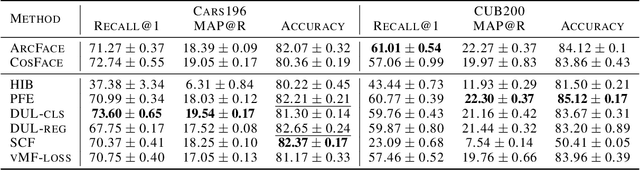

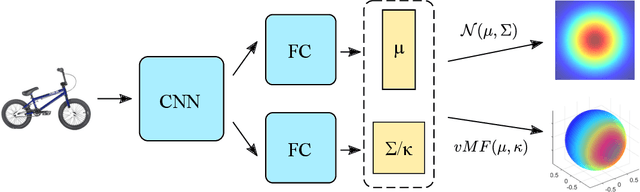

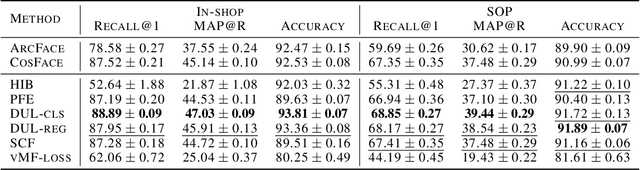

Probabilistic Embeddings Revisited

Feb 14, 2022

Abstract: In recent years, deep metric learning and its probabilistic extensions have achieved state-of-the-art results in face verification. However, despite these improvements, probabilistic methods have received little attention in the community. It is still unclear whether they can improve image retrieval quality. In this paper, we present an extensive comparison of probabilistic methods in verification and retrieval tasks. Following the suggested methodology, we outperform metric learning baselines using probabilistic methods and propose several directions for future work and improvements.
