Spiros Mancoridis

Towards Type Agnostic Cyber Defense Agents

Dec 02, 2024

Abstract: With computing now ubiquitous across government, industry, and education, cybersecurity has become a critical component for every organization on the planet. Due to this ubiquity of computing, cyber threats have continued to grow year over year, leading to labor shortages and a skills gap in cybersecurity. As a result, many cybersecurity product vendors and security organizations have looked to artificial intelligence to shore up their defenses. This work considers how to characterize attackers and defenders in one approach to the automation of cyber defense: the application of reinforcement learning. Specifically, we characterize the types of attackers and defenders in the sense of Bayesian games and, using reinforcement learning, derive empirical findings about how to best train agents that defend against multiple types of attackers.

The Price of Pessimism for Automated Defense

Sep 28, 2024

Abstract: The well-worn George Box aphorism "all models are wrong, but some are useful" is particularly salient in the cybersecurity domain, where the assumptions built into a model can have substantial financial or even national security impacts. Computer scientists are often asked to optimize for worst-case outcomes, and since security is largely focused on risk mitigation, preparing for the worst-case scenario appears rational. In this work, we demonstrate that preparing for the worst case rather than the most probable case may yield suboptimal outcomes for learning agents. Through the lens of stochastic Bayesian games, we first explore different attacker knowledge modeling assumptions that impact the usefulness of models to cybersecurity practitioners. By considering different models of attacker knowledge about the state of the game and a defender's hidden information, we find that there is a cost to the defender for optimizing against the worst case.

Simulation of Attacker Defender Interaction in a Noisy Security Game

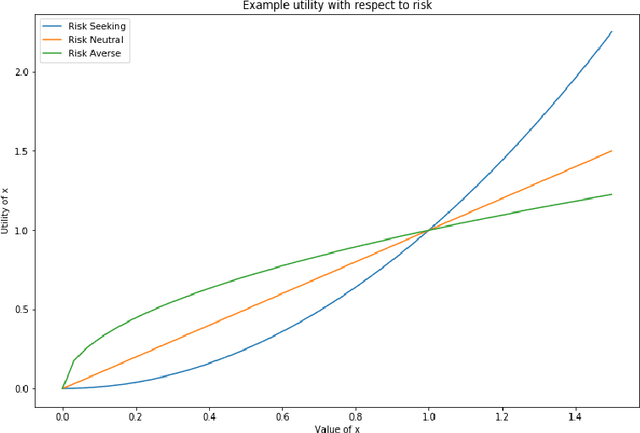

Dec 08, 2022

Abstract: In the cybersecurity setting, defenders are often at the mercy of their detection technologies and subject to the information and experiences that individual analysts have. To give defenders an advantage, it is important to understand an attacker's motivation and their likely next best action. As a first step in modeling this behavior, we introduce a security game framework that simulates interplay between attackers and defenders in a noisy environment. The framework focuses on the factors that drive decision making for attackers and defenders in three variants of the game: full knowledge and observability, knowledge of the parameters but no observability of the state ("partial knowledge"), and zero knowledge or observability ("zero knowledge"). We demonstrate the importance of making the right assumptions about attackers, given significant differences in outcomes. Furthermore, there is a measurable trade-off between false positives and true positives in terms of attacker outcomes, suggesting that a more false-positive-prone environment may be acceptable under conditions where true positives are also higher.

Evaluation of an Anomaly Detector for Routers using Parameterizable Malware in an IoT Ecosystem

Oct 29, 2021

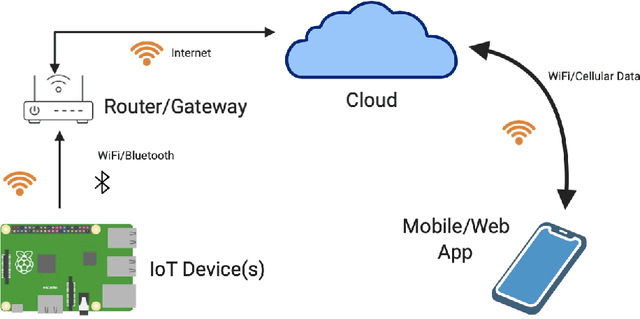

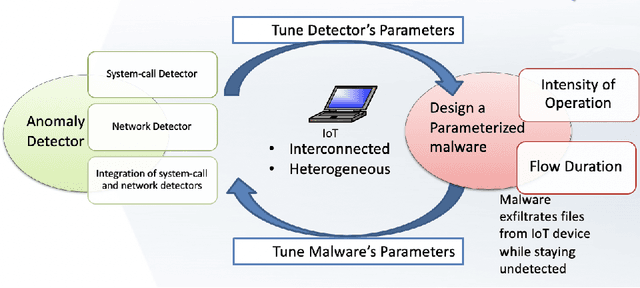

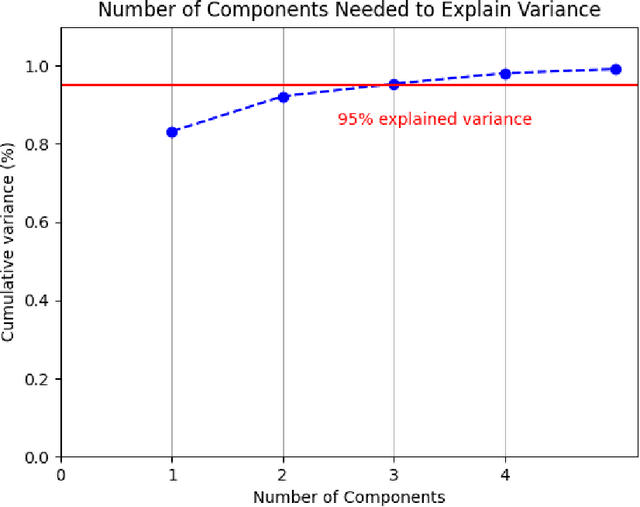

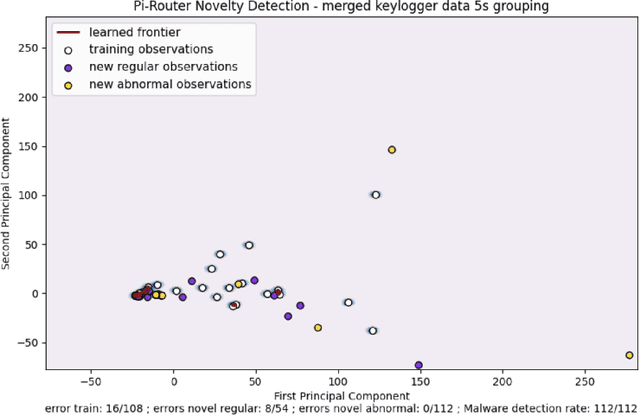

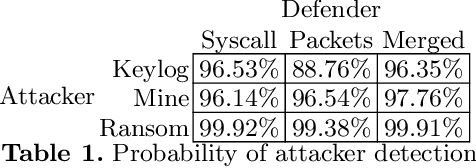

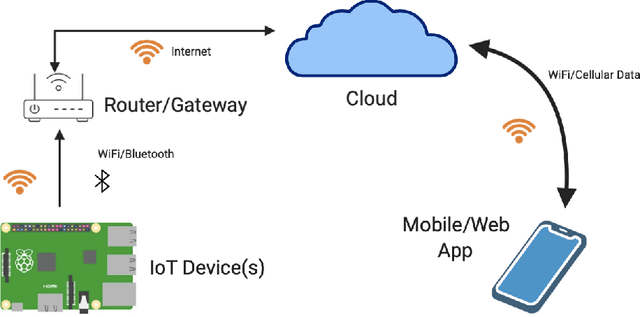

Abstract: This work explores the evaluation of a machine learning anomaly detector using custom-made parameterizable malware in an Internet of Things (IoT) Ecosystem. It is assumed that the malware has infected, and resides on, the Linux router that serves other devices on the network, as depicted in Figure 1. This IoT Ecosystem was developed as a testbed to evaluate the efficacy of a behavior-based anomaly detector. The malware comes in three custom-made types: ransomware, a cryptominer, and a keylogger, all of which can exfiltrate data over the network. The parameterization gives the malware samples multiple degrees of freedom, specifically relating to the rate and size of data exfiltration. The anomaly detector uses feature sets crafted from system calls and network traffic, and uses a Support Vector Machine (SVM) for behavior-based anomaly detection. The custom-made malware is used to evaluate the situations where the SVM is effective, as well as the situations where it is not.

Evaluating Attacker Risk Behavior in an Internet of Things Ecosystem

Sep 23, 2021

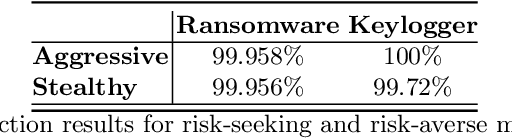

Abstract: In cybersecurity, attackers range from brash, unsophisticated script kiddies and cybercriminals to stealthy, patient advanced persistent threats. When modeling these attackers, we can observe that they demonstrate different risk-seeking and risk-averse behaviors. This work explores how an attacker's risk-seeking or risk-averse behavior affects their operations against detection-optimizing defenders in an Internet of Things ecosystem. Using an evaluation framework built on real, parameterizable malware, we develop a game that is played by a defender against attackers with a suite of malware that is parameterized to be more aggressive and more stealthy. These results are evaluated under a framework of exponential utility according to the attackers' willingness to accept risk. We find that against a defender who must choose a single strategy up front, risk-seeking attackers gain more actual utility than risk-averse attackers, particularly in cases where the defender is better equipped than the two attackers anticipate. Additionally, we empirically confirm that high-risk, high-reward scenarios are more beneficial to risk-seeking attackers like cybercriminals, while low-risk, low-reward scenarios are more beneficial to risk-averse attackers like advanced persistent threats.
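As an illustration of the exponential utility idea mentioned in this abstract, the sketch below uses the standard textbook form u(x) = (1 - exp(-a x)) / a, where a > 0 models a risk-averse agent and a < 0 a risk-seeking one. The abstract does not state the paper's exact parameterization, so the function names, the risk coefficients, and the two lotteries are all hypothetical and chosen only to show how the same gamble is valued differently by the two attacker types.

```python
import math

def exponential_utility(payoff, a):
    """Textbook exponential utility; a > 0 is risk-averse, a < 0 risk-seeking."""
    if a == 0:
        return payoff  # limiting case: risk-neutral
    return (1.0 - math.exp(-a * payoff)) / a

def certainty_equivalent(lottery, a):
    """Sure payoff the agent values equally to the lottery.

    lottery: list of (probability, payoff) pairs whose probabilities sum to 1.
    """
    if a == 0:
        return sum(p * x for p, x in lottery)
    expected = sum(p * math.exp(-a * x) for p, x in lottery)
    return -math.log(expected) / a

# Hypothetical scenarios with the same expected payoff (2.0).
high_risk = [(0.2, 10.0), (0.8, 0.0)]  # aggressive: rare big win
low_risk = [(1.0, 2.0)]                # stealthy: small sure gain

risk_seeking = -0.5  # assumed coefficient, e.g. a cybercriminal
risk_averse = 0.5    # assumed coefficient, e.g. an APT

ce_seeking = certainty_equivalent(high_risk, risk_seeking)
ce_averse = certainty_equivalent(high_risk, risk_averse)
print(f"risk-seeking values the gamble at {ce_seeking:.2f}")
print(f"risk-averse values the gamble at  {ce_averse:.2f}")
```

Under these assumed numbers, the risk-seeking agent values the high-risk gamble above its expected payoff and the risk-averse agent values it below, which matches the qualitative pattern the abstract reports.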

Behavioral Malware Classification using Convolutional Recurrent Neural Networks

Nov 19, 2018

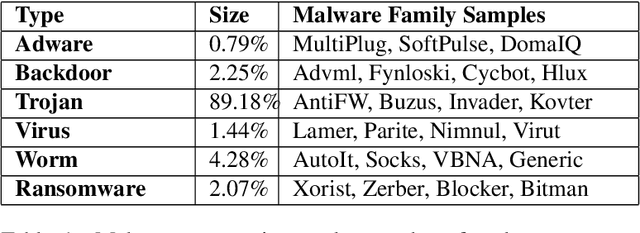

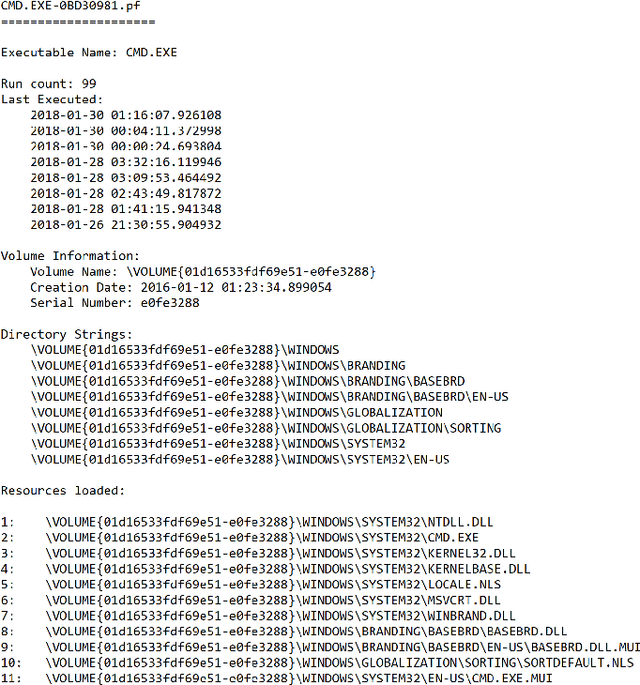

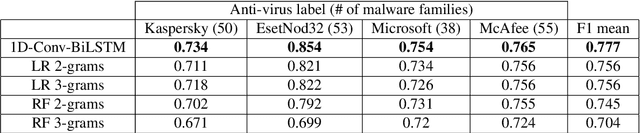

Abstract: Behavioral malware detection aims to improve on the performance of static signature-based techniques used by anti-virus systems, which are less effective against modern polymorphic and metamorphic malware. Behavioral malware classification aims to go beyond the detection of malware by also identifying a malware's family according to a naming scheme such as the ones used by anti-virus vendors. Behavioral malware classification techniques use run-time features, such as file system or network activities, to capture the behavioral characteristics of running processes. The increasing volume of malware samples, diversity of malware families, and the variety of naming schemes given to malware samples by anti-virus vendors present challenges to behavioral malware classifiers. We describe a behavioral classifier that uses a Convolutional Recurrent Neural Network and data from Microsoft Windows Prefetch files. We demonstrate the model's improvement over the state of the art using a large dataset of malware families and four major anti-virus vendor naming schemes. The model is effective in classifying malware samples that belong to common and rare malware families and can incrementally accommodate the introduction of new malware samples and families.
