Sourav Sengupta

Performance Modeling and Workload Analysis of Distributed Large Language Model Training and Inference

Jul 19, 2024

Abstract: Aligning future system design with the ever-increasing compute needs of large language models (LLMs) is undoubtedly an important problem in today's world. Here, we propose a general performance modeling methodology and workload analysis of distributed LLM training and inference through an analytical framework that accurately considers compute, memory sub-system, network, and various parallelization strategies (model parallel, data parallel, pipeline parallel, and sequence parallel). We validate our performance predictions with published data from literature and relevant industry vendors (e.g., NVIDIA). For distributed training, we investigate the memory footprint of LLMs for different activation re-computation methods, dissect the key factors behind the massive performance gain from A100 to B200 ($\sim$35x speed-up, closely following NVIDIA's scaling trend), and further run a design space exploration at different technology nodes (12 nm to 1 nm) to study the impact of logic, memory, and network scaling on performance. For inference, we analyze the compute versus memory boundedness of different operations at a matrix-multiply level for different GPU systems and further explore the impact of DRAM memory technology scaling on inference latency. Utilizing our modeling framework, we reveal the evolution of performance bottlenecks for both LLM training and inference with technology scaling, thereby providing insights for designing future systems for LLM training and inference.
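
The compute-versus-memory-boundedness question mentioned above can be illustrated with a simple roofline check on a single matrix multiply. The sketch below is not the paper's framework, which also models network and parallelization effects. It assumes each operand moves through DRAM exactly once and 2-byte (FP16/BF16) elements. The function names and the device numbers in the usage note are hypothetical placeholders chosen for illustration.

```python
def gemm_arithmetic_intensity(m, n, k, bytes_per_elem=2):
    """FLOPs per byte for C = A @ B with A (m x k) and B (k x n).

    Assumes (simplification) that A, B, and C each cross DRAM exactly once.
    """
    flops = 2 * m * n * k  # one multiply and one add per inner-product term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


def roofline_bound(m, n, k, peak_flops, mem_bw, bytes_per_elem=2):
    """Classify a GEMM as compute- or memory-bound under a basic roofline model.

    peak_flops is in FLOP/s and mem_bw in bytes/s (hypothetical device parameters).
    Returns the label and the attainable throughput in FLOP/s.
    """
    intensity = gemm_arithmetic_intensity(m, n, k, bytes_per_elem)
    ridge = peak_flops / mem_bw  # intensity at which the two limits meet
    attainable = min(peak_flops, intensity * mem_bw)
    label = "compute-bound" if intensity >= ridge else "memory-bound"
    return label, attainable


if __name__ == "__main__":
    # Illustrative placeholder device: 312e12 FLOP/s peak, 2.0e12 B/s DRAM bandwidth.
    peak, bw = 312e12, 2.0e12
    # Decode-like step: one token row against a 4096 x 4096 weight matrix.
    print(roofline_bound(1, 4096, 4096, peak, bw))
    # Prefill-like step: 2048 token rows against the same weights.
    print(roofline_bound(2048, 4096, 4096, peak, bw))
```

With these placeholder numbers, the single-row (decode-like) product has an intensity of about 1 FLOP/byte and is memory-bound. The 2048-row (prefill-like) product reaches 1024 FLOP/byte, well above the ridge point of 156, and is compute-bound. This contrast is one way to see why DRAM technology scaling matters for inference latency.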

Neural Network Influence in Group Technology: A Chronological Survey and Critical Analysis

Dec 21, 2012

Abstract: This article presents a chronological review of the influence of Artificial Neural Networks (ANNs) on group technology applications in Cellular Manufacturing Systems. The research trend is identified, and its evolution is captured through a critical analysis of the literature available from the beginning of this practice in the early 1990s until 2010. The analysis of the diverse ANN approaches, the observed research patterns, the comparison of clustering efficiencies, and the solutions obtained and tools used make this study unique in its class.

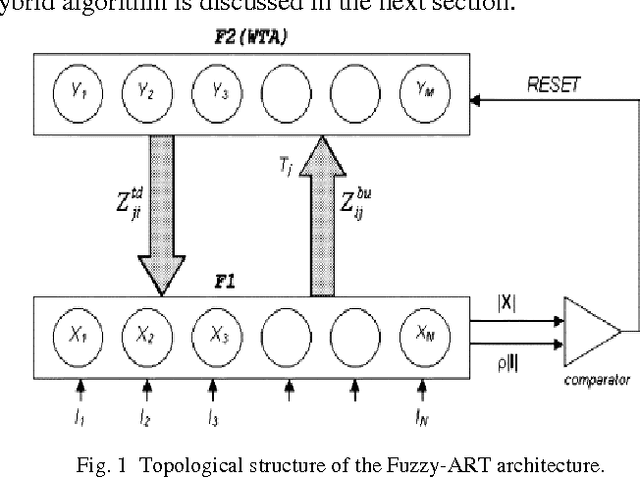

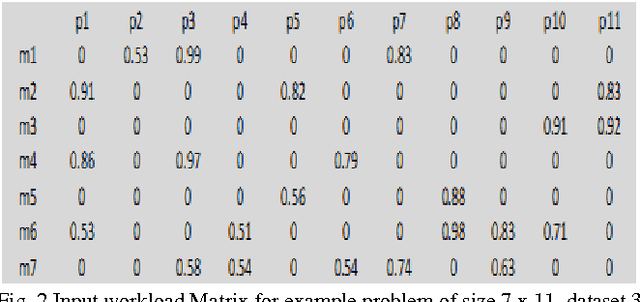

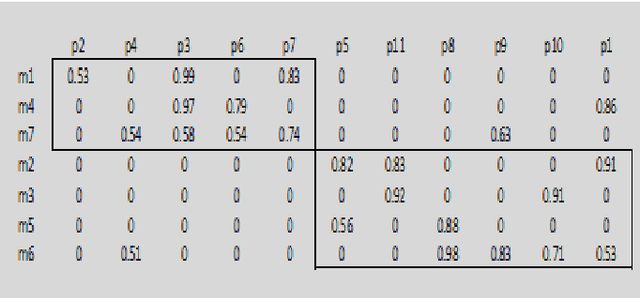

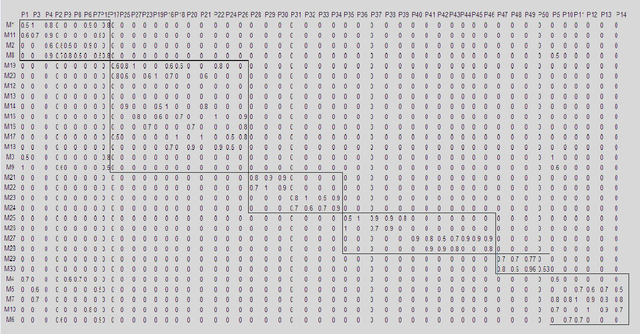

Hybrid Fuzzy-ART based K-Means Clustering Methodology to Cellular Manufacturing Using Operational Time

Dec 20, 2012

Abstract: This paper presents a new hybrid Fuzzy-ART-based K-Means clustering technique to solve the part-machine grouping problem in cellular manufacturing systems, considering operational time. The performance of the proposed technique is tested on problems from the open literature, and the results are compared with existing clustering models, such as the simple K-means algorithm and the modified ART1 algorithm, using an efficient performance measure from the literature known as modified grouping efficiency (MGE). The results support the superior performance of the proposed algorithm. The novelty of this study lies in a simple and efficient methodology that produces quick solutions for shop-floor managers with minimal computational effort and time.