Sourav Dey

CityLearn: Standardizing Research in Multi-Agent Reinforcement Learning for Demand Response and Urban Energy Management

Dec 18, 2020

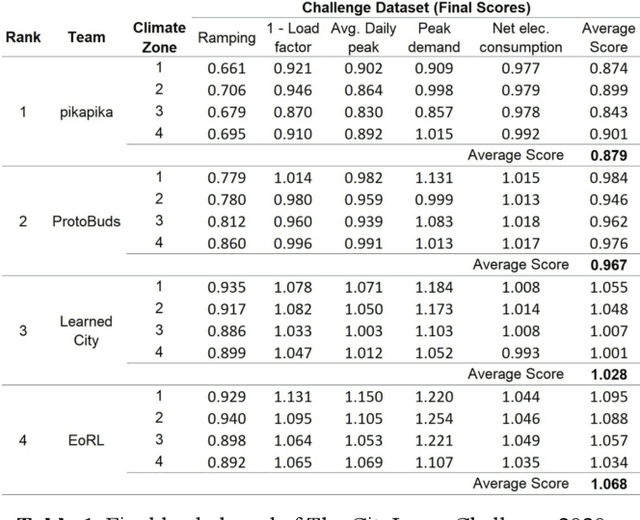

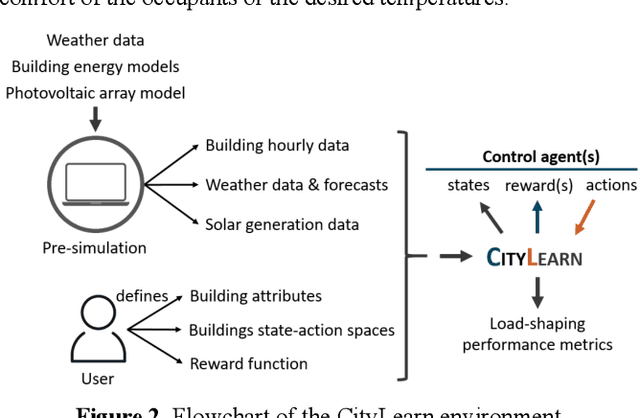

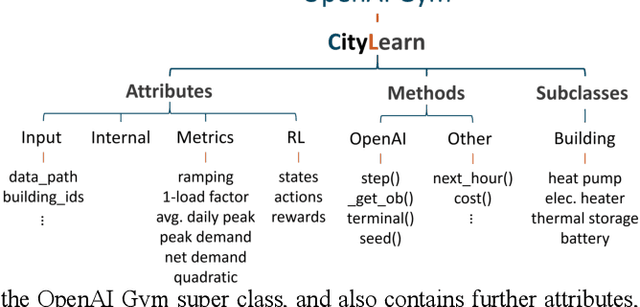

Abstract: Rapid urbanization, increasing integration of distributed renewable energy resources, energy storage, and electric vehicles introduce new challenges for the power grid. In the US, buildings represent about 70% of the total electricity demand, and demand response has the potential to reduce electricity peaks by about 20%. Unlocking this potential requires control systems that operate on distributed systems, ideally data-driven and model-free. For this, reinforcement learning (RL) algorithms have gained increased interest in the past years. However, research in RL for demand response has been lacking the level of standardization that propelled the enormous progress in RL research in the computer science community. To remedy this, we created CityLearn, an OpenAI Gym Environment which allows researchers to implement, share, replicate, and compare their implementations of RL for demand response. Here, we discuss this environment and The CityLearn Challenge, an RL competition we organized to propel further progress in this field.

Deep Residual Auto-Encoders for Expectation Maximization-based Dictionary Learning

Apr 18, 2019

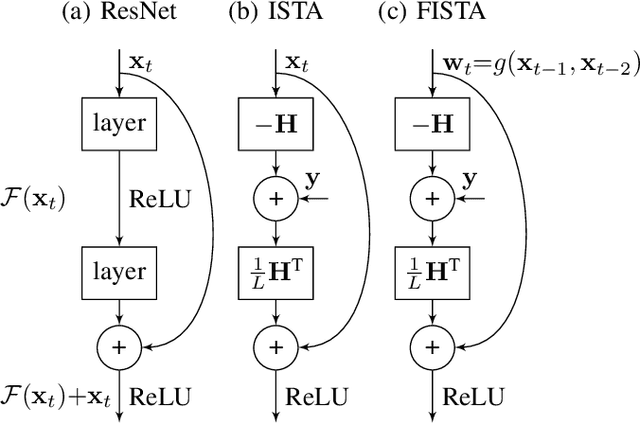

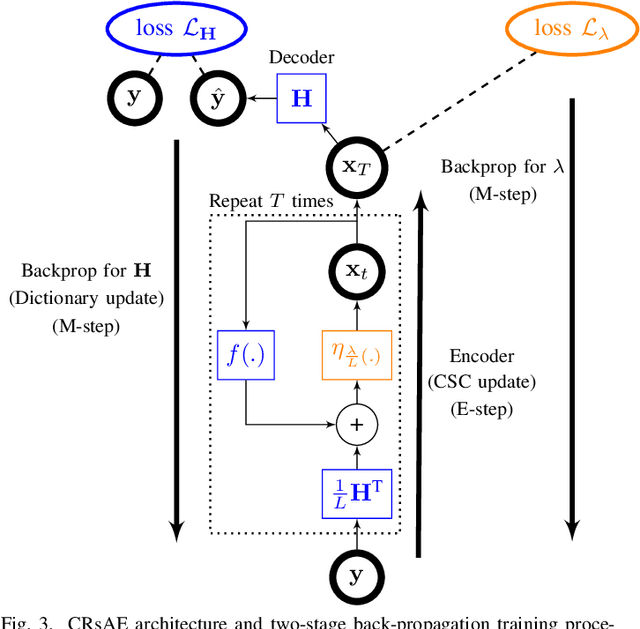

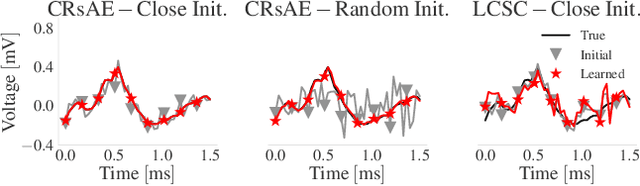

Abstract: Convolutional dictionary learning (CDL) has become a popular method for learning sparse representations from data. State-of-the-art algorithms perform dictionary learning (DL) through an optimization-based alternating-minimization procedure that comprises a sparse-coding step and a dictionary-update step. Here, we draw connections between CDL and neural networks by proposing an architecture for CDL termed the constrained recurrent sparse auto-encoder (CRsAE). We leverage the interpretation of the alternating-minimization algorithm for DL as an Expectation-Maximization algorithm to develop auto-encoders (AEs) that, for the first time, enable the simultaneous training of the dictionary and regularization parameter. The forward pass of the encoder, which performs sparse coding, solves the E-step using an encoding matrix and a soft-thresholding non-linearity imposed by the FISTA algorithm. The encoder in this regard is a variant of residual and recurrent neural networks. The M-step is implemented via a two-stage back-propagation. In the first stage, we perform back-propagation through the AE formed by the encoder and a linear decoder whose parameters are tied to the encoder. This stage parallels the dictionary update step in DL. In the second stage, we update the regularization parameter by performing back-propagation through the encoder using a loss function that includes a prior on the parameter motivated by Bayesian statistics. We leverage GPUs to achieve significant computational gains relative to state-of-the-art optimization-based approaches to CDL. We apply CRsAE to spike sorting, the problem of identifying the time of occurrence of neural action potentials in recordings of electrical activity from the brain. We demonstrate on recordings lasting hours that CRsAE speeds up spike sorting by 900x compared to notoriously slow classical algorithms based on convex optimization.
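The sparse-coding step that the abstract attributes to the encoder (a gradient step followed by soft-thresholding, accelerated by FISTA) can be illustrated with a minimal sketch. This is not the CRsAE implementation: it assumes a small dense dictionary `A` stored as a list of rows rather than a convolutional one, a fixed regularization parameter `lam`, and the objective 0.5 * ||y - A x||^2 + lam * ||x||_1. The function names (`soft_threshold`, `fista`) and the Frobenius-norm step size are choices made for this example only.

```python
import math

def soft_threshold(v, t):
    """Shrink each entry of v toward zero by t (proximal map of t * ||.||_1)."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]

def matvec(A, x):
    """Compute A x for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def rmatvec(A, r):
    """Compute A^T r for a matrix stored as a list of rows."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]

def fista(A, y, lam, num_iters=200):
    """Approximately minimize 0.5 * ||y - A x||^2 + lam * ||x||_1 with FISTA."""
    # Assumption: the squared Frobenius norm is used as a safe (loose) upper
    # bound on the Lipschitz constant of the gradient, i.e. on ||A^T A||_2.
    L = sum(a * a for row in A for a in row)
    x = [0.0] * len(A[0])   # current sparse code
    z = list(x)             # extrapolated (momentum) point
    t = 1.0
    for _ in range(num_iters):
        residual = [p - q for p, q in zip(matvec(A, z), y)]
        grad = rmatvec(A, residual)
        # Gradient step at z, then the soft-thresholding non-linearity.
        x_new = soft_threshold([zi - g / L for zi, g in zip(z, grad)], lam / L)
        # Nesterov-style momentum update that distinguishes FISTA from ISTA.
        t_new = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = [xn + ((t - 1.0) / t_new) * (xn - xo) for xn, xo in zip(x_new, x)]
        x, t = x_new, t_new
    return x

# Usage: encode a 2-sample signal with a hypothetical 2x3 dictionary.
dictionary = [[1.0, 0.0, 0.5],
              [0.0, 1.0, 0.5]]
code = fista(dictionary, [1.0, 0.2], lam=0.1)
```

Each loop iteration plays the role of one recurrent layer of an unrolled encoder: a linear map, a residual-style update, and a thresholding non-linearity. A learned auto-encoder in the spirit of the abstract would additionally train `A` and `lam` by back-propagation, which this sketch does not attempt.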

Scalable Convolutional Dictionary Learning with Constrained Recurrent Sparse Auto-encoders

Jul 12, 2018

Abstract: Given a convolutional dictionary underlying a set of observed signals, can a carefully designed auto-encoder recover the dictionary in the presence of noise? We introduce an auto-encoder architecture, termed constrained recurrent sparse auto-encoder (CRsAE), that answers this question in the affirmative. Given an input signal and an approximate dictionary, the encoder finds a sparse approximation using FISTA. The decoder reconstructs the signal by applying the dictionary to the output of the encoder. The encoder and decoder in CRsAE parallel the sparse-coding and dictionary update steps in optimization-based alternating-minimization schemes for dictionary learning. As such, the parameters of the encoder and decoder are not independent, a constraint which we enforce for the first time. We derive the back-propagation algorithm for CRsAE. CRsAE is a framework for blind source separation that, only knowing the number of sources (dictionary elements), and assuming sparsely-many can overlap, is able to separate them. We demonstrate its utility in the context of spike sorting, a source separation problem in computational neuroscience. We demonstrate the ability of CRsAE to recover the underlying dictionary and characterize its sensitivity as a function of SNR.
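The encoder/decoder coupling described in this abstract can be written out in a generic form. The notation below is an assumption made for illustration (a dense dictionary $D$, signal $y$, sparse code $x$, regularization parameter $\lambda$); the paper's convolutional formulation and symbols may differ.

```latex
% Sparse coding (encoder): approximate solution via FISTA, dictionary held fixed
\hat{x} \;\approx\; \arg\min_{x} \; \tfrac{1}{2}\,\lVert y - D x \rVert_2^2 + \lambda \lVert x \rVert_1

% Reconstruction (decoder): apply the same dictionary to the encoder output
\hat{y} \;=\; D \hat{x}

% Dictionary update: reduce the reconstruction error with respect to D
\min_{D} \; \tfrac{1}{2}\,\lVert y - D \hat{x} \rVert_2^2
```

Because the same $D$ appears in both the encoder and the decoder, their parameters are tied, which is the constraint the abstract refers to.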
