Deep Residual Auto-Encoders for Expectation Maximization-based Dictionary Learning


Apr 18, 2019

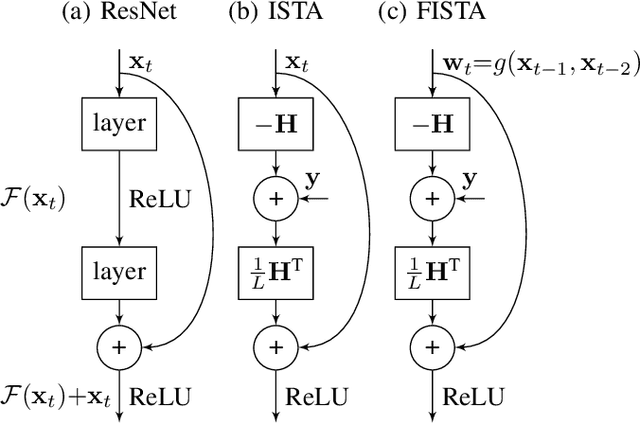

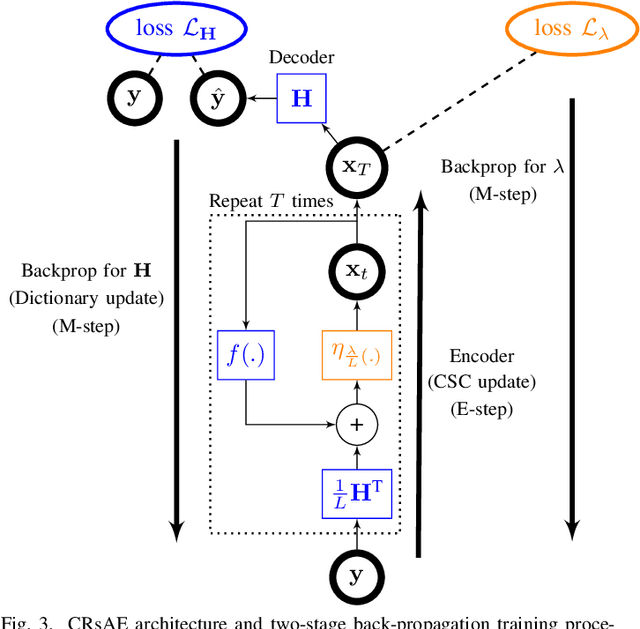

Convolutional dictionary learning (CDL) has become a popular method for learning sparse representations from data. State-of-the-art algorithms perform dictionary learning (DL) through an optimization-based alternating-minimization procedure that alternates between a sparse coding step and a dictionary update step. Here, we draw connections between CDL and neural networks by proposing an architecture for CDL termed the constrained recurrent sparse auto-encoder (CRsAE). We leverage the interpretation of the alternating-minimization algorithm for DL as an Expectation-Maximization (EM) algorithm to develop auto-encoders (AEs) that, for the first time, enable the simultaneous training of the dictionary and the regularization parameter. The forward pass of the encoder, which performs sparse coding, solves the E-step using an encoding matrix and a soft-thresholding non-linearity imposed by the FISTA algorithm. In this regard, the encoder is a variant of residual and recurrent neural networks. The M-step is implemented via a two-stage back-propagation. In the first stage, we perform back-propagation through the AE formed by the encoder and a linear decoder whose parameters are tied to those of the encoder. This stage parallels the dictionary update step in DL. In the second stage, we update the regularization parameter by performing back-propagation through the encoder using a loss function that includes a prior on the parameter motivated by Bayesian statistics. We leverage GPUs to achieve significant computational gains relative to state-of-the-art optimization-based approaches to CDL. We apply CRsAE to spike sorting, the problem of identifying the times of occurrence of neural action potentials in recordings of electrical activity from the brain. We demonstrate on recordings lasting hours that CRsAE speeds up spike sorting by 900x compared to notoriously slow classical algorithms based on convex optimization.
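To make the encoder and decoder described above concrete, the following is a minimal NumPy sketch of sparse coding with FISTA and soft-thresholding, followed by a linear decoder that reuses the same dictionary. It is an illustration under simplifying assumptions, not the authors' implementation: it uses a dense dictionary `D` rather than a convolutional one, it keeps the regularization parameter `lam` fixed instead of learning it, and the names `soft_threshold`, `fista_encode`, and `decode` are hypothetical.

```python
import numpy as np


def soft_threshold(x, thresh):
    """Element-wise soft-thresholding: shrink each entry toward zero by thresh."""
    return np.sign(x) * np.maximum(np.abs(x) - thresh, 0.0)


def fista_encode(y, D, lam, num_iters=200):
    """Approximate argmin_x 0.5 * ||y - D x||^2 + lam * ||x||_1 with FISTA.

    Assumption: D is a dense (non-convolutional) dictionary of shape
    (signal_length, num_atoms); the paper's setting is convolutional.
    """
    # Lipschitz constant of the gradient of the quadratic term:
    # the squared spectral norm of D.
    lipschitz = np.linalg.norm(D, 2) ** 2
    x = np.zeros(D.shape[1])   # current sparse code estimate
    z = x.copy()               # extrapolated (momentum) point
    t = 1.0                    # FISTA momentum scalar
    for _ in range(num_iters):
        residual = y - D @ z
        # Gradient step on the quadratic term, then soft-thresholding.
        x_new = soft_threshold(z + (D.T @ residual) / lipschitz, lam / lipschitz)
        # Momentum update that distinguishes FISTA from plain ISTA.
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = x_new + ((t - 1.0) / t_new) * (x_new - x)
        x, t = x_new, t_new
    return x


def decode(x, D):
    """Linear decoder whose weights are tied to the encoder's dictionary."""
    return D @ x
```

Each loop iteration adds a correction computed from the residual `y - D @ z` to the current estimate and applies the same weights again, which is one way to read the abstract's remark that the encoder is a variant of residual and recurrent networks. A short usage example, with synthetic data chosen only for illustration:

```python
rng = np.random.default_rng(0)
D = rng.standard_normal((20, 5))
D /= np.linalg.norm(D, axis=0)          # unit-norm atoms
x_true = np.array([0.0, 3.0, 0.0, -2.0, 0.0])
y = D @ x_true
x_hat = fista_encode(y, D, lam=0.1)
y_hat = decode(x_hat, D)                # reconstruction from the sparse code
```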
