Sooyoung Jang

Entropy-Aware Model Initialization for Effective Exploration in Deep Reinforcement Learning

Aug 24, 2021

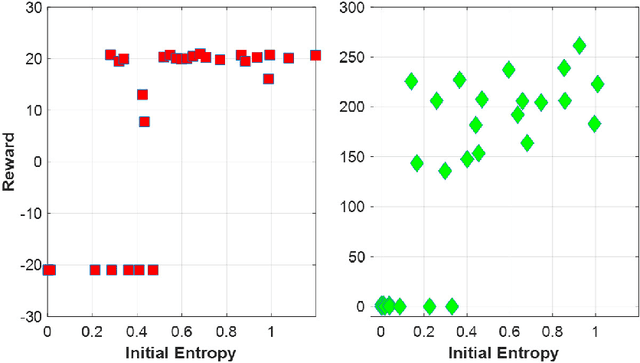

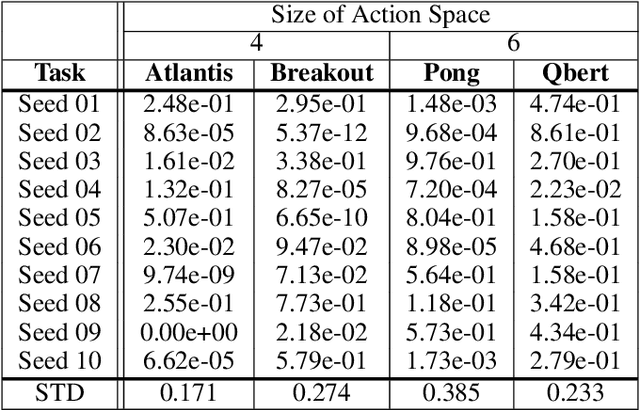

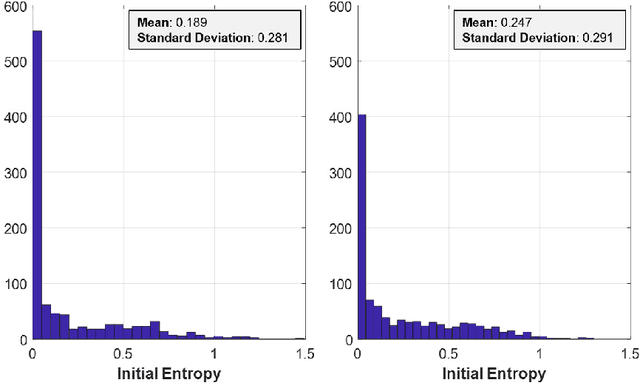

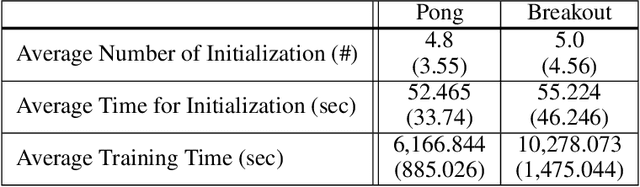

Abstract: Encouraging exploration is a critical issue in deep reinforcement learning. We investigate the effect of initial entropy, which significantly influences exploration, especially in the early stage of training. Our main observations are as follows: 1) low initial entropy increases the probability of learning failure, and 2) initial entropy is biased toward low values, which inhibits exploration. Motivated by these observations, we devise entropy-aware model initialization, a simple yet powerful learning strategy for effective exploration. Through experiments, we show that the devised strategy significantly reduces learning failures and improves performance, stability, and learning speed.
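The abstract does not spell out the initialization procedure, so the following is only an illustrative sketch of one plausible reading: draw random initial weights repeatedly and keep a draw whose policy has high mean entropy over a batch of sample observations. The linear softmax policy, the function names, the threshold of 80% of the maximum entropy, and the retry limit are all assumptions made for this example, not details taken from the paper.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def init_weights(rng, n_obs, n_actions, scale):
    """Hypothetical initializer: Gaussian weights for a linear policy."""
    return [[rng.gauss(0.0, scale) for _ in range(n_obs)]
            for _ in range(n_actions)]


def mean_initial_entropy(weights, observations):
    """Average policy entropy over a batch of observations."""
    total = 0.0
    for obs in observations:
        logits = [sum(w * x for w, x in zip(row, obs)) for row in weights]
        total += entropy(softmax(logits))
    return total / len(observations)


def entropy_aware_init(rng, observations, n_actions, threshold,
                       scale=1.0, max_tries=1000):
    """Re-draw initial weights until mean entropy reaches the threshold.

    Returns the highest-entropy draw seen, even if the threshold
    (an assumed hyperparameter) is never reached within max_tries.
    """
    n_obs = len(observations[0])
    best_weights, best_entropy = None, -1.0
    for _ in range(max_tries):
        weights = init_weights(rng, n_obs, n_actions, scale)
        h = mean_initial_entropy(weights, observations)
        if h > best_entropy:
            best_weights, best_entropy = weights, h
        if h >= threshold:
            break
    return best_weights, best_entropy


rng = random.Random(0)
# Synthetic observations standing in for states sampled from an environment.
observations = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(32)]
max_entropy = math.log(3)  # entropy of a uniform policy over 3 actions
weights, h0 = entropy_aware_init(
    rng, observations, n_actions=3, threshold=0.8 * max_entropy
)
print(f"initial mean entropy: {h0:.3f} of max {max_entropy:.3f}")
```

The selected weights would then be handed to the usual training loop. Rejection sampling is used here only because it is the simplest way to bias the starting policy toward high entropy; the paper may well use a different mechanism.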

Indoor Path Planning for an Unmanned Aerial Vehicle via Curriculum Learning

Aug 23, 2021

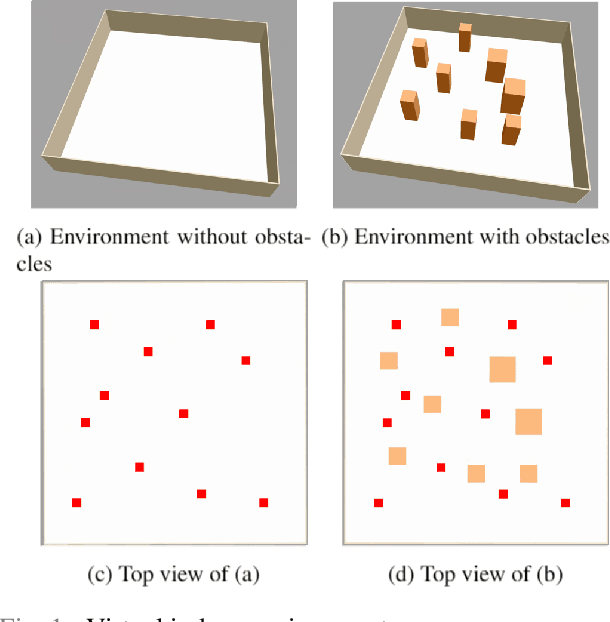

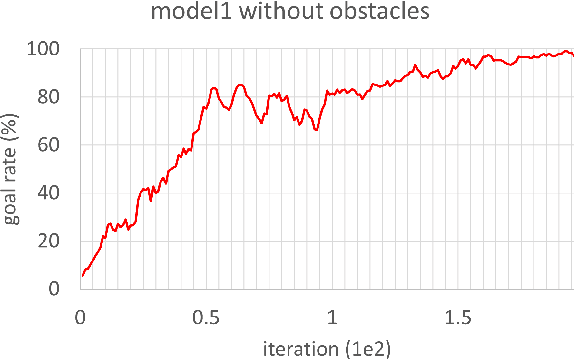

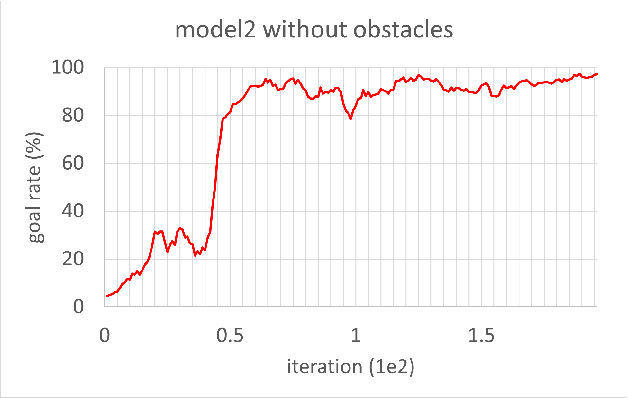

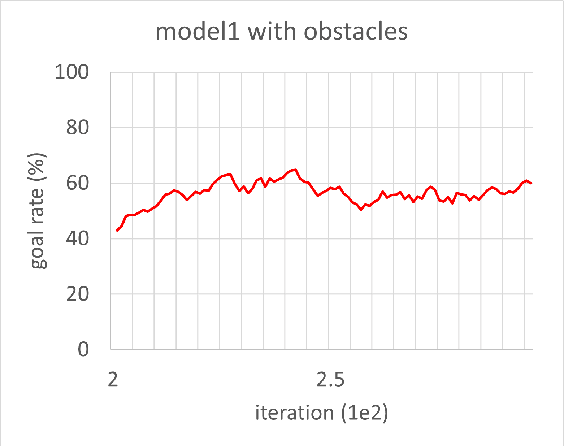

Abstract: In this study, reinforcement learning was applied to learn two-dimensional path planning, including obstacle avoidance, for an unmanned aerial vehicle (UAV) in an indoor environment. The task assigned to the UAV was to reach the goal position in the shortest amount of time without colliding with any obstacles. To reduce learning time and cost, reinforcement learning was performed in a virtual environment created with the Gazebo simulator. Curriculum learning consisting of two stages was used for more efficient learning. Learning with two reward models yielded maximum goal rates of 71.2% and 88.0%.
