Soheil Gharatappeh

Weather-Aware Object Detection Transformer for Domain Adaptation

Apr 15, 2025

Abstract: RT-DETRs have shown strong performance across various computer vision tasks but are known to degrade under challenging weather conditions such as fog. In this work, we investigate three novel approaches to enhance RT-DETR robustness in foggy environments: (1) Domain Adaptation via Perceptual Loss, which distills domain-invariant features from a teacher network to a student using perceptual supervision; (2) Weather Adaptive Attention, which augments the attention mechanism with fog-sensitive scaling by introducing an auxiliary foggy image stream; and (3) Weather Fusion Encoder, which integrates a dual-stream encoder architecture that fuses clear and foggy image features via multi-head self- and cross-attention. Despite the architectural innovations, none of the proposed methods consistently outperforms the baseline RT-DETR. We analyze the limitations and potential causes, offering insights for future research in weather-aware object detection.
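As a rough illustration of the cross-attention fusion mentioned in the abstract, the sketch below lets tokens from one stream attend to tokens from the other. It is not the paper's Weather Fusion Encoder: it uses a single head, no learned projections, and plain Python lists, and the names `cross_attention`, `clear_tokens`, and `foggy_tokens` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention without learned projections.

    queries: tokens from one stream (here, clear-image features)
    keys, values: tokens from the other stream (here, foggy-image features)
    Returns one fused vector per query token.
    """
    dim = len(keys[0])
    fused = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        fused.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return fused

# Toy features (assumed values, for illustration only).
clear_tokens = [[1.0, 0.0], [0.0, 1.0]]
foggy_tokens = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
fused_tokens = cross_attention(clear_tokens, foggy_tokens, foggy_tokens)
```

Each fused vector is a convex combination of the foggy tokens, weighted toward those most similar to the clear query. A multi-head version would apply this per head on learned projections and concatenate the results.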

Information Consistent Pruning: How to Efficiently Search for Sparse Networks?

Jan 26, 2025

Abstract: Iterative magnitude pruning methods (IMPs), proven to be successful in reducing the number of insignificant nodes in over-parameterized deep neural networks (DNNs), have attracted considerable attention with the rapid deployment of DNNs into cutting-edge technologies with computation and memory constraints. Despite the popularity of IMPs for pruning networks, a fundamental limitation of existing IMP algorithms is the significant training time required for each pruning iteration. Our paper introduces a novel stopping criterion for IMPs that monitors information and gradient flows between network layers and minimizes the training time. Information Consistent Pruning eliminates the need to retrain the network to its original performance during intermediate steps while maintaining overall performance at the end of the pruning process. Through our experiments, we demonstrate that our algorithm is more efficient than current IMPs across multiple dataset-DNN combinations. We also provide theoretical insights into the core idea of our algorithm alongside mathematical explanations of flow-based IMP. Our code is available at https://github.com/Sekeh-Lab/InfCoP.
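For readers unfamiliar with iterative magnitude pruning, a generic train-then-prune loop can be sketched as follows. This is not the paper's algorithm: the flow-based stopping criterion is not reproduced here, and `train_fn` is a hypothetical placeholder marking where such a criterion would decide how long to train in each round.

```python
def magnitude_prune(weights, mask, fraction):
    """Drop the smallest-magnitude `fraction` of the weights still kept by `mask`."""
    alive = [i for i, keep in enumerate(mask) if keep]
    n_prune = int(len(alive) * fraction)
    # Sort surviving indices by absolute weight, smallest first.
    to_prune = sorted(alive, key=lambda i: abs(weights[i]))[:n_prune]
    new_mask = list(mask)
    for i in to_prune:
        new_mask[i] = False
    return new_mask

def iterative_magnitude_pruning(weights, rounds, fraction, train_fn):
    """Alternate training and pruning for a fixed number of rounds.

    train_fn(weights, mask) is a hypothetical callback that returns updated
    weights; an early-stopping rule would live inside it.
    """
    mask = [True] * len(weights)
    for _ in range(rounds):
        weights = train_fn(weights, mask)
        mask = magnitude_prune(weights, mask, fraction)
    pruned = [w if keep else 0.0 for w, keep in zip(weights, mask)]
    return pruned, mask

# Toy run with a no-op "training" step (assumed values, for illustration only).
initial = [0.5, -0.1, 0.3, -0.8, 0.05, 0.2, -0.4, 0.9]
pruned, mask = iterative_magnitude_pruning(
    initial, rounds=2, fraction=0.5, train_fn=lambda w, m: w
)
```

With two rounds at 50% each, only the two largest-magnitude weights survive. In a real setting the cost is dominated by `train_fn`, which is the part a stopping criterion aims to shorten.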

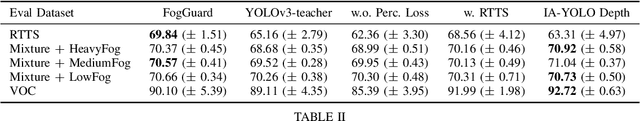

FogGuard: guarding YOLO against fog using perceptual loss

Mar 13, 2024

Abstract: In this paper, we present a novel fog-aware object detection network called FogGuard, designed to address the challenges posed by foggy weather conditions. Autonomous driving systems rely heavily on accurate object detection algorithms, but adverse weather conditions can significantly impact the reliability of deep neural networks (DNNs). Existing approaches fall into two main categories: (1) image enhancement, such as IA-YOLO, and (2) domain adaptation. Image-enhancement techniques attempt to generate a fog-free image. However, recovering a fogless image from a foggy image is a much harder problem than detecting objects in a foggy image. Domain-adaptation approaches, on the other hand, do not make use of labelled datasets in the target domain. Both categories therefore attempt to solve a harder version of the problem. Our approach builds on fine-tuning, and our framework is specifically designed to compensate for foggy conditions present in the scene, ensuring robust performance. We adopt YOLOv3 as the baseline object detection algorithm and introduce a novel Teacher-Student Perceptual loss to achieve high-accuracy object detection in foggy images. Through extensive evaluations on common datasets such as PASCAL VOC and RTTS, we demonstrate the improvement in performance achieved by our network. On the RTTS dataset, FogGuard achieves 69.43% mAP, compared to 57.78% for YOLOv3. Furthermore, we show that while our training method increases time complexity, it does not introduce any additional overhead during inference compared to the regular YOLO network.
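One common way to form a teacher-student perceptual loss is to penalize the distance between intermediate features of the two networks. The sketch below assumes the teacher sees a clear image and the student sees its foggy counterpart, uses mean squared error per layer, and represents each layer's features as a flat list. These choices, along with the names `perceptual_loss` and `perceptual_weight`, are assumptions and not details taken from the abstract.

```python
def feature_mse(student_feat, teacher_feat):
    """Mean squared error between two flattened feature vectors of equal length."""
    if len(student_feat) != len(teacher_feat):
        raise ValueError("feature vectors must have the same length")
    return sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat)) / len(student_feat)

def perceptual_loss(student_feats, teacher_feats):
    """Sum of per-layer MSE between student and teacher features."""
    return sum(feature_mse(s, t) for s, t in zip(student_feats, teacher_feats))

# Toy features from two layers (assumed values, for illustration only).
teacher_feats = [[1.0, 2.0], [0.5, 0.5, 0.5]]   # teacher on the clear image
student_feats = [[1.0, 1.0], [0.5, 0.0, 0.5]]   # student on the foggy image

loss = perceptual_loss(student_feats, teacher_feats)

# Hypothetical combination with a detection loss during training.
detection_loss = 1.2       # placeholder value
perceptual_weight = 0.1    # hypothetical hyperparameter
total_loss = detection_loss + perceptual_weight * loss
```

Because the teacher is only needed to compute this training signal, a scheme of this kind adds cost during training but leaves the student's inference path unchanged, which is consistent with the abstract's remark about inference overhead.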
