Soham Bhosale

Improving CT Image Segmentation Accuracy Using StyleGAN Driven Data Augmentation

Feb 07, 2023

Abstract: Medical image segmentation is a useful application of medical image analysis, including the detection of diseases and abnormalities in imaging modalities such as MRI and CT. Deep learning has proven promising for this task but usually achieves low accuracy because appropriate publicly available annotated (segmented) medical datasets are scarce. In addition, the datasets that are available may differ in texture from the images that need to be segmented because of different dosage values or scanner properties. This paper presents a StyleGAN-driven approach for segmenting large, publicly available medical datasets using readily available, extremely small annotated datasets in similar modalities. The approach augments the small segmented dataset and eliminates texture differences between the two datasets. Specifically, style transfer is used for augmentation: the small dataset is passed through six different StyleGANs, each trained on a different style image taken from the large non-annotated dataset to be segmented. The annotations of the training dataset are thus combined with the textures of the non-annotated dataset to generate new, anatomically sound images. The augmented dataset is then used to train a U-Net segmentation network, which shows a significant improvement in segmentation accuracy on the large non-annotated dataset.
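The pairing logic of this augmentation can be illustrated with a minimal sketch. It assumes NumPy, uses random arrays in place of CT slices, and swaps in simple histogram matching as a stand-in for the six trained StyleGANs; it is not the paper's method or code. What it shows is that each annotated slice yields one restyled copy per style image, and the original mask is reused unchanged because only the texture, not the anatomy, is altered. The names `match_histogram` and `augment_with_styles` are hypothetical.

```python
import numpy as np


def match_histogram(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Remap source intensities onto the reference intensity distribution.

    Hypothetical stand-in for a trained style-transfer generator (NOT StyleGAN):
    it changes the intensity distribution of the image but keeps pixel geometry.
    """
    src_values, src_idx, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True
    )
    ref_values, ref_counts = np.unique(reference.ravel(), return_counts=True)
    # Empirical cumulative distributions of both images
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size
    # Each source intensity takes the reference intensity at the same quantile
    matched = np.interp(src_cdf, ref_cdf, ref_values)
    return matched[src_idx].reshape(source.shape)


def augment_with_styles(pairs, style_refs):
    """Expand each (image, mask) pair into the original plus one copy per style."""
    augmented = []
    for image, mask in pairs:
        augmented.append((image, mask))
        for ref in style_refs:
            # Texture changes, anatomy does not, so the annotation is reused as-is
            augmented.append((match_histogram(image, ref), mask))
    return augmented


# Hypothetical data: 4 small annotated slices and 6 unannotated "style" slices
rng = np.random.default_rng(0)
annotated = [
    (rng.normal(0.0, 1.0, (64, 64)), rng.integers(0, 2, (64, 64)))
    for _ in range(4)
]
styles = [rng.normal(float(loc), 2.0, (64, 64)) for loc in range(6)]

augmented = augment_with_styles(annotated, styles)
print(len(augmented))  # 4 * (1 + 6) = 28
```

In the approach described above, the restyled images would instead come from StyleGANs trained on style images from the target dataset; the part this sketch is meant to convey is how each generated image inherits the unchanged annotation of its source slice.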

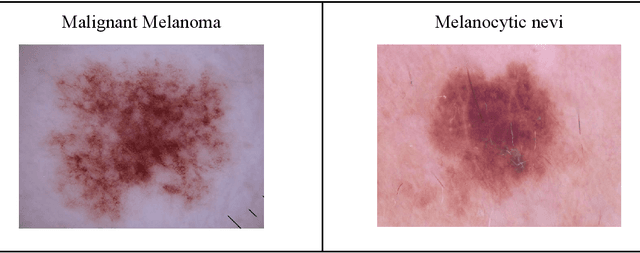

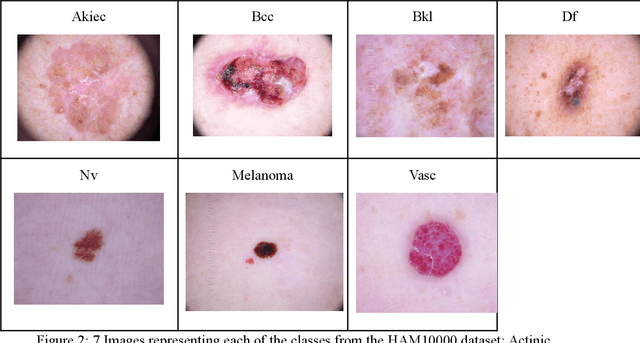

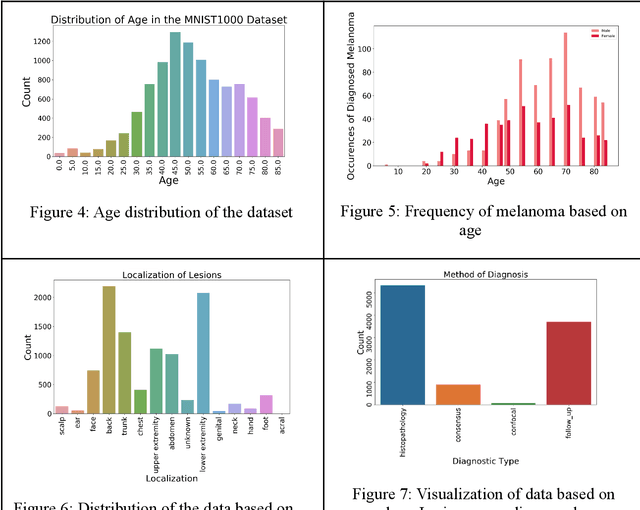

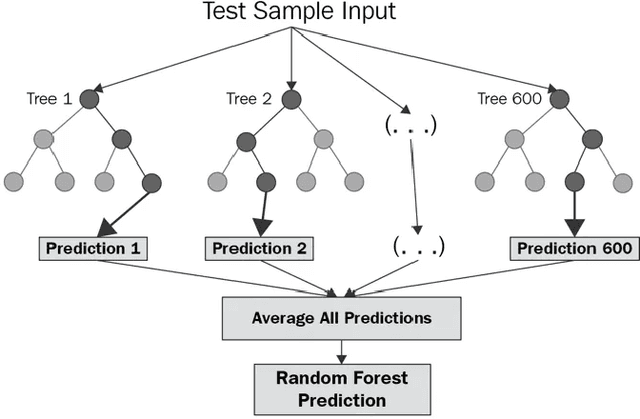

Comparison of Deep Learning and Machine Learning Models and Frameworks for Skin Lesion Classification

Jul 26, 2022

Abstract: The incidence rate of skin cancer has been steadily increasing worldwide, making it a serious issue. Diagnosis at an early stage has the potential to drastically reduce the harm caused by the disease; however, the traditional biopsy is a labor-intensive and invasive procedure. In addition, many rural communities lack easy access to hospitals and are reluctant to visit one for what they feel might be a minor issue. Using machine learning and deep learning for skin cancer classification can increase accessibility and reduce the uncomfortable procedures involved in the traditional lesion detection process. These models can be wrapped in web or mobile apps to serve a larger population. In this paper, two such models are tested on the benchmark HAM10000 dataset of common skin lesions: Random Forest with Stratified K-Fold Validation, and MobileNetV2 (referred to as MobileNet throughout the rest of the paper). The MobileNet model was trained separately using both the TensorFlow and PyTorch frameworks. A side-by-side comparison of deep learning and machine learning models, and of the same deep learning model across different frameworks, for skin lesion diagnosis in a resource-constrained mobile environment has not been conducted before. The results indicate that each model performs better at different classification tasks. For greater overall recall, accuracy, and detection of malignant melanoma, the TensorFlow MobileNet was the better choice. However, for detecting noncancerous skin lesions, the PyTorch MobileNet proved to be better. Random Forest was the better algorithm when low computational cost was the priority, while still offering moderate correctness.
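The Random Forest with Stratified K-Fold Validation setup can be sketched with scikit-learn. This is an illustrative assumption, not the paper's code: synthetic features from `make_classification` stand in for HAM10000 images (the abstract does not say how images are turned into features), the class weights are only meant to be heavily imbalanced across seven classes, and the hyperparameters (`n_splits=5`, `n_estimators=100`) are placeholders. Stratification keeps each fold's class proportions close to those of the full dataset, so rare lesion classes are not missing from some test folds.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, recall_score
from sklearn.model_selection import StratifiedKFold

# Synthetic stand-in for per-image lesion features (NOT real HAM10000 data):
# seven classes with illustrative, heavily imbalanced weights.
X, y = make_classification(
    n_samples=1400,
    n_features=32,
    n_informative=12,
    n_classes=7,
    weights=[0.67, 0.11, 0.11, 0.05, 0.03, 0.02, 0.01],
    random_state=0,
)


def evaluate_rf_stratified(X, y, n_splits=5, seed=0):
    """Mean accuracy and macro recall of a Random Forest over stratified folds."""
    skf = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    accuracies, recalls = [], []
    for train_idx, test_idx in skf.split(X, y):
        clf = RandomForestClassifier(n_estimators=100, random_state=seed)
        clf.fit(X[train_idx], y[train_idx])
        pred = clf.predict(X[test_idx])
        accuracies.append(accuracy_score(y[test_idx], pred))
        # Macro recall weights every class equally, so rare classes are not drowned out
        recalls.append(
            recall_score(y[test_idx], pred, average="macro", zero_division=0)
        )
    return float(np.mean(accuracies)), float(np.mean(recalls))


mean_acc, mean_recall = evaluate_rf_stratified(X, y)
print(f"accuracy={mean_acc:.3f}  macro recall={mean_recall:.3f}")
```

Replacing `StratifiedKFold` with plain `KFold` would allow folds whose class mix drifts away from the overall distribution, which is the failure mode stratification is meant to guard against on an imbalanced dataset.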
