Siqi Bu

How to Use Reinforcement Learning to Facilitate Future Electricity Market Design? Part 2: Method and Applications

May 12, 2023

Abstract: This two-part paper develops a paradigmatic theory and detailed methods for joint electricity market design using reinforcement-learning (RL)-based simulation. In Part 2, this theory is further demonstrated by elaborating detailed methods of designing an electricity spot market (ESM), together with a reserved capacity (RC) product in the ancillary service market (ASM) and a virtual bidding (VB) product in the financial market (FM). Following the theory proposed in Part 1, the market design options in the joint market are first specified. Then, the Markov game model is developed, in which we show how to incorporate market design options and uncertain risks into the model formulation. A multi-agent proximal policy optimization (MAPPO) algorithm is elaborated as a practical implementation of the generalized market simulation method developed in Part 1. Finally, the case study demonstrates how to pick the best market design options by using some of the market operation performance indicators proposed in Part 1, based on the simulation results generated by implementing the MAPPO algorithm. The impacts of different market design options on market participants' bidding strategy preferences are also discussed.

An Imitation Learning Based Algorithm Enabling Priori Knowledge Transfer in Modern Electricity Markets for Bayesian Nash Equilibrium Estimation

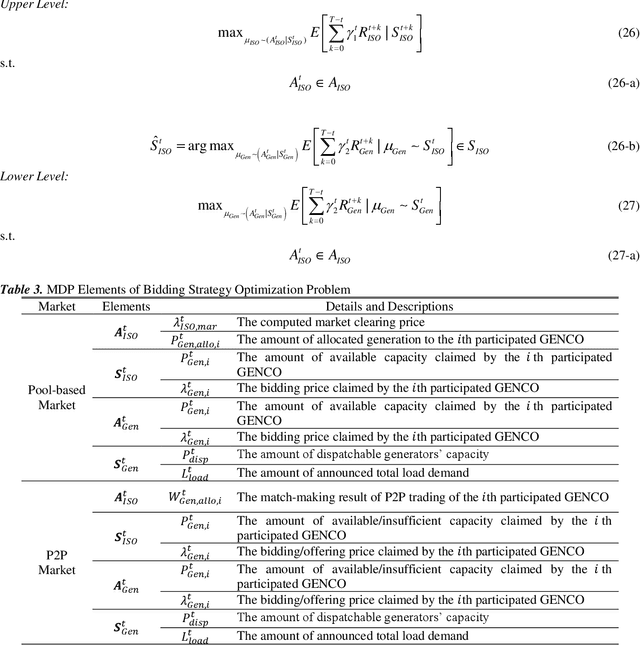

May 12, 2023

Abstract: The Nash Equilibrium (NE) estimation in bidding games of electricity markets is the key concern of both generation companies (GENCOs) for bidding strategy optimization and the Independent System Operator (ISO) for market surveillance. However, existing methods for NE estimation in emerging modern electricity markets (FEM) are inaccurate and inefficient because the priori knowledge of bidding strategies before any environment changes, such as load demand variations, network congestion, and modifications of market design, is not fully utilized. In this paper, a Bayes-adaptive Markov Decision Process in FEM (BAMDP-FEM) is therefore developed to model the GENCOs' bidding strategy optimization considering the priori knowledge. A novel Multi-Agent Generative Adversarial Imitation Learning algorithm (MAGAIL-FEM) is then proposed to enable GENCOs to learn simultaneously from priori knowledge and interactions with changing environments. The obtained NE is a Bayesian Nash Equilibrium (BNE) with priori knowledge transferred from the previous environment. In the case study, the superiority of this proposed algorithm in terms of convergence speed compared with conventional methods is verified. It is concluded that the optimal bidding strategies in the obtained BNE can always lead to more profits than NE due to the effective learning from the priori knowledge. Also, BNE is more accurate and consistent with situations in real-world markets.

How to Use Reinforcement Learning to Facilitate Future Electricity Market Design? Part 1: A Paradigmatic Theory

May 12, 2023

Abstract: In the face of the pressing need for decarbonization in the power sector, the redesign of the electricity market is necessary as a macro-level approach to accommodate the high penetration of renewable generation and to achieve power system operation security, economic efficiency, and environmental friendliness. However, existing market design methodologies suffer from a lack of coordination among the energy spot market (ESM), ancillary service market (ASM), and financial market (FM), i.e., the "joint market", and from a lack of reliable simulation-based verification. To tackle these deficiencies, this two-part paper develops a paradigmatic theory and detailed methods for joint market design using reinforcement-learning (RL)-based simulation. In Part 1, the theory and framework of this novel market design philosophy are proposed. First, the controversial market design options that arise when designing the joint market are summarized as the targeted research questions. Second, the Markov game model is developed to describe the bidding game in the joint market, incorporating the market design options to be determined. Third, a framework for deploying multiple types of RL algorithms to simulate the market model is developed. Finally, several market operation performance indicators are proposed to validate the market design based on the simulation results.

Applications of Reinforcement Learning in Deregulated Power Market: A Comprehensive Review

May 07, 2022

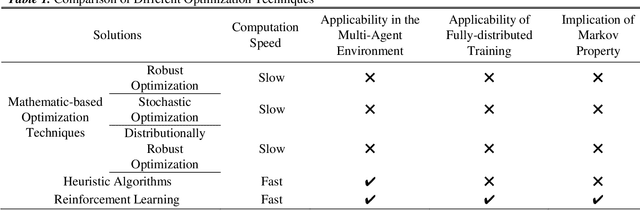

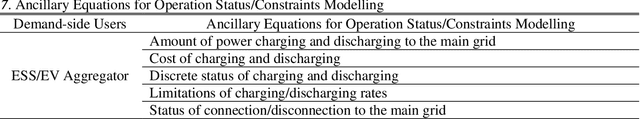

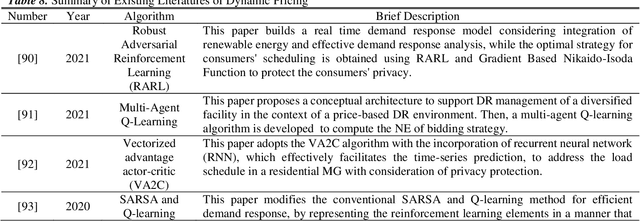

Abstract: The increasing penetration of renewable generation, along with the deregulation and marketization of the power industry, promotes the transformation of power market operation paradigms. The optimal bidding strategy and dispatching methodology under these new paradigms are prioritized concerns for both market participants and power system operators, with obstacles including uncertain characteristics, computational efficiency, and requirements for hyperopic decision-making. To tackle these problems, Reinforcement Learning (RL), an emerging machine learning technique with advantages over conventional optimization tools, is playing an increasingly significant role in both academia and industry. This paper presents a comprehensive review of RL applications in deregulated power market operation, including bidding and dispatching strategy optimization, based on more than 150 carefully selected publications. For each application, apart from a paradigmatic summary of the generalized methodology, in-depth discussions of applicability and obstacles in deploying RL techniques are also provided. Finally, some RL techniques that have great potential to be deployed in bidding and dispatching problems are recommended and discussed.
