Sina Hafezi

Subspace Hybrid MVDR Beamforming for Augmented Hearing

Nov 30, 2023

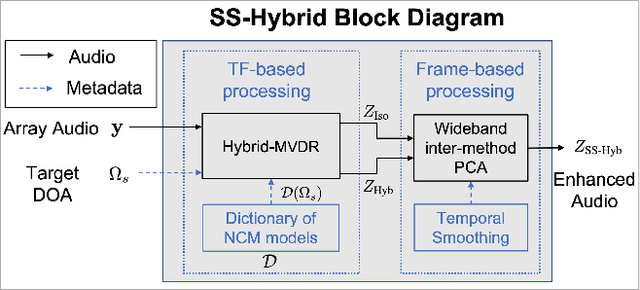

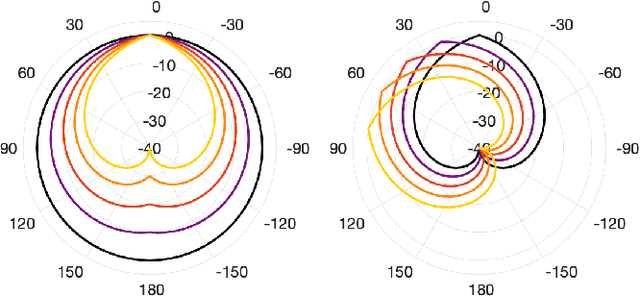

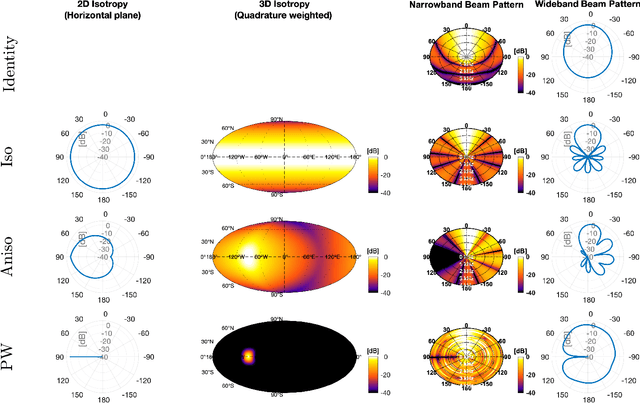

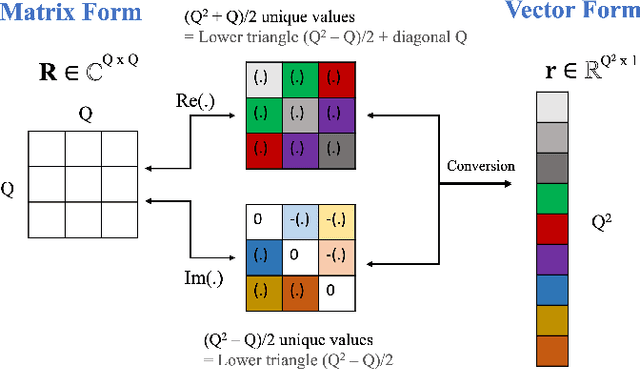

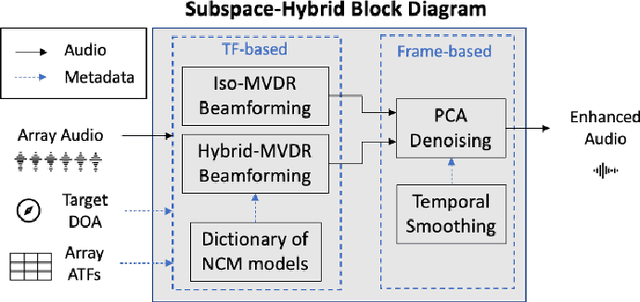

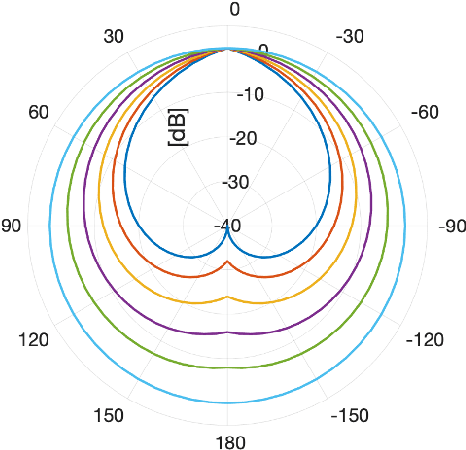

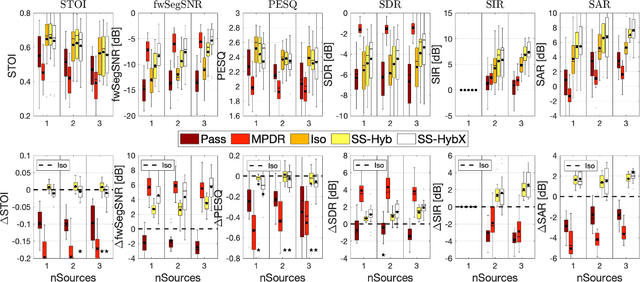

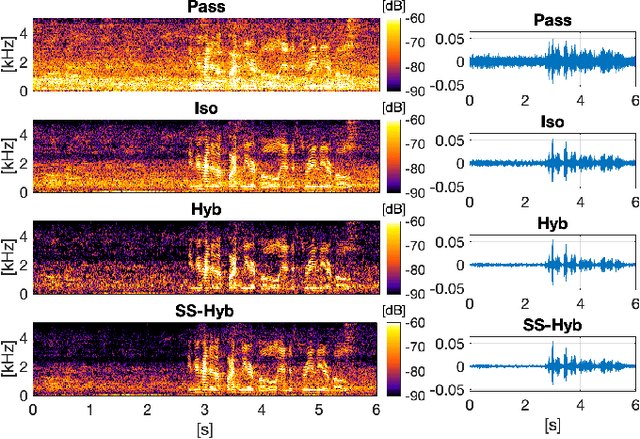

Abstract:Signal-dependent beamformers are advantageous over signal-independent beamformers when the acoustic scenario - be it real-world or simulated - is straightforward in terms of the number of sound sources, the ambient sound field and their dynamics. However, in the context of augmented reality audio using head-worn microphone arrays, the acoustic scenarios encountered are often far from straightforward. The design of robust, high-performance, adaptive beamformers for such scenarios is an on-going challenge. This is due to the violation of the typically required assumptions on the noise field caused by, for example, rapid variations resulting from complex acoustic environments, and/or rotations of the listener's head. This work proposes a multi-channel speech enhancement algorithm which utilises the adaptability of signal-dependent beamformers while still benefiting from the computational efficiency and robust performance of signal-independent super-directive beamformers. The algorithm has two stages. (i) The first stage is a hybrid beamformer based on a dictionary of weights corresponding to a set of noise field models. (ii) The second stage is a wide-band subspace post-filter to remove any artifacts resulting from (i). The algorithm is evaluated using both real-world recordings and simulations of a cocktail-party scenario. Noise suppression, intelligibility and speech quality results show a significant performance improvement by the proposed algorithm compared to the baseline super-directive beamformer. A data-driven implementation of the noise field dictionary is shown to provide more noise suppression, and similar speech intelligibility and quality, compared to a parametric dictionary.

Subspace Hybrid Beamforming for Head-worn Microphone Arrays

Mar 15, 2023

Abstract:A two-stage multi-channel speech enhancement method is proposed which consists of a novel adaptive beamformer, Hybrid Minimum Variance Distortionless Response (MVDR), Isotropic-MVDR (Iso), and a novel multi-channel spectral Principal Components Analysis (PCA) denoising. In the first stage, the Hybrid-MVDR performs multiple MVDRs using a dictionary of pre-defined noise field models and picks the minimum-power outcome, which benefits from the robustness of signal-independent beamforming and the performance of adaptive beamforming. In the second stage, the outcomes of Hybrid and Iso are jointly used in a two-channel PCA-based denoising to remove the 'musical noise' produced by Hybrid beamformer. On a dataset of real 'cocktail-party' recordings with head-worn array, the proposed method outperforms the baseline superdirective beamformer in noise suppression (fwSegSNR, SDR, SIR, SAR) and speech intelligibility (STOI) with similar speech quality (PESQ) improvement.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge