Sina Akbarian

A Computer Vision Approach to Combat Lyme Disease

Sep 24, 2020

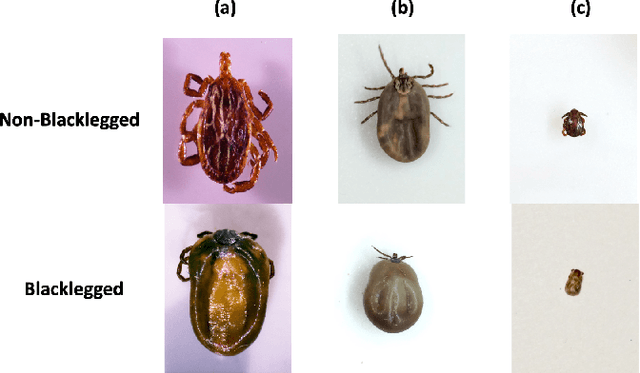

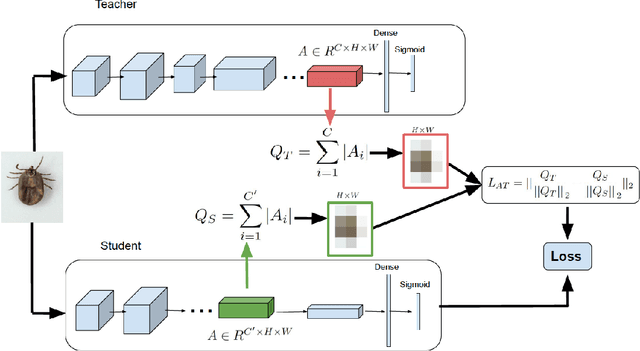

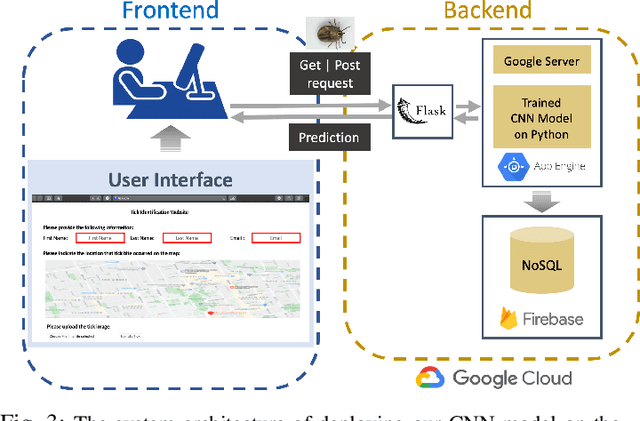

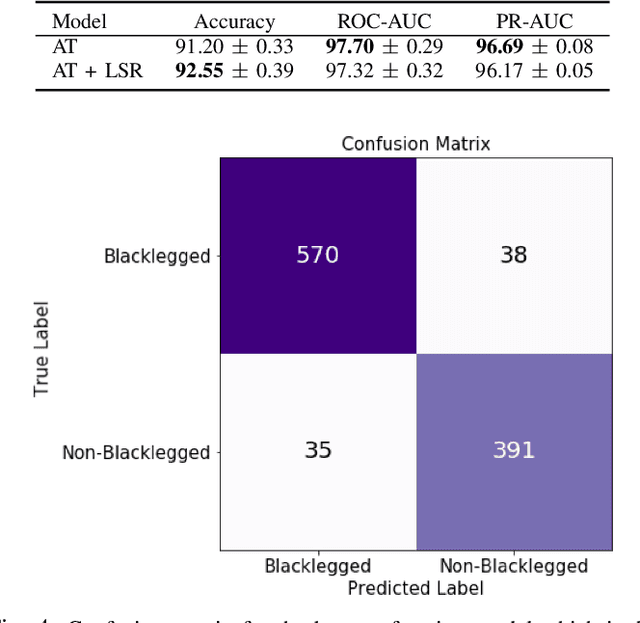

Abstract: Lyme disease is an infectious disease transmitted to humans by the bite of an infected tick of the Ixodes species (blacklegged ticks). It is one of the fastest-growing vector-borne illnesses in North America and is expanding its geographic footprint. Lyme disease treatment is time-sensitive: the disease can be cured by administering an antibiotic (prophylaxis) to the patient within 72 hours of a bite by an Ixodes tick. However, laboratory-based identification of each tick that might carry the bacteria is time-consuming and labour-intensive, and cannot meet the maximum turnaround time of 72 hours for effective treatment. Early identification of blacklegged ticks using computer vision technologies is a potential solution for promptly identifying a tick and administering prophylaxis within this crucial window. In this work, we build an automated detection tool that can differentiate blacklegged ticks from other tick species using advanced deep learning and computer vision approaches. We demonstrate the classification of tick species using Convolutional Neural Network (CNN) models trained end-to-end directly from tick images. Advanced knowledge transfer techniques within teacher-student learning frameworks are adopted to improve the classification of tick species. Our best CNN model achieves 92% accuracy on the test set. The tool can be integrated with the geography of exposure to determine the risk of Lyme disease infection and the need for prophylaxis treatment.

Evaluating Knowledge Transfer in Neural Network for Medical Images

Sep 17, 2020

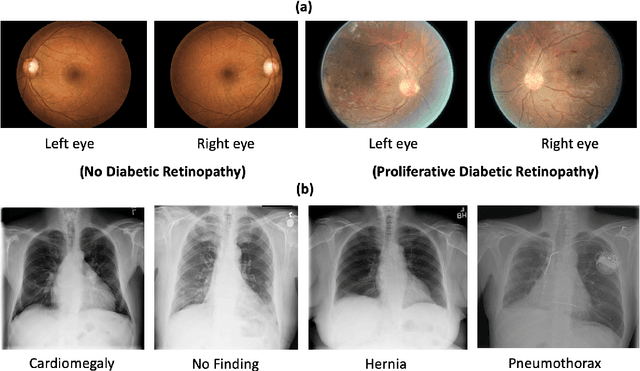

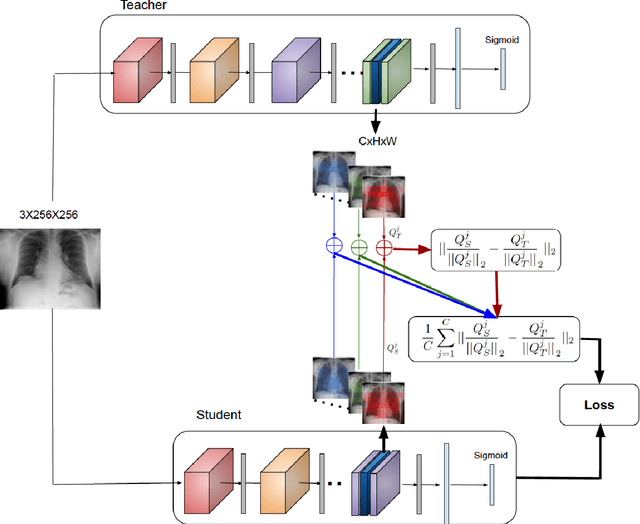

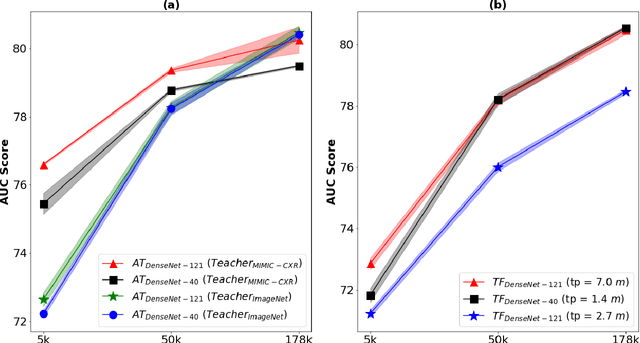

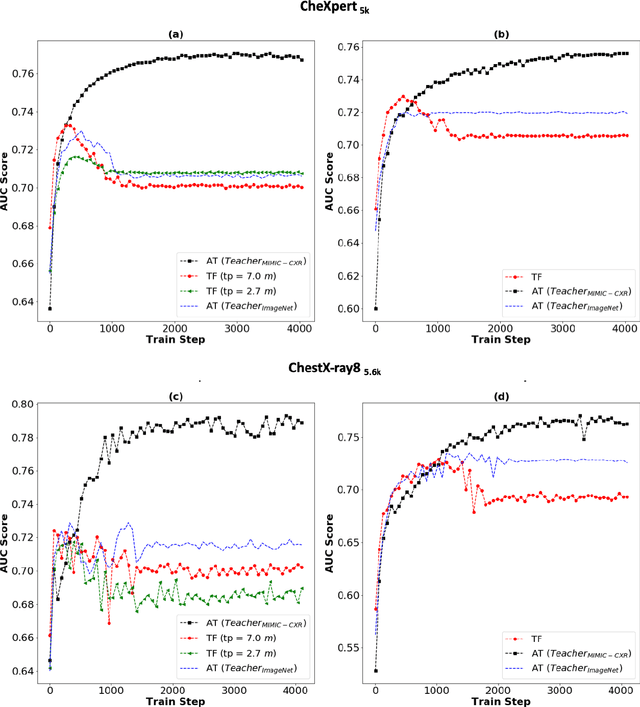

Abstract: Deep learning and knowledge transfer techniques have permeated the field of medical imaging and are considered key approaches for revolutionizing diagnostic imaging practices. However, successfully integrating deep learning into medical imaging tasks remains challenging because large annotated imaging datasets are lacking. To address this issue, we propose a teacher-student learning framework to transfer knowledge from a carefully pre-trained convolutional neural network (CNN) teacher to a student CNN. In this study, we explore the performance of knowledge transfer in the medical imaging setting. We investigate the proposed network's performance when the student network is trained on a small dataset (the target dataset), as well as when the teacher's and student's domains are distinct. The performance of the CNN models is evaluated on three medical imaging datasets: Diabetic Retinopathy, CheXpert, and ChestX-ray8. Our results indicate that the teacher-student learning framework outperforms transfer learning for small imaging datasets. In particular, the teacher-student learning framework improves the area under the ROC curve (AUC) of the CNN model on a small sample of CheXpert (n=5k) by 4% and on ChestX-ray8 (n=5.6k) by 9%. Beyond the small-training-set case, we also demonstrate a clear advantage of the teacher-student learning framework over transfer learning in the medical imaging setting. We observe that the teacher-student network holds great promise not only to improve diagnostic performance but also to reduce overfitting when the dataset is small.
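
The abstract does not say which loss is used to transfer knowledge from teacher to student. As an illustration only, the sketch below shows one common formulation, soft-target distillation, in which the student is trained to match the teacher's temperature-softened output distribution while also fitting the true label. The function names, the `temperature` and `alpha` parameters, and the choice of this particular loss are assumptions made for the example, not details taken from the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Hypothetical teacher-student loss for one example (assumed form).

    Combines a soft-target term, KL(teacher || student) computed at the
    given temperature, with ordinary cross-entropy on the true label.
    alpha weights the soft-target term; (1 - alpha) weights the hard label.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    cross_entropy = -math.log(softmax(student_logits)[true_label])
    # The T^2 factor keeps the soft-target gradients on a comparable scale.
    return alpha * temperature ** 2 * kl + (1 - alpha) * cross_entropy


# Example: a student that disagrees with the teacher is penalised more.
agreeing = distillation_loss([0.0, 2.0], [0.0, 2.0], true_label=1)
disagreeing = distillation_loss([2.0, 0.0], [0.0, 2.0], true_label=1)
print(agreeing < disagreeing)
```

In a real training loop the teacher's logits would come from the pre-trained CNN with its weights frozen, and this per-example loss would be averaged over each mini-batch of the small target dataset.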
