Simone Disabato

Tiny Machine Learning for Concept Drift

Jul 30, 2021

Abstract: Tiny Machine Learning (TML) is a new research area whose goal is to design machine and deep learning techniques able to operate in Embedded Systems and IoT units, hence satisfying the severe technological constraints on memory, computation, and energy characterizing these pervasive devices. Interestingly, the related literature has mainly focused on reducing the computational and memory demand of the inference phase of machine and deep learning models. At the same time, the training is typically assumed to be carried out in Cloud or edge computing systems (due to the larger memory and computational requirements). This assumption results in TML solutions that might become obsolete when the process generating the data is affected by concept drift (e.g., due to periodicity or seasonality effects, faults or malfunctions affecting sensors or actuators, or changes in the users' behavior), a common situation in real-world application scenarios. For the first time in the literature, this paper introduces a Tiny Machine Learning for Concept Drift (TML-CD) solution based on deep learning feature extractors and a k-nearest neighbors classifier integrating a hybrid adaptation module able to deal with concept drift affecting the data-generating process. This adaptation module continuously updates (in a passive way) the knowledge base of TML-CD and, at the same time, employs a Change Detection Test to inspect for changes (in an active way) and quickly adapt to concept drift by removing the obsolete knowledge. Experimental results on both image and audio benchmarks show the effectiveness of the proposed solution, while the porting of TML-CD to three off-the-shelf microcontroller units shows the feasibility of the proposed solution in real-world pervasive systems.
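
The hybrid adaptation described in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the class name `DriftAwareKNN`, its parameters, the CUSUM-style statistic standing in for the Change Detection Test (the abstract does not say which test is used), and the choice to keep only the most recent samples after a detection. Feature vectors are assumed to come from an upstream feature extractor that is not shown.

```python
import math
from collections import Counter, deque


class DriftAwareKNN:
    """Hypothetical sketch: k-NN over pre-extracted features with hybrid adaptation."""

    def __init__(self, k=3, threshold=4.0, slack=0.25, recent=5):
        self.k = k
        self.threshold = threshold  # alarm level of the CUSUM-style test (assumed)
        self.slack = slack          # error rate tolerated before accumulating (assumed)
        self.knowledge = []         # knowledge base: (feature_vector, label) pairs
        self.recent = deque(maxlen=recent)  # newest samples, kept after a detection
        self.cusum = 0.0

    def predict(self, x):
        """Majority vote among the k nearest stored samples (None if empty)."""
        if not self.knowledge:
            return None
        nearest = sorted(self.knowledge, key=lambda s: math.dist(s[0], x))[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]

    def update(self, x, y):
        """Process one supervised sample; return True if a change was detected."""
        predicted = self.predict(x)
        error = 1.0 if predicted is not None and predicted != y else 0.0
        # Passive adaptation: always add the new sample to the knowledge base.
        self.knowledge.append((x, y))
        self.recent.append((x, y))
        # Active adaptation: accumulate errors and raise an alarm on a sustained rise.
        self.cusum = max(0.0, self.cusum + error - self.slack)
        if self.cusum > self.threshold:
            # Discard obsolete knowledge, keeping only the most recent samples.
            self.knowledge = list(self.recent)
            self.cusum = 0.0
            return True
        return False


# Toy stream: the labels of the two clusters swap halfway through.
clf = DriftAwareKNN()
concept_a = [((0.0, 0.0), 0), ((1.0, 1.0), 1)] * 10
concept_b = [((0.0, 0.0), 1), ((1.0, 1.0), 0)] * 10
detections = [clf.update(x, y) for x, y in concept_a + concept_b]
```

This sketch stores every sample, so its memory footprint grows without bound; a real microcontroller deployment would need a bounded knowledge base, which is not modeled here.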

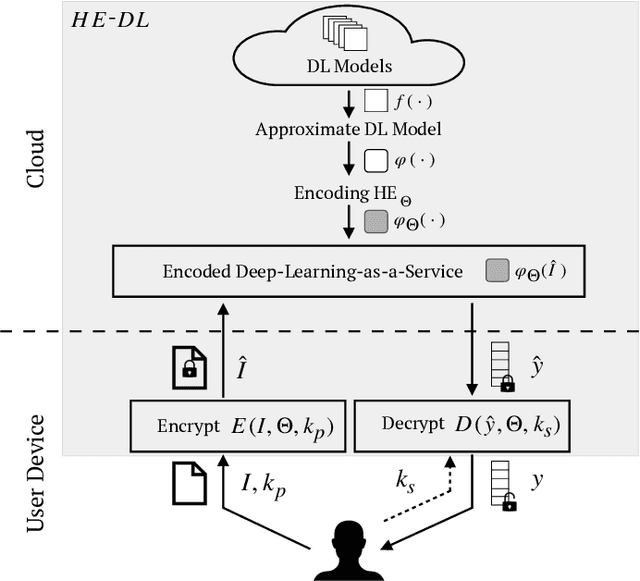

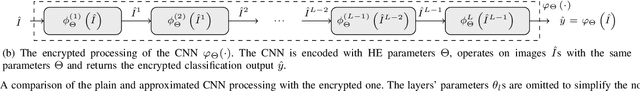

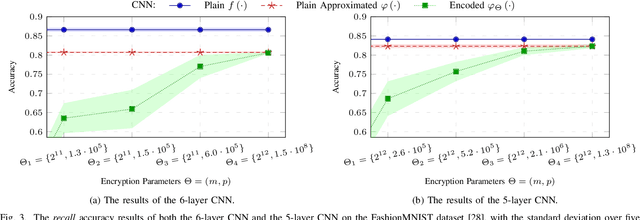

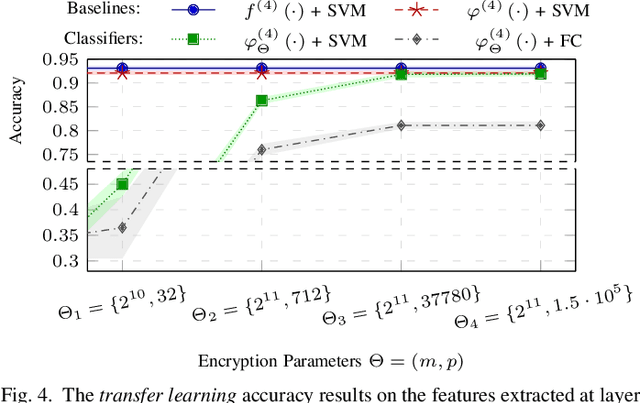

A Privacy-Preserving Distributed Architecture for Deep-Learning-as-a-Service

Mar 30, 2020

Abstract: Deep-learning-as-a-service is a novel and promising computing paradigm aiming at providing machine/deep learning solutions and mechanisms through Cloud-based computing infrastructures. Thanks to its ability to remotely execute and train deep learning models (which typically require high computational loads and memory occupation), such an approach guarantees high performance, scalability, and availability. Unfortunately, such an approach requires sending the information to be processed (e.g., signals, images, positions, sounds, videos) to the Cloud, with potentially catastrophic impacts on the privacy of users. This paper introduces a novel distributed architecture for deep-learning-as-a-service that is able to protect users' sensitive data while providing Cloud-based machine and deep learning services. The proposed architecture, which relies on Homomorphic Encryption, a technique that allows operations to be performed on encrypted data, has been tailored for Convolutional Neural Networks (CNNs) in the domain of image analysis and implemented through a client-server REST-based approach. Experimental results show the effectiveness of the proposed architecture.
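
To make "operations on encrypted data" concrete, the following is a toy sketch of an additively homomorphic scheme (textbook Paillier). It is an illustration only: the abstract does not name the scheme used in the paper, the primes are far too small to be secure, and all function names are hypothetical. The example computes a weighted sum, the basic operation of a linear or convolutional layer, on encrypted inputs with plaintext non-negative integer weights.

```python
import math
import random


def keygen(p=1789, q=1861):
    """Toy Paillier key pair; p and q are tiny primes chosen for illustration."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt an integer 0 <= m < n with fresh randomness."""
    while True:
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n * n) * pow(r, n, n * n)) % (n * n)


def decrypt(n, private_key, c):
    lam, mu = private_key
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def encrypted_weighted_sum(n, ciphertexts, weights):
    """Server side: compute Enc(sum(w_i * x_i)) without seeing any x_i.

    Multiplying ciphertexts adds the plaintexts; raising a ciphertext to a
    plaintext power w multiplies its plaintext by w.
    """
    acc = encrypt(n, 0)
    for c, w in zip(ciphertexts, weights):
        acc = (acc * pow(c, w, n * n)) % (n * n)
    return acc


# Client encrypts its inputs, the server combines them, the client decrypts.
n, private_key = keygen()
inputs = [3, 1, 4]
weights = [2, 5, 1]
encrypted_inputs = [encrypt(n, x) for x in inputs]
encrypted_result = encrypted_weighted_sum(n, encrypted_inputs, weights)
result = decrypt(n, private_key, encrypted_result)  # 3*2 + 1*5 + 4*1 = 15
```

An additively homomorphic scheme like this one cannot multiply two ciphertexts together, so it covers only the linear parts of a network; the sketch makes no claim about how the paper handles nonlinear layers.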

Distributed Deep Convolutional Neural Networks for the Internet-of-Things

Aug 02, 2019

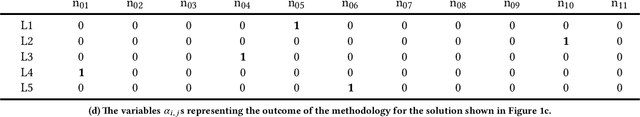

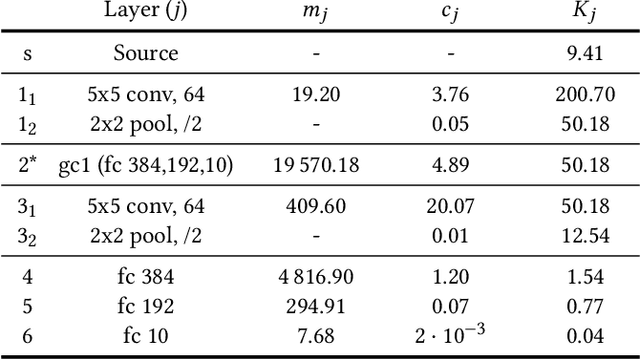

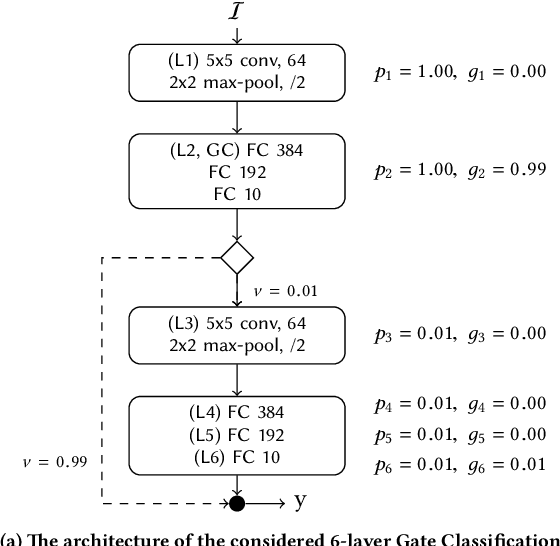

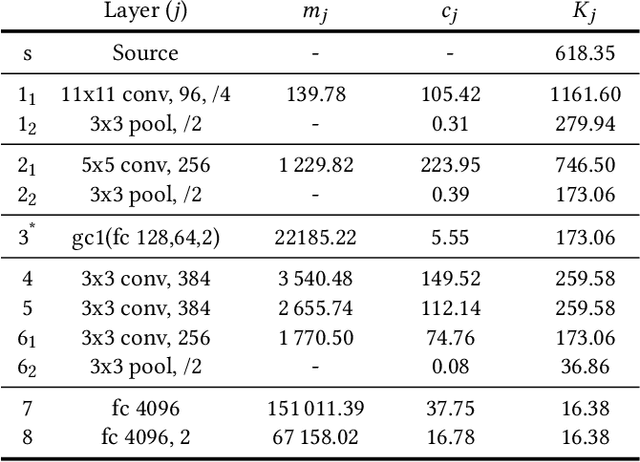

Abstract: Due to the high demand in computation and memory, deep learning solutions are mostly restricted to high-performance computing units, e.g., those present in servers, Cloud, and computing centers. In pervasive systems, e.g., those involving Internet-of-Things (IoT) technological solutions, this would require transmitting the acquired data from the IoT sensors to the computing platform and waiting for its output. This solution might become infeasible when remote connectivity is either unavailable or limited in bandwidth. Moreover, it introduces uncertainty in the latency from data production to decision making, which, in turn, might impair control loop stability if the response is used to drive IoT actuators. In order to support a real-time recall phase directly at the IoT level, deep learning solutions must be completely rethought with the constraints on memory and computation characterizing IoT units in mind. In this paper, we focus on Convolutional Neural Networks (CNNs), a specific deep learning solution for image and video classification, and introduce a methodology aiming at distributing their computation onto the units of the IoT system. We formalize such a methodology as an optimization problem where the latency between the data-gathering phase and the subsequent decision-making one is minimized. The methodology supports multiple IoT sources of data as well as multiple CNNs in execution on the same IoT system, making it a general-purpose distributed computing platform for CNN-based applications demanding autonomy, low decision-latency, and high Quality-of-Service.
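
The idea of placing CNN layers on IoT units so as to minimize latency can be sketched as a small search problem. The cost model below is a hypothetical simplification, not the formulation from the paper: each layer has a compute cost, a memory footprint, and an output size; each unit has a speed and a memory capacity; and a fixed-bandwidth transfer is paid whenever consecutive layers sit on different units. The exhaustive search is only practical for very small instances.

```python
from itertools import product


def placement_latency(placement, layers, units, bandwidth):
    """Latency of one layer-to-unit assignment, or None if memory is exceeded.

    layers: list of (operations, memory, output_size) per CNN layer
    units:  list of (speed, memory_capacity) per IoT unit
    """
    used = [0.0] * len(units)
    latency = 0.0
    for i, unit in enumerate(placement):
        operations, memory, output_size = layers[i]
        speed, capacity = units[unit]
        used[unit] += memory
        if used[unit] > capacity:
            return None  # this unit cannot hold all layers assigned to it
        latency += operations / speed
        # Pay a transfer cost when the next layer runs on a different unit.
        if i + 1 < len(placement) and placement[i + 1] != unit:
            latency += output_size / bandwidth
    return latency


def best_placement(layers, units, bandwidth):
    """Exhaustively search all assignments; return (latency, placement) or None."""
    best = None
    for placement in product(range(len(units)), repeat=len(layers)):
        latency = placement_latency(placement, layers, units, bandwidth)
        if latency is not None and (best is None or latency < best[0]):
            best = (latency, placement)
    return best


# Three layers, two units: a slow unit with little memory and a faster one.
layers = [(4.0, 2, 8.0), (6.0, 3, 2.0), (2.0, 1, 1.0)]
units = [(1.0, 3), (2.0, 4)]
best = best_placement(layers, units, bandwidth=4.0)
# best == (10.0, (0, 1, 1)): layer 0 on unit 0, layers 1 and 2 on unit 1
```

The sketch ignores several aspects the abstract mentions, such as multiple data sources and multiple CNNs sharing the same system.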
