Simon Narduzzi

Adaptation of MobileNetV2 for Face Detection on Ultra-Low Power Platform

Aug 23, 2022

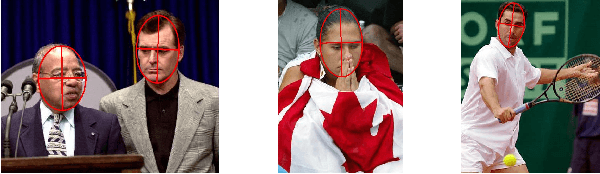

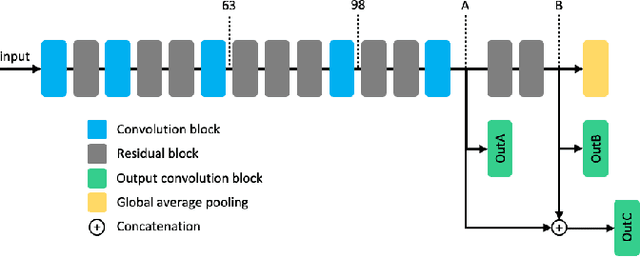

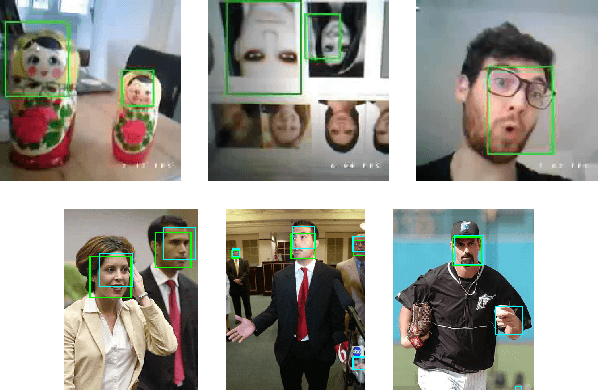

Abstract: Designing Deep Neural Networks (DNNs) that run on edge hardware remains a challenge. The community has adopted standard designs to facilitate the deployment of neural network models. However, little emphasis is placed on adapting the network topology to fit hardware constraints. In this paper, we adapt MobileNetV2, one of the most widely used architectures for mobile hardware platforms, and study the impact of changing its topology and applying post-training quantization. We discuss the impact of these adaptations and the deployment of the model on an embedded hardware platform for face detection.

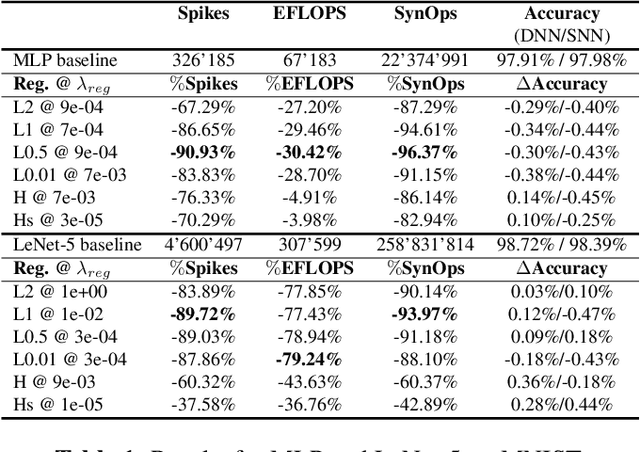

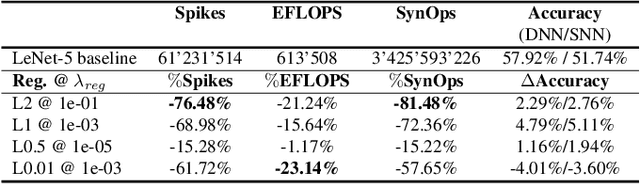

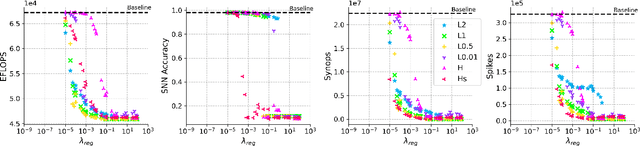

Optimizing the Consumption of Spiking Neural Networks with Activity Regularization

Apr 04, 2022

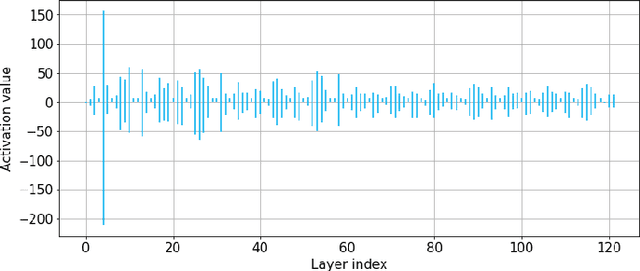

Abstract: Reducing energy consumption is critical for neural network models running on edge devices. In this regard, reducing the number of multiply-accumulate (MAC) operations of Deep Neural Networks (DNNs) running on edge hardware accelerators reduces the energy consumed during inference. Spiking Neural Networks (SNNs) are an example of bio-inspired techniques that can save further energy by using binary activations and by consuming no energy when not spiking. The networks can be configured for equivalent accuracy on a task through DNN-to-SNN conversion frameworks, but the conversion is based on rate coding, so the number of synaptic operations can be high. In this work, we look into different techniques to enforce sparsity on the neural network activation maps and compare the effect of different training regularizers on the efficiency of the optimized DNNs and SNNs.
