Simon Eyre

Recurrence over Video Frames for the Re-identification of Meerkats

Jun 18, 2024

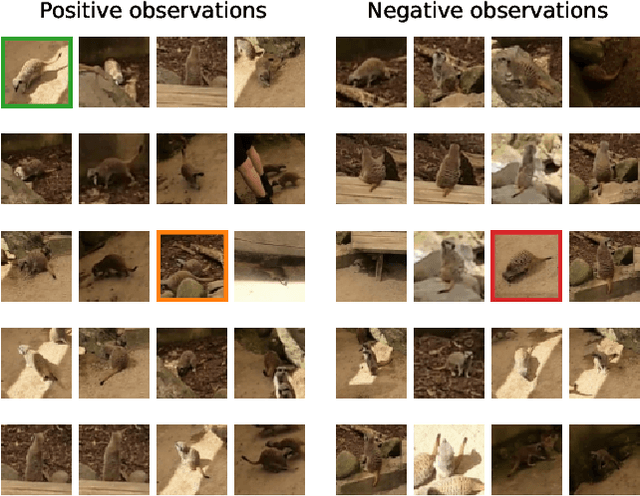

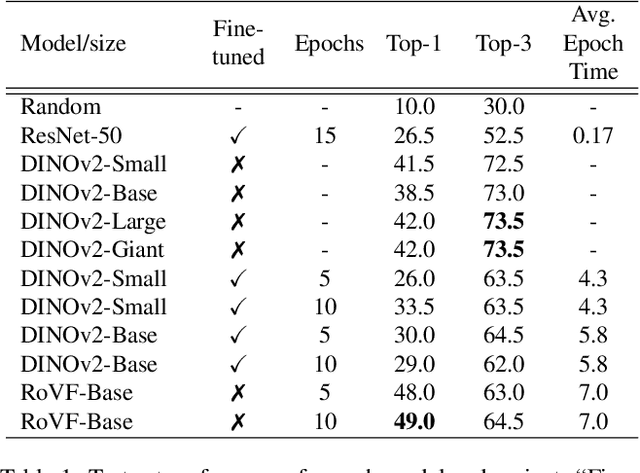

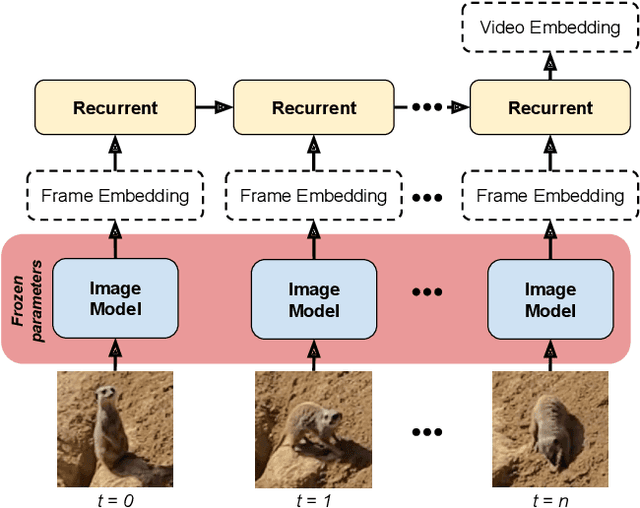

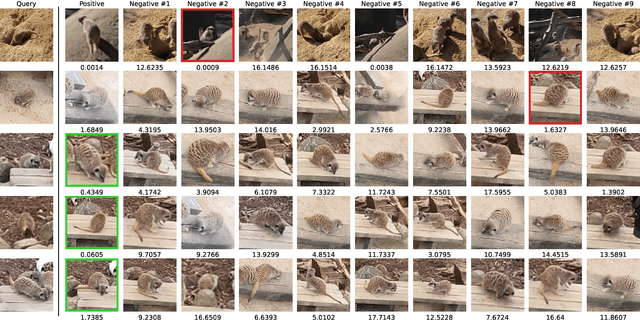

Abstract: Deep learning approaches for animal re-identification have had a major impact on conservation, significantly reducing the time required for many downstream tasks, such as well-being monitoring. We propose a method called Recurrence over Video Frames (RoVF), which uses a recurrent head based on the Perceiver architecture to iteratively construct an embedding from a video clip. Because individual IDs are unavailable, RoVF is trained with a triplet loss based on the co-occurrence of individuals in the video frames. We evaluated this method, alongside several models based on the DINOv2 transformer architecture, on a dataset of meerkats collected at the Wellington Zoo. Our method achieves a top-1 re-identification accuracy of $49\%$, exceeding that of the best DINOv2 model ($42\%$). We found that the model can match observations of individuals where humans cannot, and that RoVF outperforms the comparison models with minimal fine-tuning. In future work, we plan to improve these models using pretext tasks, apply them to animal behaviour classification, and run a hyperparameter search to optimise them further.
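The abstract names two technical pieces: a triplet loss whose triplets come from co-occurrence rather than ID labels, and top-1 re-identification accuracy. The sketch below illustrates both under stated assumptions, and it is not the authors' implementation. It assumes clips are grouped by a hypothetical tracker-assigned `track_id`: two clips from the same track are treated as the same individual (positive), and two tracks seen together in the same frame must be different individuals (negative). All function names and the `margin` value are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]
Triplet = Tuple[Vector, Vector, Vector]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 0.2) -> float:
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def cooccurrence_triplets(clips: Dict[str, List[Vector]],
                          co_occurring: List[Tuple[str, str]]) -> List[Triplet]:
    """Build triplets without identity labels (hypothetical scheme).

    clips        : hypothetical track_id -> clip embeddings from that track
    co_occurring : pairs of track_ids seen in the same frame, which are
                   therefore assumed to be different individuals
    """
    triplets: List[Triplet] = []
    for t1, t2 in co_occurring:
        # Use each track of the pair as the anchor once.
        for anchor_track, neg_track in ((t1, t2), (t2, t1)):
            same = clips[anchor_track]
            if len(same) >= 2 and clips[neg_track]:
                triplets.append((same[0], same[1], clips[neg_track][0]))
    return triplets


def top1_accuracy(queries: List[Tuple[str, Vector]],
                  gallery: List[Tuple[str, Vector]]) -> float:
    """Fraction of queries whose nearest gallery embedding has the same label."""
    correct = 0
    for label, q in queries:
        nearest_label, _ = min(gallery, key=lambda item: euclidean(q, item[1]))
        correct += int(nearest_label == label)
    return correct / len(queries)
```

Tracks that never co-occur are not used as negatives here, because without IDs they might be the same animal seen at different times. That conservative choice is itself an assumption of this sketch.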

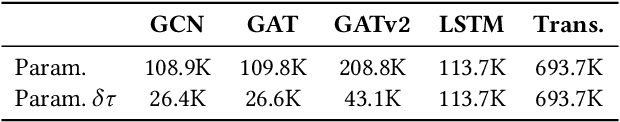

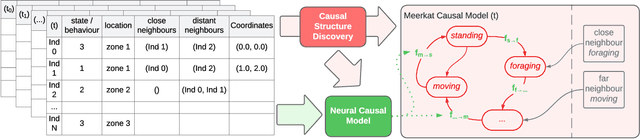

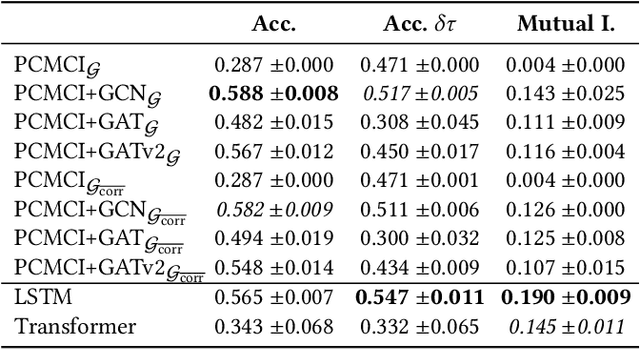

Behaviour Modelling of Social Animals via Causal Structure Discovery and Graph Neural Networks

Dec 21, 2023

Abstract: Better understanding the natural world is a crucial task with a wide range of applications. In environments where humans and animals are in close proximity, such as zoos, it is essential to understand the causes of animal behaviour and which interventions are responsible for changes in it. This can help to predict unusual behaviours, mitigate detrimental effects and increase the well-being of animals. There has been work on modelling the dynamics of swarms of birds and insects, but the complex social behaviours of mammalian groups remain less explored. In this work, we propose a method to build behavioural models using causal structure discovery and graph neural networks for time series. We apply this method to a mob of meerkats in a zoo environment and study its ability to predict future actions and to model the behaviour distribution at both the individual and the group level. We show that our method can match or outperform standard deep learning architectures and generate more realistic data, while using fewer parameters and providing increased interpretability.
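To make the combination of a discovered causal graph and a graph neural network more concrete, the sketch below shows one generic round of message passing restricted to causal edges, rolled out over time. This is an illustration of the general idea, not the paper's model: the `parents` lists stand in for the output of some causal structure discovery step, and the scalar weights `w_self` and `w_msg` are hypothetical placeholders for what would normally be learned weight matrices.

```python
import math
from typing import List

State = List[List[float]]  # one feature vector per individual


def message_passing_step(states: State, parents: List[List[int]],
                         w_self: float = 0.5, w_msg: float = 0.5) -> State:
    """One mean-aggregation message-passing update over causal edges.

    states[i]  : feature vector of individual i at time t
    parents[i] : indices j whose state at time t is assumed to cause
                 individual i's state at time t + 1 (hypothetical graph)
    """
    next_states: State = []
    for i, x_i in enumerate(states):
        if parents[i]:
            # Average the parents' features, one dimension at a time.
            msg = [sum(states[j][k] for j in parents[i]) / len(parents[i])
                   for k in range(len(x_i))]
        else:
            msg = [0.0] * len(x_i)
        # Combine the individual's own state with its parents' message.
        next_states.append([math.tanh(w_self * a + w_msg * m)
                            for a, m in zip(x_i, msg)])
    return next_states


def rollout(states: State, parents: List[List[int]], steps: int) -> List[State]:
    """Autoregressively predict `steps` future states, keeping the initial one."""
    trajectory = [states]
    for _ in range(steps):
        states = message_passing_step(states, parents)
        trajectory.append(states)
    return trajectory
```

Because each individual only receives messages from its causal parents, the edge list can be read directly as "who influences whom". This is one way a sparse graph can use fewer parameters than a dense model and still remain interpretable.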

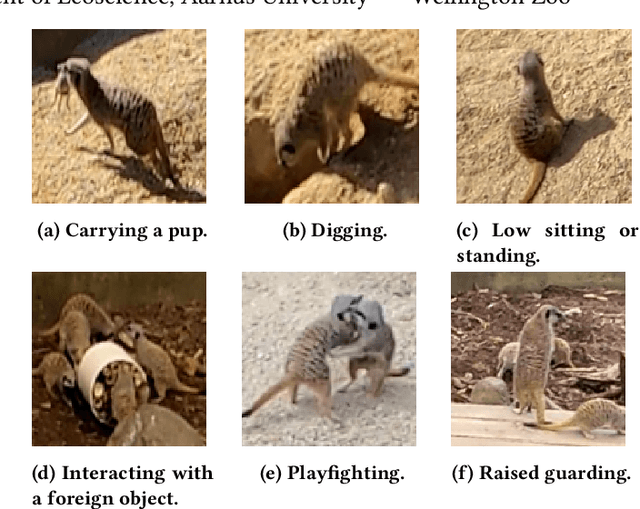

Meerkat Behaviour Recognition Dataset

Jun 20, 2023

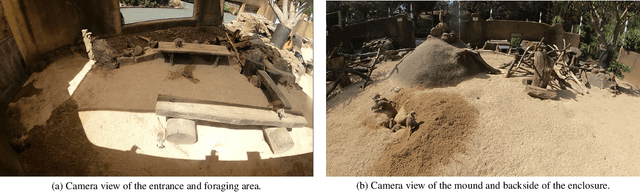

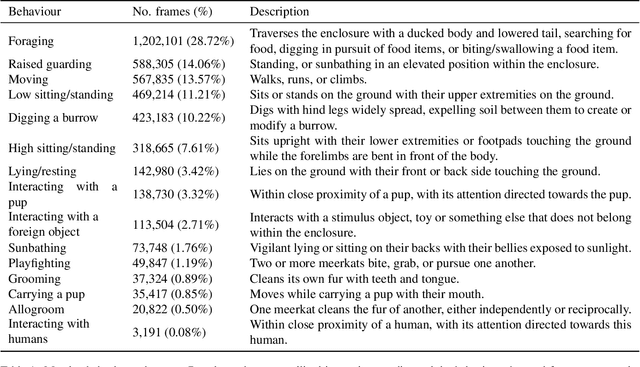

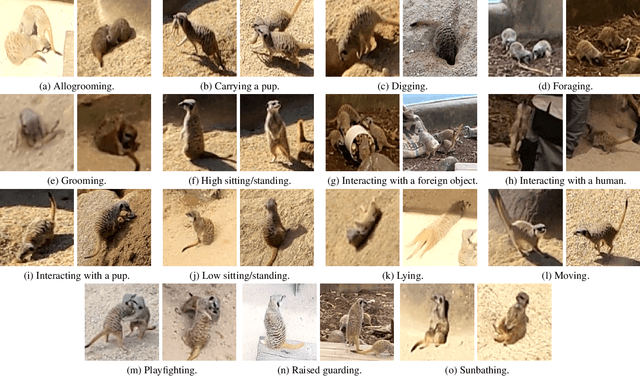

Abstract: Recording animal behaviour is an important step in evaluating the well-being of animals and further understanding the natural world. Current methods for documenting animal behaviour within a zoo setting, such as scan sampling, require excessive human effort, are unfit for around-the-clock monitoring, and may produce human-biased results. Several animal datasets already exist that focus predominantly on wildlife interactions, with some extending to action or behaviour recognition. However, there is limited data from zoo settings or focusing on the group behaviours of social animals. We introduce a large meerkat (*Suricata suricatta*) behaviour recognition video dataset with diverse annotated behaviours, including group social interactions, tracking of individuals within the camera view, a skewed class distribution, and varying illumination conditions. This dataset includes videos from two positions within the meerkat enclosure at the Wellington Zoo (Wellington, New Zealand), comprising 20 annotated videos (848,400 annotated frames) and 15 unannotated videos.
