Sijuan Liu

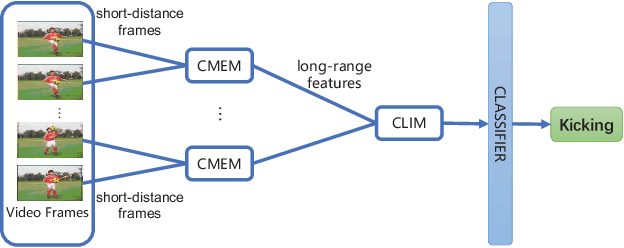

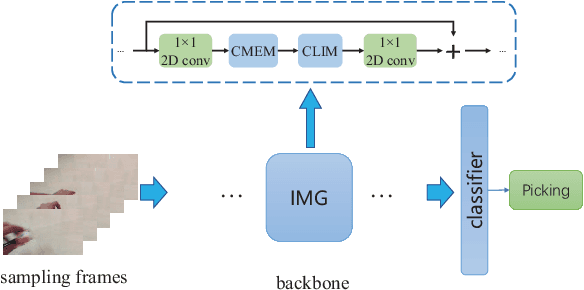

Behavior Recognition Based on the Integration of Multigranular Motion Features

Mar 07, 2022

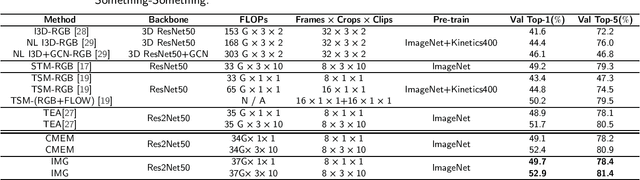

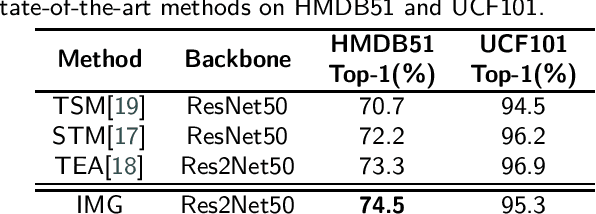

Abstract: Recognizing behaviors in videos usually requires jointly analyzing the spatial information about objects and their dynamic action information in the temporal dimension. Behavior recognition may rely even more heavily on modeling temporal information, which contains both short-range and long-range motions; this contrasts with image-based computer vision tasks, which focus on understanding spatial information. However, current solutions fail to jointly and comprehensively analyze short-range motion between adjacent frames and long-range temporal aggregation at large scales in videos. In this paper, we propose a novel behavior recognition method based on the integration of multigranular (IMG) motion features. In particular, we achieve reliable motion information modeling through the synergy of a channel attention-based short-term motion feature enhancement module (CMEM) and a cascaded long-term motion feature integration module (CLIM). We evaluate our model on several action recognition benchmarks, including HMDB51, Something-Something and UCF101. The experimental results demonstrate that our approach outperforms the previous state-of-the-art methods, which confirms its effectiveness and efficiency.
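The abstract does not give the internals of CMEM or CLIM, so the following is only a minimal NumPy sketch of the two general ideas it names: a channel gate driven by adjacent-frame differences (short-range motion) and a cascade of small temporal windows whose receptive field grows with each stage (long-range aggregation). The function names, the sigmoid gate, the residual form, and the window size of 3 are all assumptions made for illustration, not the paper's design.

```python
import numpy as np


def short_term_motion_attention(feats):
    """Illustrative only (not the paper's CMEM).

    feats: array of shape (T, C, H, W), one feature map per frame.
    Returns feats enhanced by a channel gate computed from the
    differences between adjacent frames.
    """
    # Differences between adjacent frames, shape (T-1, C, H, W).
    diff = feats[1:] - feats[:-1]
    # Pad the last time step with zeros so the output keeps T steps.
    diff = np.concatenate([diff, np.zeros_like(feats[:1])], axis=0)
    # Spatial average of the absolute difference gives one score
    # per (frame, channel), shape (T, C).
    pooled = np.abs(diff).mean(axis=(2, 3))
    # Sigmoid gate in (0, 1); channels with more motion get larger weights.
    gate = 1.0 / (1.0 + np.exp(-pooled))
    # Residual enhancement: keep the original features and add the gated copy.
    return feats + feats * gate[:, :, None, None]


def cascaded_long_term_aggregation(feats, stages=2):
    """Illustrative only (not the paper's CLIM).

    Applies a window-3 temporal average `stages` times in sequence.
    Each stage widens the temporal receptive field by two frames.
    """
    out = feats
    for _ in range(stages):
        # Replicate the first and last frames so the length stays T.
        padded = np.concatenate([out[:1], out, out[-1:]], axis=0)
        out = (padded[:-2] + padded[1:-1] + padded[2:]) / 3.0
    return out


if __name__ == "__main__":
    # Hypothetical clip: 8 frames, 16 channels, 7x7 feature maps.
    clip = np.random.default_rng(0).normal(size=(8, 16, 7, 7))
    enhanced = short_term_motion_attention(clip)
    fused = cascaded_long_term_aggregation(enhanced, stages=2)
    print(enhanced.shape, fused.shape)
```

In this sketch a static clip produces zero frame differences, so the gate sits at 0.5 for every channel and no channel is favored; only channels whose activations change between frames are emphasized.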
