Siddiqui Muhammad Yasir

Deep Neural Networks for Accurate Depth Estimation with Latent Space Features

Feb 17, 2025

Abstract: Depth estimation plays a pivotal role in advancing human-robot interaction, especially in indoor environments where accurate 3D scene reconstruction is essential for tasks like navigation and object handling. Monocular depth estimation, which relies on a single RGB camera, offers a more affordable solution than traditional methods that use stereo cameras or LiDAR. However, despite recent progress, many monocular approaches struggle to define depth boundaries accurately, leading to less precise reconstructions. In response to these challenges, this study introduces a novel depth estimation framework that leverages latent space features within a deep convolutional neural network to enhance the precision of monocular depth maps. The proposed model features a dual encoder-decoder architecture, enabling both color-to-depth and depth-to-depth transformations. This structure allows for refined depth estimation through latent space encoding. To further improve the accuracy of depth boundaries and local features, a new loss function is introduced. This function combines latent loss with gradient loss, helping the model maintain the integrity of depth boundaries. The framework is thoroughly tested on the NYU Depth V2 dataset, where it sets a new benchmark, particularly excelling in complex indoor scenarios. The results show that this approach effectively reduces depth ambiguities and blurring, making it a promising solution for applications in human-robot interaction and 3D scene reconstruction.
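The abstract says only that the loss combines a latent term with a gradient term and does not give the formulas. The sketch below is one plausible reading, not the paper's definition: it assumes the latent term is a mean squared error between two latent vectors and the gradient term is a mean absolute error between finite-difference depth gradients. The function names and the weights `w_latent` and `w_grad` are hypothetical.

```python
from typing import Sequence

Grid = Sequence[Sequence[float]]


def gradient_loss(pred: Grid, target: Grid) -> float:
    """Mean absolute difference between horizontal and vertical
    finite-difference gradients of two depth maps (assumed form)."""
    h, w = len(pred), len(pred[0])
    total, count = 0.0, 0
    for r in range(h):
        for c in range(w):
            if c + 1 < w:  # horizontal gradient
                dp = pred[r][c + 1] - pred[r][c]
                dt = target[r][c + 1] - target[r][c]
                total += abs(dp - dt)
                count += 1
            if r + 1 < h:  # vertical gradient
                dp = pred[r + 1][c] - pred[r][c]
                dt = target[r + 1][c] - target[r][c]
                total += abs(dp - dt)
                count += 1
    return total / count if count else 0.0


def latent_loss(z_pred: Sequence[float], z_target: Sequence[float]) -> float:
    """Mean squared error between two latent vectors (assumed form)."""
    return sum((a - b) ** 2 for a, b in zip(z_pred, z_target)) / len(z_pred)


def combined_loss(pred: Grid, target: Grid,
                  z_pred: Sequence[float], z_target: Sequence[float],
                  w_latent: float = 1.0, w_grad: float = 1.0) -> float:
    """Weighted sum of the two terms; the weights are illustrative."""
    return (w_latent * latent_loss(z_pred, z_target)
            + w_grad * gradient_loss(pred, target))
```

Because the gradient term compares differences between neighboring pixels rather than absolute depths, it penalizes blurred edges even when the average depth is close to correct.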

Lightweight Deepfake Detection Based on Multi-Feature Fusion

Feb 17, 2025

Abstract: Deepfake technology uses deep learning (DL) based face manipulation techniques to seamlessly replace faces in videos, creating highly realistic but artificially generated content. Although this technology has beneficial applications in media and entertainment, its misuse may lead to serious risks, including identity theft, cyberbullying, and false information. The integration of DL with visual cognition has resulted in important technological improvements, particularly in addressing privacy risks caused by artificially generated deepfake images on digital media platforms. In this study, we propose an efficient and lightweight method for detecting deepfake images and videos, making it suitable for devices with limited computational resources. To reduce the computational burden usually associated with DL models, our method combines machine learning classifiers with keyframing approaches and texture analysis. Moreover, features extracted with histogram of oriented gradients (HOG), local binary pattern (LBP), and KAZE were fused and evaluated using random forest, extreme gradient boosting, extra trees, and support vector classifier algorithms. Our findings show that a feature-level fusion of HOG, LBP, and KAZE features improves accuracy to 92% and 96% on FaceForensics++ and Celeb-DFv2, respectively.
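Feature-level fusion usually means joining the per-descriptor feature vectors into one vector before classification. The sketch below illustrates that general idea under stated assumptions: the L2 normalization step and the function names are hypothetical, and the abstract does not say how the authors scale or combine the HOG, LBP, and KAZE vectors.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length; all-zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_features(descriptors: Sequence[Sequence[float]]) -> List[float]:
    """Feature-level fusion: normalize each descriptor vector, then
    concatenate them into a single vector for a downstream classifier."""
    fused: List[float] = []
    for vec in descriptors:
        fused.extend(l2_normalize(vec))
    return fused
```

In use, the list passed to `fuse_features` would hold one vector per descriptor for a given keyframe, and the fused vector would be the input to a classifier such as a random forest.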

3D Instance Segmentation Using Deep Learning on RGB-D Indoor Data

Jun 19, 2024

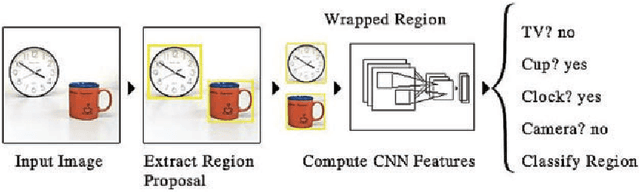

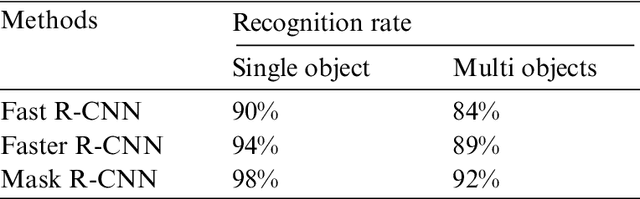

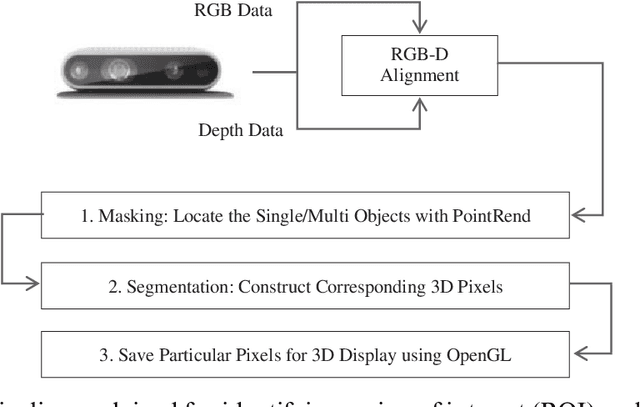

Abstract: 3D object recognition is a challenging task for intelligent and robotic systems in industrial and home indoor environments. It is critical for such systems to recognize and segment the 3D object instances that they encounter frequently. The task has received considerable attention in the computer vision, graphics, and machine learning fields. Traditionally, 3D segmentation was done with hand-crafted features and designed approaches that did not achieve acceptable performance and could not be generalized to large-scale data. Following their great success in 2D computer vision, deep learning approaches have recently become the preferred method for 3D segmentation challenges. However, the task of instance segmentation remains less explored. In this paper, we propose a novel approach for efficient 3D instance segmentation using red, green, blue, and depth (RGB-D) data based on deep learning. The 2D region-based convolutional neural network (Mask R-CNN) deep learning model with a point-based rendering module is adapted to integrate depth information in order to recognize and segment 3D instances of objects. To generate 3D point cloud coordinates (x, y, z), segmented 2D pixels (u, v) of recognized object regions in the RGB image are merged with the corresponding (u, v) points of the depth image. Moreover, we conducted experiments and analysis to evaluate our proposed method from various points of view and distances. The experiments show that the proposed 3D object recognition and instance segmentation are sufficiently effective to support object handling in robotic and intelligent systems.
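The step from segmented (u, v) pixels to (x, y, z) points can be sketched with the standard pinhole camera model. This is an illustration, not the paper's code: it assumes the depth image is aligned with the RGB image, and the intrinsics `fx`, `fy`, `cx`, `cy` and the function name are assumptions.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def backproject_pixels(depth: Sequence[Sequence[float]],
                       pixels: Sequence[Tuple[int, int]],
                       fx: float, fy: float,
                       cx: float, cy: float) -> List[Point3D]:
    """Convert segmented (u, v) pixels to camera-frame (x, y, z) points
    using the pinhole model. `depth` is indexed as depth[v][u]; pixels
    with non-positive depth are treated as missing and skipped."""
    points: List[Point3D] = []
    for u, v in pixels:
        z = depth[v][u]
        if z <= 0:
            continue  # no valid depth reading at this pixel
        x = (u - cx) * z / fx
        y = (v - cy) * z / fy
        points.append((x, y, z))
    return points
```

Here `pixels` would be the coordinates inside one predicted instance mask, so each mask yields its own point cloud.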

Media Forensics and Deepfake Systematic Survey

Jun 19, 2024

Abstract: Deepfake is a generative deep learning algorithm that creates or changes facial features in a very realistic way, making it hard to differentiate real features from fake ones. It can be used to make movies look better as well as to spread false information by imitating famous people. In this paper, many different ways to make a Deepfake are explained, analyzed, and categorized. Using Deepfake datasets, models are trained and tested for reliability through experiments. Deepfakes are a type of facial manipulation that allows people to change entire faces, identities, attributes, and expressions. The trends in the available Deepfake datasets are also discussed, with a focus on how they have changed. Using deep learning, a general Deepfake detection model is built. Moreover, the problems in making and detecting Deepfakes are also discussed. As a result of this survey, it is expected that the development of new Deepfake-based imaging tools will speed up in the future. This survey gives an in-depth review of methods for manipulating face images and of various techniques for spotting altered face images. Four types of facial manipulation are specifically discussed: attribute manipulation, expression swap, entire face synthesis, and identity swap. For every manipulation category, we provide information on manipulation techniques, significant benchmarks for the technical evaluation of counterfeit detection techniques, available public databases, and a summary of the outcomes of all such analyses. Across the topics in the survey, we focus on the most recent developments in Deepfake, showing both the advances and the obstacles in detecting fake images.

Faster Metallic Surface Defect Detection Using Deep Learning with Channel Shuffling

Jun 19, 2024

Abstract: Deep learning has been constantly improving in recent years, and a significant number of researchers have devoted themselves to research on defect detection algorithms. Detection and recognition of small and complex targets is still an open problem. This research presents an improved defect detection model for detecting small and complex defect targets on steel surfaces. During steel strip production, mechanical forces and environmental factors cause surface defects on the steel strip. Therefore, the detection of such defects is key to the production of high-quality products. Moreover, surface defects on the steel strip cause great economic losses to the high-tech industry. So far, few studies have explored methods of identifying these defects, and most of the currently available algorithms are not sufficiently effective. Therefore, this study presents an improved real-time metallic surface defect detection model based on You Only Look Once (YOLOv5), specially designed for small networks. For the smaller features of the target, the conventional part is replaced with a depth-wise convolution and channel shuffle mechanism. Then, assigning weights to the Feature Pyramid Network (FPN) output features and fusing them increases feature propagation and the network's characterization ability. The experimental results reveal that the improved model outperforms other comparable models in terms of accuracy and detection time. The mean average precision (mAP) achieved by the proposed model is 77.5% on the Northeastern University dataset (NEU-DET) and 70.18% on the GC10-DET dataset.
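The abstract does not say which channel shuffle variant is used. As a hedged illustration, the sketch below shows the ShuffleNet-style shuffle, which interleaves channels from different groups so that information can mix across groups after a grouped or depth-wise convolution. The function name and the use of a plain list to stand in for the channel axis are assumptions.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: Sequence[T], groups: int) -> List[T]:
    """ShuffleNet-style channel shuffle on a flat channel axis.

    Equivalent to reshaping the channels to (groups, per_group),
    transposing to (per_group, groups), and flattening again."""
    n = len(channels)
    if groups <= 0 or n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]
```

With six channels in two groups, the order `[0, 1, 2, 3, 4, 5]` becomes `[0, 3, 1, 4, 2, 5]`, so each consecutive pair now contains one channel from each original group.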

Modeling & Evaluating the Performance of Convolutional Neural Networks for Classifying Steel Surface Defects

Jun 19, 2024

Abstract: Recently, convolutional neural networks (CNNs) have achieved outstanding identification rates in image classification tasks. To exploit this capability, selected CNNs were trained on a dataset of well-known images of metal surface defects captured with an RGB camera. Defects must be detected early so that timely corrective action can be taken in production. Until now, image classification has relied on a model-based method that compares the predicted reflection characteristics of surface defects with those of flaw-free surfaces. The problem of detecting steel surface defects has grown in importance because of the vast range of steel applications in end-product sectors such as automobiles, households, and construction. Manual detection processes are time-consuming, labor-intensive, and expensive. Different strategies have been used to automate manual processes, but CNN models have proven more effective than image processing and other machine learning techniques. By fine-tuning different CNN models, their performance can be easily compared and the best-performing model selected for similar tasks. However, fine-tuning different CNN models can be computationally expensive and time-consuming. Therefore, our study helps future researchers choose a CNN without having to weigh model complexity, performance, and computational resources themselves. In this article, the performance of various CNN models with transfer learning techniques is evaluated. These models were chosen based on their popularity and impact in the field of computer vision research, as well as their performance on benchmark datasets. According to the results, DenseNet201 outperformed the other CNN models and had the highest detection rate on the NEU dataset, at 98.37 percent.

Empirical Evaluation of Integrated Trust Mechanism to Improve Trust in E-commerce Services

Jun 19, 2024

Abstract: There are two main approaches to trust management worldwide: "strong and crisp" and "soft and social". We analyze the impact of an integrated trust mechanism in three different e-commerce services. Trust is a dormant element between potential users and the expert or internet systems being developed. We support our integration by conducting an experiment in a controlled laboratory environment. The model selected for the experiment is a composite of policy-based and reputation-based trust mechanisms and is widely acknowledged in the e-commerce industry. The integration between the policy and trust mechanisms was accomplished through a mapping process in which the weakness of one is offset by the strength of the other. Furthermore, an experiment was conducted to validate the effectiveness of the implementation by separating the integrated and traditional trust mechanisms in a learning system.

Deep Learning-Based 3D Instance and Semantic Segmentation: A Review

Jun 19, 2024

Abstract: The process of segmenting point cloud data into several homogeneous areas, with points in the same region having the same attributes, is known as 3D segmentation. Segmentation is challenging with point cloud data due to substantial redundancy, fluctuating sample density, and lack of apparent organization. The research area has a wide range of robotics applications, including intelligent vehicles, autonomous mapping, and navigation. A number of researchers have introduced various methodologies and algorithms. Deep learning has been successfully applied to a spectrum of 2D vision domains as a prevailing AI method. However, due to the specific problems of processing point clouds with deep neural networks, deep learning on point clouds is still in its early stages. This study examines many strategies that have been proposed for 3D instance and semantic segmentation and gives a complete assessment of current developments in deep learning-based 3D segmentation. The benefits, drawbacks, and design mechanisms of these approaches are studied and addressed. This study evaluates how competitive various segmentation algorithms are on several publicly accessible datasets, and covers the most commonly used pipelines, their advantages and limitations, insightful findings, and intriguing future research directions.
