Siddhant Sahu

Blind Deblurring using Deep Learning: A Survey

Jul 23, 2019

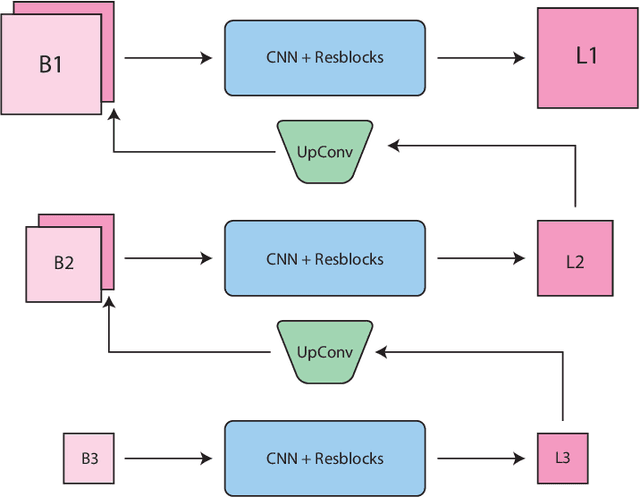

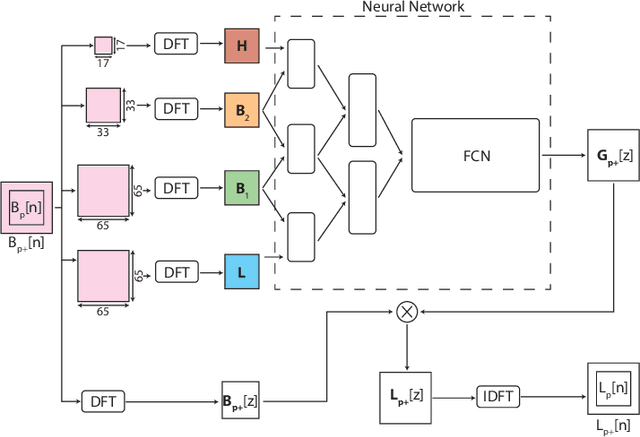

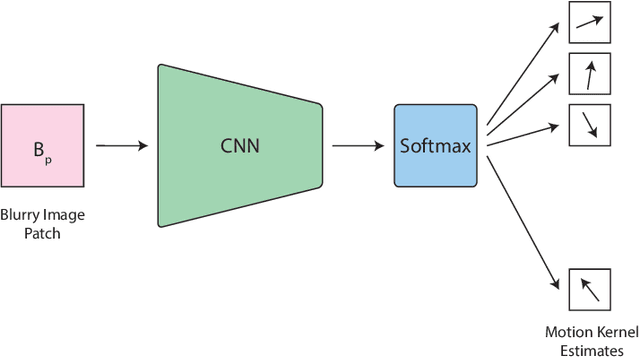

Abstract: We inspect all the deep learning based solutions to blind deblurring and provide a holistic understanding of the various architectures that have evolved over the past few years. Early work used deep learning to estimate some features of the blur kernel; later work moved on to predicting the blur kernel entirely, which converts the problem into non-blind deblurring. Recent state-of-the-art techniques are end to end, i.e., they do not estimate the blur kernel but instead try to estimate the latent sharp image directly from the blurred image. Benchmark PSNR and SSIM values on the standard GOPRO and Kohler datasets are also provided for the various architectures.
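For readers unfamiliar with the PSNR metric mentioned in the abstract, the following is a minimal sketch of the standard definition, PSNR = 10 log10(MAX^2 / MSE), written in plain Python. The function name `psnr`, the flat-list image representation, and the default peak value of 255 are assumptions made for illustration; this is not the survey's evaluation code.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are given as flat sequences of pixel intensities
    (an illustrative assumption, not the survey's data format).
    """
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("images must be non-empty and of equal size")

    # Mean squared error between the sharp reference and the deblurred estimate.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)

    # Identical images have zero error, so PSNR is unbounded.
    if mse == 0:
        return math.inf

    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher value indicates that the deblurred estimate is closer to the sharp reference; identical images give an infinite PSNR.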

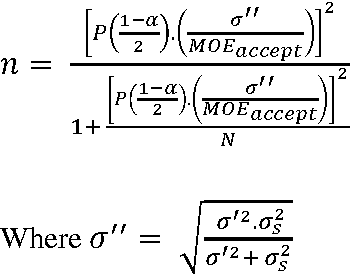

Bayesian Sample Size Determination of Vibration Signals in Machine Learning Approach to Fault Diagnosis of Roller Bearings

Feb 25, 2014

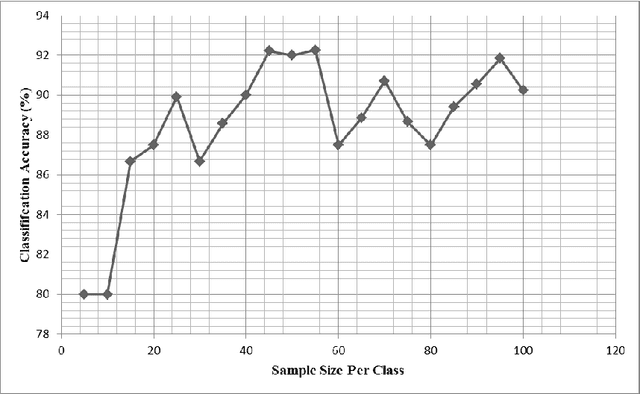

Abstract: Sample size determination for a data set is an important statistical process for analyzing the data to an optimum level of accuracy with minimum computational work. This process is applicable in every domain that deals with large data sets and heavy computation. This study uses Bayesian analysis to determine the minimum sample size of vibration signals to be considered for fault diagnosis of a bearing, using pre-defined parameters such as the inverse standard probability and the acceptable margin of error. An analytical formula for sample size determination is thus introduced. The fault diagnosis of the bearing is done with a machine learning approach based on the entropy-based J48 algorithm. The proposed method will help researchers involved in fault diagnosis to determine the minimum sample size of data needed for good statistical stability and precision.

* 14 pages, 1 table, 6 figures
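
The abstract above does not reproduce the paper's Bayesian formula. As a rough point of comparison only, here is a sketch of the classical (non-Bayesian) minimum sample size for estimating a mean, n = ceil((z * sigma / E)^2), which also depends on an inverse standard normal value and an acceptable margin of error. The function name `min_sample_size` and its parameters are hypothetical, and this is a swapped-in textbook formula, not the method introduced in the paper.

```python
import math
from statistics import NormalDist


def min_sample_size(sigma, margin_of_error, confidence=0.95):
    """Classical minimum sample size for estimating a mean.

    sigma: assumed (known) standard deviation of the signal feature.
    margin_of_error: acceptable half-width E of the confidence interval.
    confidence: two-sided confidence level, strictly between 0 and 1.
    """
    if sigma <= 0 or margin_of_error <= 0:
        raise ValueError("sigma and margin_of_error must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")

    # Inverse standard normal CDF at the upper tail point for a two-sided interval.
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)

    # Round up so the margin of error is not exceeded.
    return math.ceil((z * sigma / margin_of_error) ** 2)
```

For example, `min_sample_size(1.0, 0.1)` returns 385 at the default 95% confidence level; tightening the margin of error or raising the confidence level increases the required number of samples.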
