Shiwei Zeng

Attribute-Efficient PAC Learning of Sparse Halfspaces with Constant Malicious Noise Rate

May 27, 2025

Abstract: Attribute-efficient learning of sparse halfspaces has been a fundamental problem in machine learning theory. In recent years, machine learning algorithms have increasingly faced data corruption and even adversarial attacks, so it is of central interest to design efficient algorithms that are robust to noise. In this paper, we consider data corrupted by malicious noise at a constant rate, and the goal is to learn an underlying $s$-sparse halfspace $w^* \in \mathbb{R}^d$ with $\text{poly}(s,\log d)$ samples. Specifically, we follow a recent line of work and assume that the underlying distribution satisfies both a concentration condition and a margin condition. Under these conditions, we show that attribute efficiency can be achieved by simple variants of existing hinge loss minimization programs. Our key contributions are: 1) an attribute-efficient PAC learning algorithm that works under a constant malicious noise rate; and 2) a new gradient analysis that carefully handles the sparsity constraint in hinge loss minimization.
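To make "hinge loss minimization with a sparsity constraint" concrete, here is a minimal Python sketch under stated assumptions. It uses clean (noise-free) Gaussian data, the scaled hinge loss $\max(0, 1 - y\langle w, x\rangle/\tau)$, and projected subgradient descent that keeps every iterate $s$-sparse (via hard thresholding) and inside the unit ball. Hard thresholding is a stand-in chosen for brevity. It is an assumption, not necessarily the constraint used in the paper. The sketch also has no outlier-handling step, so on its own it does not tolerate malicious noise. The function names and the defaults for `tau`, `lr` and `iters` are hypothetical.

```python
import math
import random

def hard_threshold(w, s):
    """Keep the s largest-magnitude entries of w and zero out the rest."""
    keep = sorted(range(len(w)), key=lambda i: abs(w[i]), reverse=True)[:s]
    out = [0.0] * len(w)
    for i in keep:
        out[i] = w[i]
    return out

def project_to_unit_ball(w):
    """Rescale w onto the Euclidean unit ball if its norm exceeds 1."""
    norm = math.sqrt(sum(v * v for v in w))
    return w if norm <= 1.0 else [v / norm for v in w]

def sparse_hinge_descent(samples, s, tau=0.1, lr=0.05, iters=100):
    """Projected subgradient descent on the average scaled hinge loss
    max(0, 1 - y<w,x>/tau). Hard thresholding to s entries is an illustrative
    stand-in for the sparsity constraint (assumption, not the paper's program)."""
    d = len(samples[0][0])
    n = len(samples)
    w = [0.0] * d
    for _ in range(iters):
        grad = [0.0] * d
        for x, y in samples:
            margin = y * sum(wi * xi for wi, xi in zip(w, x)) / tau
            if margin < 1.0:  # hinge is active, so its subgradient is -y x / tau
                for i in range(d):
                    grad[i] -= y * x[i] / tau
        w = [wi - lr * gi / n for wi, gi in zip(w, grad)]
        w = project_to_unit_ball(hard_threshold(w, s))
    return w
```

For instance, on samples in $\mathbb{R}^{10}$ labeled by a halfspace that depends on only the first two coordinates, `sparse_hinge_descent(samples, s=2)` should return a vector supported on exactly those two coordinates.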

Attribute-Efficient PAC Learning of Low-Degree Polynomial Threshold Functions with Nasty Noise

Jun 01, 2023

Abstract: The concept class of low-degree polynomial threshold functions (PTFs) plays a fundamental role in machine learning. In this paper, we study PAC learning of $K$-sparse degree-$d$ PTFs on $\mathbb{R}^n$, where any such concept depends only on $K$ out of $n$ attributes of the input. Our main contribution is a new algorithm that runs in time $({nd}/{\epsilon})^{O(d)}$ and, under the Gaussian marginal distribution, PAC learns the class up to error rate $\epsilon$ with $O(\frac{K^{4d}}{\epsilon^{2d}} \cdot \log^{5d} n)$ samples, even when an $\eta \leq O(\epsilon^d)$ fraction of them are corrupted by the nasty noise of Bshouty et al. (2002), possibly the strongest corruption model. Prior to this work, attribute-efficient robust algorithms had been established only for the special case of sparse homogeneous halfspaces. Our key ingredients are: 1) a structural result that translates attribute sparsity into a sparsity pattern of the Chow vector under the basis of Hermite polynomials; and 2) a novel attribute-efficient robust Chow vector estimation algorithm that uses exclusively a restricted Frobenius norm either to certify a good approximation or to validate a sparsity-induced degree-$2d$ polynomial as a filter for detecting corrupted samples.
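A standard fact helps explain why attribute sparsity shows up as a sparsity pattern in the Hermite (Chow) coefficients. Suppose $x \sim \mathcal{N}(0, I)$ and $f$ depends only on the coordinates in a set $S$. Then for any multi-index $\alpha$ with $\alpha_j > 0$ for some $j \notin S$, we have $\mathbb{E}[f(x) H_\alpha(x)] = \mathbb{E}[f(x) H_{\alpha_S}(x_S)] \cdot \mathbb{E}[H_{\alpha_{S^c}}(x_{S^c})] = 0$. The sketch below checks this empirically for degree at most 2, using the orthonormal features $x_i$, $(x_i^2 - 1)/\sqrt{2}$ and $x_i x_j$. It is only an illustration of the idea. The paper's structural result may be stated differently, and the names `hermite_features` and `empirical_chow` are hypothetical.

```python
import itertools
import math
import random

def hermite_features(x):
    """Orthonormal Hermite features of degree <= 2 under N(0, I).
    Keys: (i,) -> x_i, (i, i) -> (x_i^2 - 1)/sqrt(2), (i, j) with i < j -> x_i x_j."""
    feats = {}
    for i, xi in enumerate(x):
        feats[(i,)] = xi
        feats[(i, i)] = (xi * xi - 1.0) / math.sqrt(2.0)
    for i, j in itertools.combinations(range(len(x)), 2):
        feats[(i, j)] = x[i] * x[j]
    return feats

def empirical_chow(f, samples):
    """Estimate the Chow coefficient E[f(x) H(x)] for every feature H above."""
    totals = {}
    for x in samples:
        fx = f(x)
        for key, h in hermite_features(x).items():
            totals[key] = totals.get(key, 0.0) + fx * h
    return {key: v / len(samples) for key, v in totals.items()}
```

With the 2-sparse degree-2 PTF $f(x) = \mathrm{sign}(x_1 x_2)$ in $\mathbb{R}^5$, the coefficient on $x_1 x_2$ equals $\mathbb{E}|x_1 x_2| = 2/\pi$. Every coefficient that involves one of the other three coordinates is zero in expectation, so its empirical estimate shrinks with the sample size.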

List-Decodable Sparse Mean Estimation

May 28, 2022

Abstract: Robust mean estimation is one of the most important problems in statistics: given a set of samples $\{x_1, \dots, x_n\} \subset \mathbb{R}^d$ where an $\alpha$ fraction are drawn from some distribution $D$ and the rest are adversarially corrupted, the goal is to estimate the mean of $D$. A surge of recent research has focused on the list-decodable setting, where $\alpha \in (0, \frac12]$ and the goal is to output a finite list of estimates, at least one of which approximates the target mean. In this paper, we consider the case where the underlying distribution is Gaussian and the target mean is $k$-sparse. Our main contribution is the first polynomial-time algorithm with sample complexity $O\big(\mathrm{poly}(k, \log d)\big)$, i.e., poly-logarithmic in the dimension. One of the main algorithmic ingredients is the use of low-degree sparse polynomials to filter outliers, which may be of independent interest.
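The phrase "low-degree sparse polynomials to filter outliers" can be illustrated with a toy filter. The sketch below works in a deliberately easier regime than the paper: inliers are the majority, they are drawn from $\mathcal{N}(\mu, I)$, and no list is produced. For every unit vector $v$ with at most two nonzero entries, it evaluates the degree-2 sparse polynomial $p_v(x) = (v^\top(x - \hat\mu))^2$, whose mean on inliers is about 1. If some $p_v$ has an inflated empirical mean, the samples with large $p_v$ are dropped. The slack $0.5$, the cutoff $9.0$, the coordinate-wise median as $\hat\mu$, and all function names are hypothetical choices for illustration, not the paper's algorithm.

```python
import itertools
import math
import random
import statistics

def coordinatewise_median(samples):
    """Robust centre estimate: the median of each coordinate."""
    d = len(samples[0])
    return [statistics.median(x[i] for x in samples) for i in range(d)]

def sparse_directions(d):
    """Unit vectors with at most two nonzero entries, as (coords, weights) pairs."""
    r = 1.0 / math.sqrt(2.0)
    for i in range(d):
        yield (i,), (1.0,)
    for i, j in itertools.combinations(range(d), 2):
        yield (i, j), (r, r)
        yield (i, j), (r, -r)

def sparse_quadratic(x, mu, coords, weights):
    """Degree-2 sparse polynomial (v . (x - mu))^2 for a 2-sparse unit vector v."""
    proj = sum(w * (x[c] - mu[c]) for c, w in zip(coords, weights))
    return proj * proj

def sparse_filter(samples, slack=0.5, cutoff=9.0, max_rounds=20):
    """Toy filter (hypothetical parameters): while some sparse quadratic has
    empirical mean above 1 + slack, drop samples where it exceeds cutoff."""
    kept = list(samples)
    for _ in range(max_rounds):
        mu = coordinatewise_median(kept)
        worst, worst_mean = None, 1.0 + slack
        for coords, weights in sparse_directions(len(mu)):
            m = statistics.fmean(sparse_quadratic(x, mu, coords, weights) for x in kept)
            if m > worst_mean:
                worst, worst_mean = (coords, weights), m
        if worst is None:  # no sparse quadratic flags the remaining samples
            break
        kept = [x for x in kept if sparse_quadratic(x, mu, *worst) <= cutoff]
    return kept
```

As an example, take 900 standard Gaussian inliers in $\mathbb{R}^{10}$ and 100 outliers whose fourth coordinate is pinned at 6. The quadratic on that single coordinate has the most inflated mean, so the first round should remove the outliers while keeping almost all inliers.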

Semi-verified Learning from the Crowd with Pairwise Comparisons

Jun 13, 2021

Abstract: We study the problem of *crowdsourced PAC learning* of Boolean-valued functions through enriched queries, a problem that has attracted a surge of recent research interest. In particular, we consider a learner that may query the crowd to obtain either a label of a given instance or a comparison tag of a pair of instances. This is a challenging problem, and only recently have budget-efficient algorithms been established for the scenario where the majority of the crowd is correct. In this work, we investigate the significantly more challenging case where the majority is incorrect, which renders learning impossible in general. We show that under the semi-verified model of Charikar et al. (2017), where we have (limited) access to a trusted oracle that always returns the correct annotation, it is possible to learn the underlying function while the labeling cost is significantly mitigated by the enriched and more easily obtained queries.

Learning Halfspaces with Pairwise Comparisons: Breaking the Barriers of Query Complexity via Crowd Wisdom

Nov 02, 2020

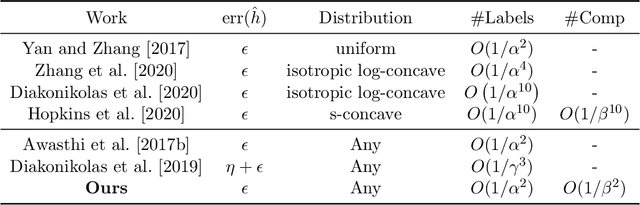

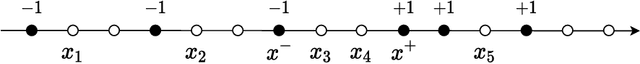

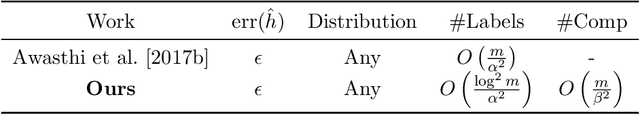

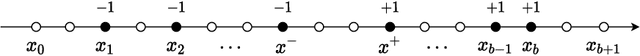

Abstract: In this paper, we study the problem of efficiently learning halfspaces under Massart noise. This is a challenging noise model, and only very recently has significant progress been made for problems with sufficient labeled samples. We consider the more practical yet less explored setting where the learner has limited access to labeled samples but also has access to pairwise comparisons. For the first time, we show that even when both labels and comparisons are corrupted by Massart noise, there is a polynomial-time algorithm that provably learns the underlying halfspace with near-optimal query complexity and noise tolerance, under the distribution-independent setting. In addition, we present a precise tradeoff between label complexity and comparison complexity, showing that the former is an order of magnitude lower than the latter, which makes our algorithm especially suitable for label-demanding problems. Our central idea is to appeal to the wisdom of the crowd: for each instance (respectively, each pair of instances), we query the noisy label (respectively, the noisy comparison) a number of times to alleviate the noise. We show that with a novel design of a filtering process, together with a powerful boosting framework, the total query complexity is only a constant factor more than that of learning from experts.
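The repetition step ("query the noisy label a number of times") can be quantified with Hoeffding's inequality. This assumes that each repeated query is answered independently and is flipped with probability at most $\eta < 1/2$, as under Massart noise with rate bound $\eta$. Under that assumption, a majority vote over $m$ answers is wrong with probability at most $\exp(-2m(1/2 - \eta)^2)$. The sketch below computes the smallest odd $m$ that drives this bound below $\delta$ and simulates the vote. It covers only the repetition idea, not the paper's filtering or boosting components. The function names are hypothetical.

```python
import math
import random

def repetitions_needed(eta, delta):
    """Smallest odd m with exp(-2 m (1/2 - eta)^2) <= delta, i.e. the Hoeffding
    bound on a wrong majority when each answer is flipped w.p. at most eta < 1/2."""
    if not 0.0 <= eta < 0.5:
        raise ValueError("need 0 <= eta < 1/2")
    gap = 0.5 - eta
    m = math.ceil(math.log(1.0 / delta) / (2.0 * gap * gap))
    return m if m % 2 == 1 else m + 1  # an odd m rules out ties

def majority_of_noisy_queries(true_label, eta, m, rng):
    """Simulate m independent noisy answers (each flipped w.p. eta) and return the majority."""
    votes = sum(true_label if rng.random() >= eta else -true_label for _ in range(m))
    return 1 if votes > 0 else -1
```

For example, `repetitions_needed(0.4, 0.01)` returns 231. For fixed $\eta$ and $\delta$ the count does not depend on the dimension, which matches the abstract's remark that repetition costs only a constant factor.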
