Learning Halfspaces with Pairwise Comparisons: Breaking the Barriers of Query Complexity via Crowd Wisdom

Nov 02, 2020

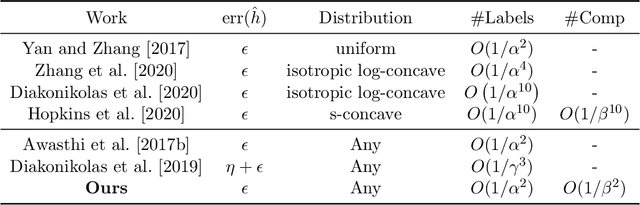

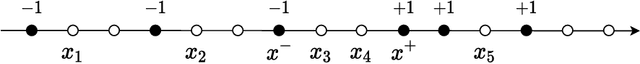

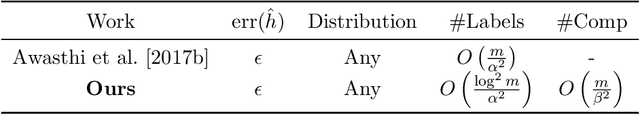

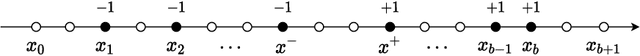

In this paper, we study the problem of efficiently learning halfspaces under Massart noise. This is a challenging noise model, and significant progress has been made only very recently, for the setting in which sufficient labeled samples are available. We consider the more practical yet less explored setting in which the learner has limited access to labeled samples but additionally has access to pairwise comparisons. For the first time, we show that even when both labels and comparisons are corrupted by Massart noise, there is a polynomial-time algorithm that provably learns the underlying halfspace with near-optimal query complexity and noise tolerance, in the distribution-independent setting. In addition, we present a precise tradeoff between label complexity and comparison complexity, showing that the former is an order of magnitude lower than the latter, which makes our algorithm especially suitable for problems where labels are costly to obtain. Our central idea is to appeal to the wisdom of the crowd: for each instance (respectively, each pair of instances), we query the noisy label (respectively, the noisy comparison) multiple times to alleviate the noise. We show that with a novel design of a filtering process, together with a powerful boosting framework, the total query complexity is only a constant factor larger than that of learning from experts.
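The repeated-query ("wisdom of the crowd") step can be illustrated with a minimal Python sketch. It assumes that repeated queries are answered independently and, for simplicity, uses a constant flip probability `eta < 1/2`, which is the simplest special case of Massart noise (where the flip probability `eta(x)` may vary per instance but never exceeds `eta`). The oracle names and parameters below are hypothetical, and the paper's filtering process and boosting framework are not reproduced here.

```python
import math
import random
from typing import Callable, Sequence


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def sign(v: float) -> int:
    # Convention (assumption): ties map to +1.
    return 1 if v >= 0 else -1


def make_label_oracle(w, eta, rng):
    # Hypothetical label oracle: true label sign(w.x), flipped independently
    # with probability eta (constant eta is a special case of Massart noise).
    def query(x):
        y = sign(dot(w, x))
        return -y if rng.random() < eta else y
    return query


def make_comparison_oracle(w, eta, rng):
    # Hypothetical comparison oracle: true answer sign(w.x1 - w.x2),
    # flipped independently with probability eta.
    def query(x1, x2):
        z = sign(dot(w, x1) - dot(w, x2))
        return -z if rng.random() < eta else z
    return query


def majority_vote(query: Callable[[], int], k: int) -> int:
    # Repeat a +/-1 query k times (k odd, so the sum is never 0)
    # and return the majority answer.
    if k % 2 == 0:
        raise ValueError("k must be odd")
    return sign(sum(query() for _ in range(k)))


def majority_error(eta: float, k: int) -> float:
    # Exact probability that the majority of k independent answers is wrong
    # when each answer is wrong with probability eta.
    return sum(math.comb(k, j) * eta**j * (1 - eta) ** (k - j)
               for j in range(k // 2 + 1, k + 1))


if __name__ == "__main__":
    rng = random.Random(0)
    w = [1.0, -2.0, 0.5]
    points = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(2000)]
    pairs = list(zip(points[::2], points[1::2]))
    label = make_label_oracle(w, eta=0.3, rng=rng)
    compare = make_comparison_oracle(w, eta=0.3, rng=rng)
    for k in (1, 5, 15):
        label_err = sum(majority_vote(lambda: label(x), k) != sign(dot(w, x))
                        for x in points) / len(points)
        cmp_err = sum(majority_vote(lambda: compare(a, b), k)
                      != sign(dot(w, a) - dot(w, b))
                      for a, b in pairs) / len(pairs)
        # Empirical label error, empirical comparison error, exact prediction.
        print(k, round(label_err, 3), round(cmp_err, 3),
              round(majority_error(0.3, k), 3))
```

Because each answer is wrong with probability at most `eta < 1/2`, Hoeffding's inequality bounds the majority's error by `exp(-2k(1/2 - eta)^2)`, so a modest number of repetitions per instance already drives the error down. This only illustrates why repetition helps; the constant-factor bound on total query complexity relies on the paper's filtering and boosting analysis.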