Shivam Arora

Building Better Deception Probes Using Targeted Instruction Pairs

Feb 01, 2026

Abstract: Linear probes are a promising approach for monitoring AI systems for deceptive behaviour. Previous work has shown that a linear classifier trained on a contrastive instruction pair and a simple dataset can achieve good performance. However, these probes exhibit notable failures even in straightforward scenarios, including spurious correlations and false positives on non-deceptive responses. In this paper, we identify the importance of the instruction pair used during training. Furthermore, we show that targeting specific deceptive behaviors through a human-interpretable taxonomy of deception leads to improved results on evaluation datasets. Our findings reveal that instruction pairs capture deceptive intent rather than content-specific patterns, explaining why prompt choice dominates probe performance (70.6% of variance). Given the heterogeneity of deception types across datasets, we conclude that organizations should design specialized probes targeting their specific threat models rather than seeking a universal deception detector.

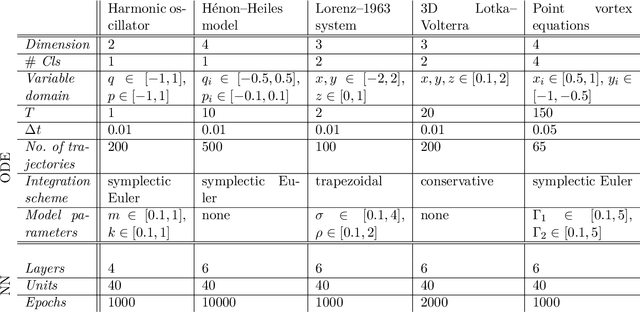

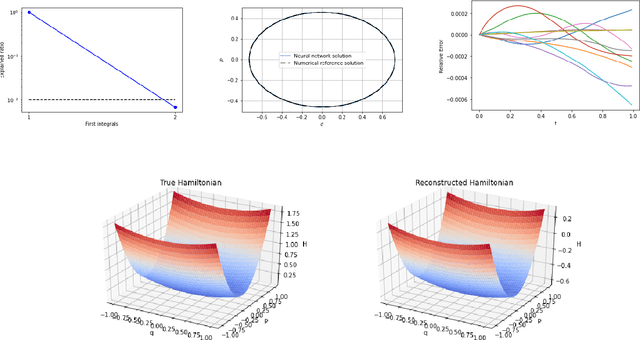

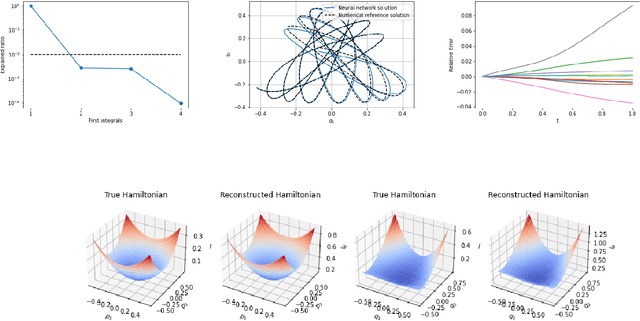

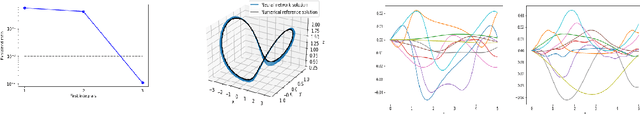

Model-free machine learning of conservation laws from data

Jan 12, 2023

Abstract: We present a machine-learning-based method for learning first integrals of systems of ordinary differential equations from given trajectory data. The method is model-free in that it does not require explicit knowledge of the underlying system of differential equations that generated the trajectories. As a by-product, once the first integrals have been learned, the system of differential equations will also be known. We illustrate our method by considering several classical problems from the mathematical sciences.
