Shilei Fu

Reinforcement Learning for SAR View Angle Inversion with Differentiable SAR Renderer

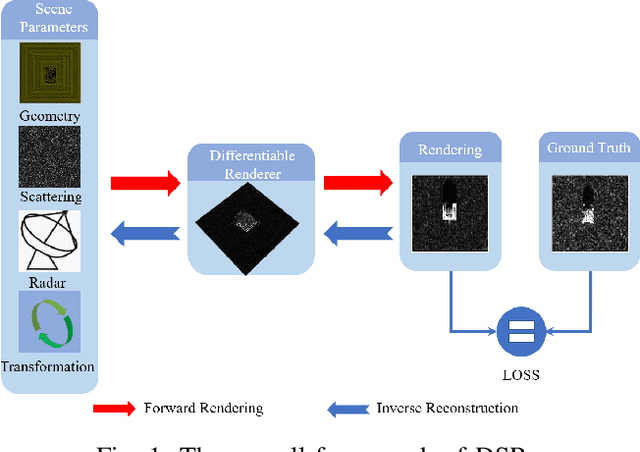

Jan 02, 2024

Abstract: The electromagnetic inverse problem has long been a research hotspot. This study aims to invert radar view angles from synthetic aperture radar (SAR) images given a target model. However, the scarcity of SAR data, combined with intricate background interference and imaging mechanisms, limits the applicability of existing learning-based approaches. To address these challenges, we propose an interactive deep reinforcement learning (DRL) framework in which an electromagnetic simulator, the differentiable SAR renderer (DSR), is embedded to facilitate interaction between the agent and the environment, simulating a human-like process of angle prediction. Specifically, DSR generates SAR images at arbitrary view angles in real time. The sequential and semantic differences between the images corresponding to the view angles are used to construct the DRL state space, which effectively suppresses complex background interference, enhances sensitivity to temporal variations, and improves the ability to capture fine-grained information. Additionally, to maintain the stability and convergence of the method, several reward mechanisms, such as memory difference, smoothing, and boundary penalty, are combined to form the final reward function. Extensive experiments on both simulated and real datasets demonstrate the effectiveness and robustness of the proposed method. In the cross-domain setting, the proposed method greatly mitigates the inconsistency between simulated and real domains and significantly outperforms reference methods.
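The abstract names three reward mechanisms (memory difference, smoothing, and boundary penalty) but does not give their formulas. The following is a minimal sketch of how such terms might be combined for a single scalar view angle. The function name `shaped_reward`, the weights, and the exact form of each term are illustrative assumptions, not the paper's definitions.

```python
def shaped_reward(prev_error, curr_error, prev_action, action,
                  angle, angle_min, angle_max,
                  w_smooth=0.1, w_bound=1.0):
    """Hypothetical shaped reward for one angle-adjustment step.

    All terms and weights are assumptions made for illustration.
    prev_error / curr_error: mismatch between the rendered and the target
    image before and after the step (any non-negative scalar metric).
    """
    # Memory difference (assumed form): reward the reduction in mismatch
    # relative to the previous step.
    memory_term = prev_error - curr_error
    # Smoothing (assumed form): penalise abrupt changes between
    # consecutive actions.
    smooth_term = -w_smooth * abs(action - prev_action)
    # Boundary penalty (assumed form): penalise the distance by which the
    # predicted angle leaves the allowed range [angle_min, angle_max].
    overshoot = max(angle_min - angle, angle - angle_max, 0.0)
    boundary_term = -w_bound * overshoot
    return memory_term + smooth_term + boundary_term


if __name__ == "__main__":
    # Mismatch halves, the action is unchanged, the angle stays in range.
    print(shaped_reward(1.0, 0.5, 0.0, 0.0, 10.0, 0.0, 90.0))
```

In this sketch, a step that reduces the mismatch without changing the action or leaving the range is rewarded by exactly the reduction in mismatch; the other two terms only ever subtract from it.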

Differentiable SAR Renderer and SAR Target Reconstruction

May 14, 2022

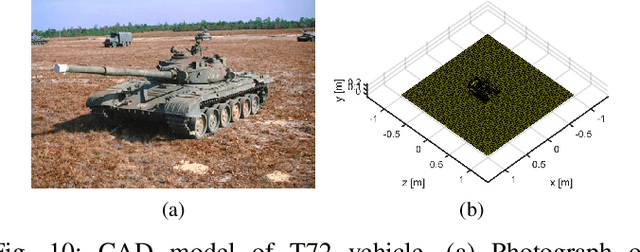

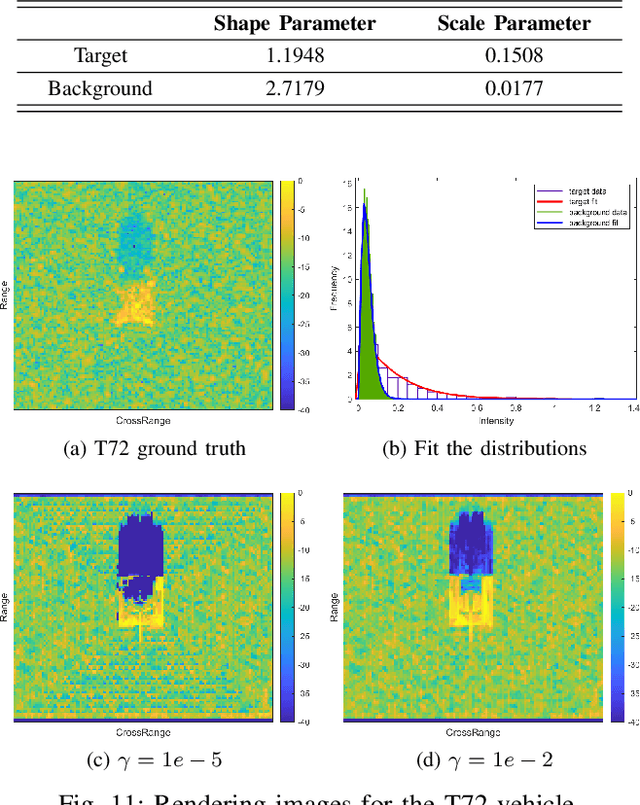

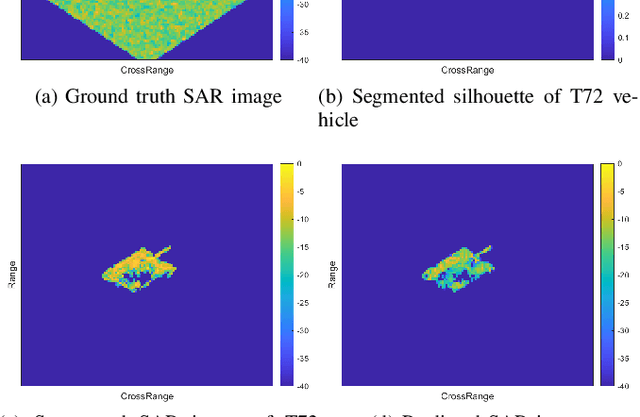

Abstract: Forward modeling of wave scattering and radar imaging mechanisms is the key to information extraction from synthetic aperture radar (SAR) images. As with inverse graphics in the optical domain, an inherently integrated forward-inverse approach would be promising for advanced SAR information retrieval and target reconstruction. This paper presents such an attempt at inverse graphics for SAR imagery. A differentiable SAR renderer (DSR) is developed, which reformulates the mapping and projection algorithm of the SAR imaging mechanism in the differentiable form of probability maps. First-order gradients of the proposed DSR are then analytically derived, so that they can be back-propagated from the rendered image or silhouette to the target geometry and scattering attributes. A 3D inverse target reconstruction algorithm from SAR images is devised. Several simulation and reconstruction experiments are conducted, including targets with and without background, using both synthesized data and real inverse SAR (ISAR) data measured by ground radar. Results demonstrate the efficacy of the proposed DSR and its inverse approach.

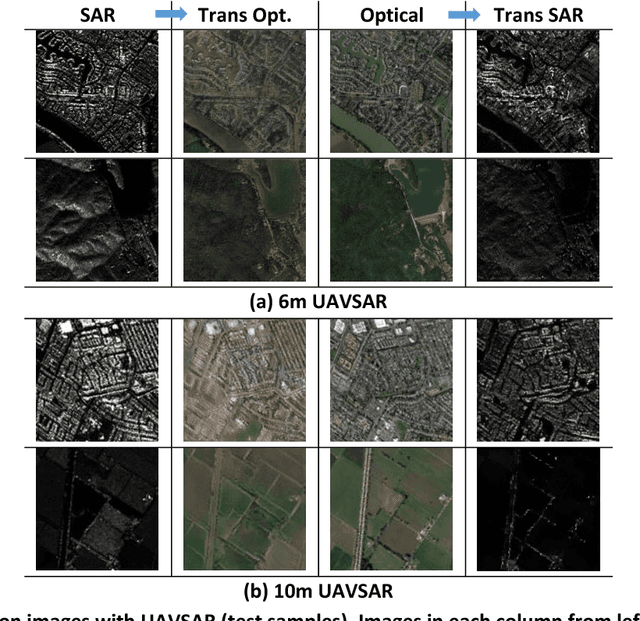

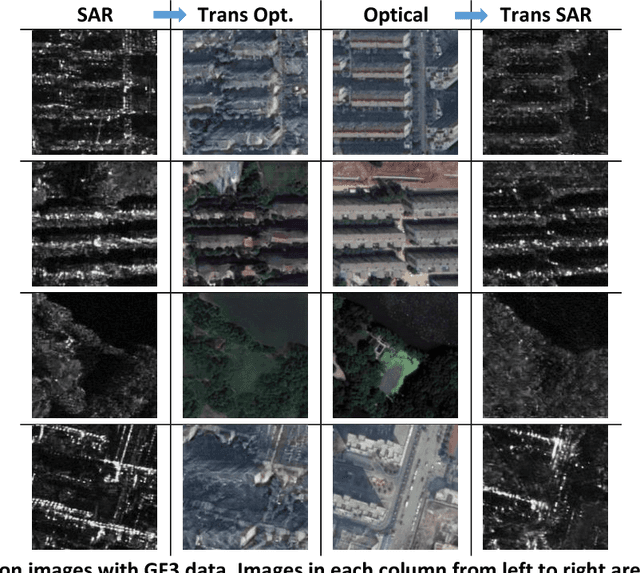

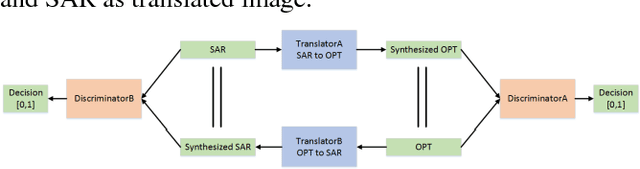

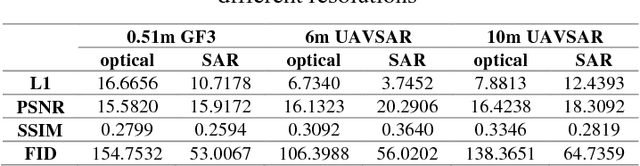

Reciprocal Translation between SAR and Optical Remote Sensing Images with Cascaded-Residual Adversarial Networks

Jan 24, 2019

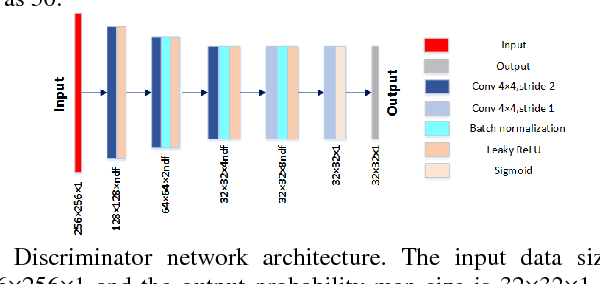

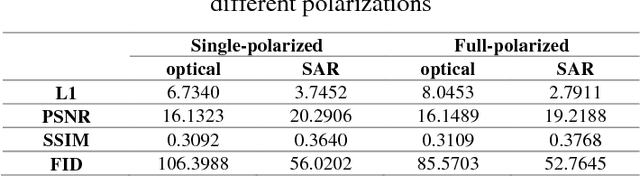

Abstract: Despite the advantages of all-weather, all-day, high-resolution imaging, synthetic aperture radar (SAR) images are much less viewed and used by the general public because human vision is not adapted to microwave scattering phenomena. However, expert interpreters can be trained by comparing SAR and optical images side by side to learn the mapping rules from SAR to optical. This paper attempts to develop machine intelligence that is trainable with large volumes of co-registered SAR and optical images and translates SAR images to their optical versions for assisted SAR image interpretation. Reciprocal SAR-optical image translation is a challenging task because it is raw data translation between two physically very different sensing modalities. This paper proposes a novel reciprocal adversarial network scheme in which cascaded residual connections and a hybrid L1-GAN loss are employed. It is trained and tested on both spaceborne GF-3 and airborne UAVSAR images. Results are presented for datasets of different resolutions and polarizations and compared with other state-of-the-art methods. The Fréchet inception distance (FID) is used to quantitatively evaluate the translation performance. The possibility of unsupervised learning with unpaired SAR and optical images is also explored. Results show that the proposed translation network works well under many scenarios and could potentially be used for assisted SAR interpretation.
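The abstract mentions a hybrid L1-GAN loss without stating its form. A common way to build such a loss for paired image translation is to add a weighted L1 reconstruction term to the generator's adversarial term. The sketch below uses that common form on flattened pixel lists. The function names, the non-saturating adversarial term, and the weight `l1_weight=100.0` are assumptions for illustration and are not taken from the paper.

```python
import math


def l1_loss(pred, target):
    """Mean absolute error between two equally sized pixel lists."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def adversarial_loss(disc_probs_on_fake, eps=1e-12):
    """Generator-side GAN term (assumed non-saturating form).

    disc_probs_on_fake: discriminator outputs in (0, 1] for generated
    images; the generator is rewarded when they approach 1.
    """
    n = len(disc_probs_on_fake)
    return -sum(math.log(max(p, eps)) for p in disc_probs_on_fake) / n


def hybrid_generator_loss(fake, real, disc_probs_on_fake, l1_weight=100.0):
    """Adversarial term plus weighted L1 term (weight is an assumption)."""
    return adversarial_loss(disc_probs_on_fake) + l1_weight * l1_loss(fake, real)


if __name__ == "__main__":
    fake = [0.2, 0.8, 0.5]   # translated (generated) pixels
    real = [0.0, 1.0, 0.5]   # co-registered ground-truth pixels
    print(hybrid_generator_loss(fake, real, disc_probs_on_fake=[0.5]))
```

The L1 term ties the output to the co-registered ground truth pixel by pixel, while the adversarial term pushes the output toward the target image distribution; the weight sets the balance between the two.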

Translating SAR to Optical Images for Assisted Interpretation

Jan 08, 2019

Abstract: Despite the advantages of all-weather, all-day, high-resolution imaging, SAR remote sensing images are much less viewed and used by the general public because human vision is not adapted to microwave scattering phenomena. However, expert interpreters can be trained by comparing SAR and optical images side by side to learn the translation rules from SAR to optical. This paper attempts to develop machine intelligence that is trainable with large volumes of co-registered SAR and optical images and translates SAR images to their optical versions for assisted SAR interpretation. A novel reciprocal GAN scheme is proposed for this translation task. It is trained and tested on both spaceborne GF-3 and airborne UAVSAR images. Comparisons and analyses are presented for datasets of different resolutions and polarizations. Results show that the proposed translation network works well under many scenarios and could potentially be used for assisted SAR interpretation.