Shiguang Liu

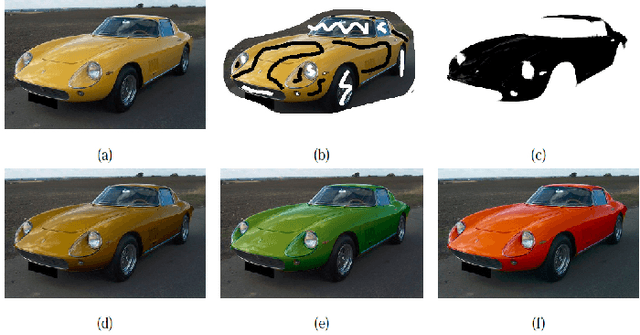

An Overview of Color Transfer and Style Transfer for Images and Videos

Apr 28, 2022

Abstract: Image or video appearance features (e.g., color, texture, tone, and illumination) reflect one's visual perception and direct impression of an image or video. Given a source image (video) and a target image (video), the color transfer technique aims to process the color of the source image or video (note that the source image or video is also referred to as the reference image or video in some literature) so that it looks like that of the target image or video, i.e., transferring the appearance of the target image or video to the source image or video, which can thereby change one's perception of the source image or video. As an extension of color transfer, style transfer refers to rendering the content of a target image or video in the style of an artist, using either a style sample or a set of images, through a style transfer model. As an emerging field, the study of style transfer has attracted the attention of a large number of researchers. After decades of development, it has become a highly interdisciplinary research area in which a variety of artistic expression styles can be achieved. This paper provides an overview of color transfer and style transfer methods over the past years.
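
To make the idea of color transfer concrete, here is a minimal sketch of one classic family of approaches, global statistics matching: each channel of the source is shifted and scaled so that its mean and standard deviation match those of the target. This is an illustration only and is not taken from the paper; the function name `transfer_color_statistics`, the choice to work directly on RGB channels, and the 0 to 255 value range are all assumptions.

```python
import numpy as np

def transfer_color_statistics(source, target, eps=1e-8):
    """Match per-channel mean and std of `source` to those of `target`.

    Both inputs are assumed to be H x W x 3 arrays with values in [0, 255].
    Working directly in RGB is a simplification made for this sketch.
    """
    src = np.asarray(source, dtype=np.float64)
    tgt = np.asarray(target, dtype=np.float64)

    # Per-channel statistics, computed over all pixels.
    src_mean, src_std = src.mean(axis=(0, 1)), src.std(axis=(0, 1))
    tgt_mean, tgt_std = tgt.mean(axis=(0, 1)), tgt.std(axis=(0, 1))

    # Normalize the source, then rescale and shift to the target statistics.
    out = (src - src_mean) / (src_std + eps) * tgt_std + tgt_mean

    # Keep the result inside the assumed valid intensity range.
    return np.clip(out, 0.0, 255.0)
```

Because only global statistics are matched, a sketch like this ignores spatial structure and local content, which is one reason more elaborate color and style transfer methods exist.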

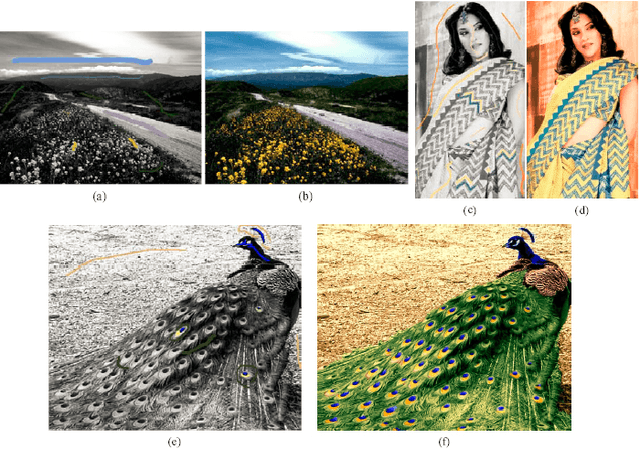

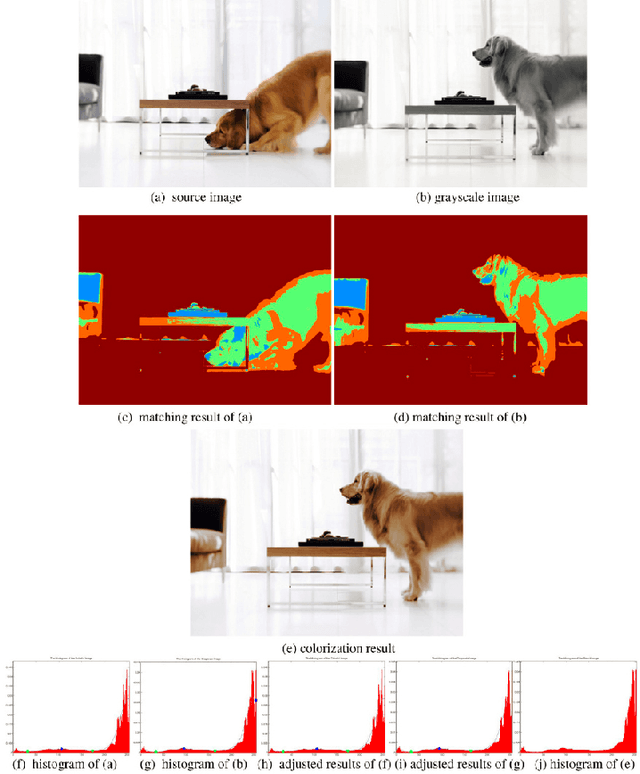

Two Decades of Colorization and Decolorization for Images and Videos

Apr 28, 2022

Abstract: Colorization is a computer-aided process that aims to add color to a grayscale image or video. It can be used to enhance black-and-white images, including black-and-white photos, old-fashioned films, and scientific imaging results. Conversely, decolorization converts a color image or video into a grayscale one. A grayscale image or video contains only brightness information, without color information. It is the basis of some downstream image processing applications such as pattern recognition, image segmentation, and image enhancement. Unlike image decolorization, video decolorization should not only preserve image contrast in each video frame, but also respect the temporal and spatial consistency between video frames. Researchers have devoted themselves to developing decolorization methods that balance spatial-temporal consistency and algorithm efficiency. With the prevalence of digital cameras and mobile phones, image and video colorization and decolorization have received increasing attention from researchers. This paper gives an overview of the progress of image and video colorization and decolorization methods in the last two decades.
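
As a point of reference for decolorization, the simplest baseline is a fixed weighted sum of the red, green, and blue channels. The sketch below uses the widely used Rec. 601 luma weights; it is an illustrative baseline under that assumption, not a method from the paper, and the names `LUMA_WEIGHTS` and `to_grayscale` are hypothetical.

```python
import numpy as np

# Rec. 601 luma weights for the R, G, and B channels.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_grayscale(rgb):
    """Convert an H x W x 3 RGB array to an H x W grayscale array."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Weighted sum over the last (channel) axis.
    return rgb @ LUMA_WEIGHTS
```

A fixed weighting like this can map visibly different colors to nearly the same gray level, which is the kind of contrast loss that contrast-preserving decolorization methods try to avoid.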

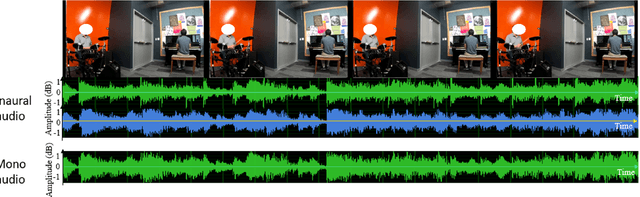

Binaural Audio Generation via Multi-task Learning

Sep 02, 2021

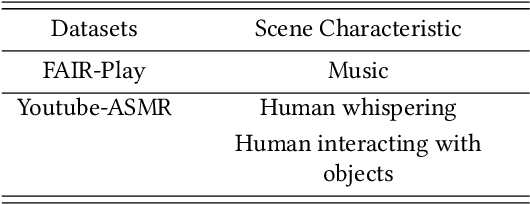

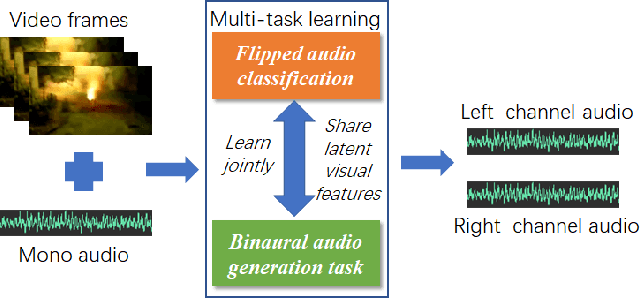

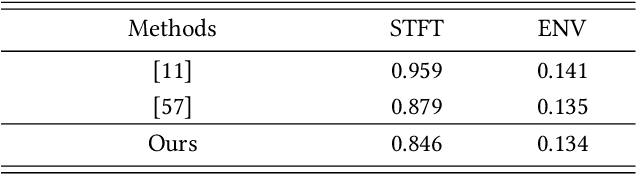

Abstract: We present a learning-based approach for generating binaural audio from mono audio using multi-task learning. Our formulation leverages additional information from two related tasks: the binaural audio generation task and the flipped audio classification task. Our learning model extracts spatialization features from the visual and audio input, predicts the left and right audio channels, and judges whether the left and right channels are flipped. First, we extract visual features from the video frames using ResNet. Next, we perform binaural audio generation and flipped audio classification using separate subnetworks based on the visual features. Our learning method optimizes an overall loss defined as the weighted sum of the losses of the two tasks. We train and evaluate our model on the FAIR-Play dataset and the YouTube-ASMR dataset. We perform quantitative and qualitative evaluations to demonstrate the benefits of our approach over prior techniques.
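
The weighted-sum objective described in the abstract can be sketched as follows. The abstract states only that the two task losses are combined by a weighted sum; the specific loss functions used here (mean squared error for generation, binary cross-entropy for flip classification), the weight values, and the function name `multitask_loss` are assumptions made for illustration.

```python
import numpy as np

def multitask_loss(pred_audio, true_audio, flip_prob, flip_label,
                   w_gen=1.0, w_cls=0.5):
    """Weighted sum of a generation loss and a flip-classification loss.

    The loss choices and default weights are hypothetical, not the paper's.
    """
    pred_audio = np.asarray(pred_audio, dtype=np.float64)
    true_audio = np.asarray(true_audio, dtype=np.float64)
    flip_label = np.asarray(flip_label, dtype=np.float64)

    # Generation task: mean squared error between predicted and true audio.
    gen_loss = np.mean((pred_audio - true_audio) ** 2)

    # Classification task: binary cross-entropy on the "flipped" probability.
    p = np.clip(np.asarray(flip_prob, dtype=np.float64), 1e-7, 1 - 1e-7)
    cls_loss = -np.mean(flip_label * np.log(p)
                        + (1 - flip_label) * np.log(1 - p))

    # Overall objective: weighted sum of the two task losses.
    return w_gen * gen_loss + w_cls * cls_loss
```

In a setup like this, the weights control how strongly the auxiliary classification task influences the features shared with the generation task.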
