Shiding Sun

Attention-based Multi-instance Neural Network for Medical Diagnosis from Incomplete and Low Quality Data

Apr 09, 2019

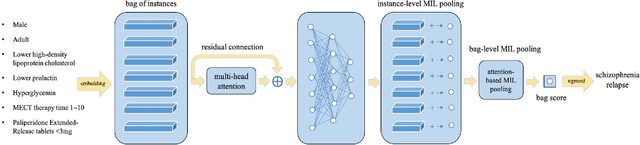

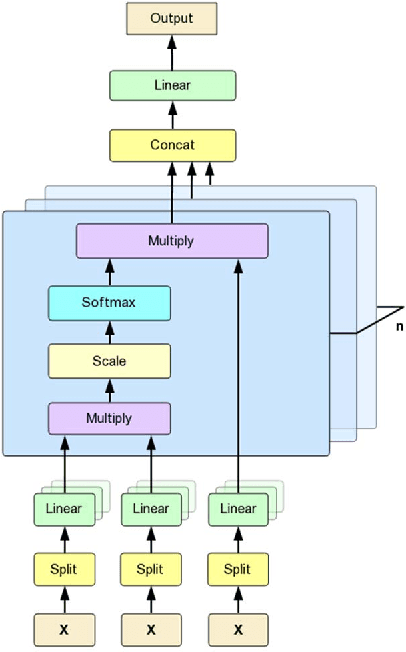

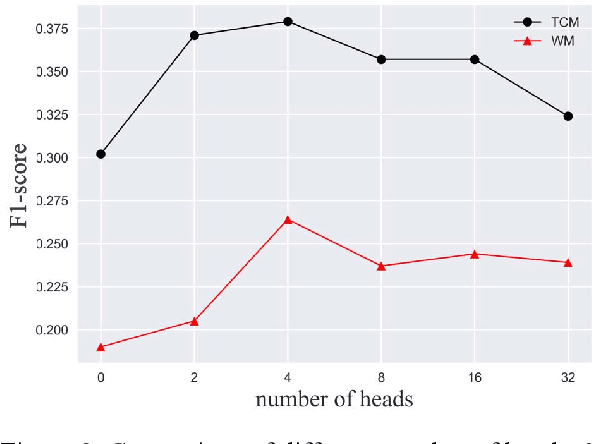

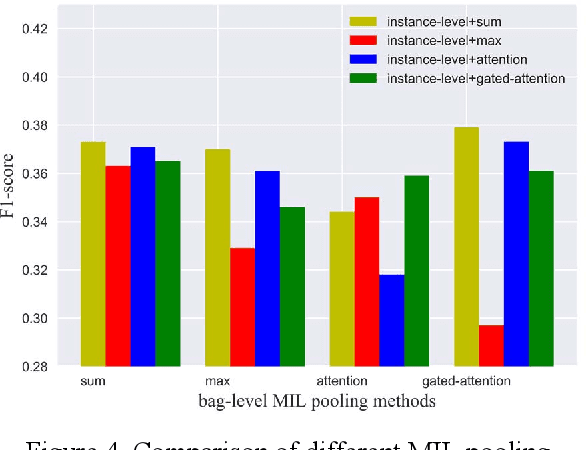

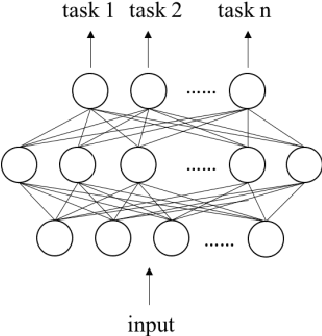

Abstract: One way to extract patterns from clinical records is to treat each patient record as a bag containing a varying number of instances in the form of symptoms. Medical diagnosis then amounts to discovering the informative instances first and mapping them to one or more diseases. In many cases, patients are represented as vectors in some feature space and a classifier is then applied to generate diagnosis results. However, real-world data is often of low quality for a variety of reasons, such as problems with consistency, integrity, completeness, and accuracy. In this paper, we propose a novel approach, the attention-based multi-instance neural network (AMI-Net), to perform single-disease classification based only on the existing and valid information in real-world outpatient records. For each patient, it takes a bag of instances as input and outputs the bag label directly in an end-to-end way. An embedding layer is adopted at the beginning, mapping instances into an embedding space that represents the individual patient's condition. The correlations among instances and their importance for the final classification are captured by a multi-head attention transformer, instance-level multi-instance pooling, and bag-level multi-instance pooling. The proposed approach was tested on two non-standardized and highly imbalanced datasets, one in the Traditional Chinese Medicine (TCM) domain and the other in the Western Medicine (WM) domain. Our preliminary results show that the proposed approach outperforms all baselines by a significant margin.
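The bag-of-instances idea can be illustrated with a small sketch. This is not the AMI-Net implementation: it swaps the multi-head attention transformer and the two pooling stages for a single softmax attention pooling step, and every name and number in it (`attention_pool`, `predict_bag`, `score_vec`, `clf_vec`, the toy embeddings) is a hypothetical chosen for illustration. It only shows how a bag with any number of recorded symptoms can be reduced to one bag-level probability without padding missing entries.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    # Subtract the maximum for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(bag, score_vec):
    """Weighted sum of instance embeddings; weights are a softmax over scores."""
    weights = softmax([dot(score_vec, inst) for inst in bag])
    dim = len(bag[0])
    pooled = [sum(w * inst[d] for w, inst in zip(weights, bag)) for d in range(dim)]
    return pooled, weights

def predict_bag(bag, score_vec, clf_vec, bias=0.0):
    """Bag-level probability from the pooled embedding (sigmoid of a linear score)."""
    pooled, weights = attention_pool(bag, score_vec)
    logit = dot(clf_vec, pooled) + bias
    return 1.0 / (1.0 + math.exp(-logit)), weights

# Hypothetical toy data: two patients with different numbers of recorded symptoms.
patient_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
patient_b = [[1.0, 0.0]]
score_vec = [2.0, 0.0]   # assumed attention parameters, not learned here
clf_vec = [1.0, -1.0]    # assumed classifier parameters, not learned here

prob_a, weights_a = predict_bag(patient_a, score_vec, clf_vec)
prob_b, weights_b = predict_bag(patient_b, score_vec, clf_vec)
```

The attention weights always sum to one over whatever instances are present, so a record with a single valid symptom and a record with many are handled by the same code path.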

CNN based Multi-Instance Multi-Task Learning for Syndrome Differentiation of Diabetic Patients

Jan 19, 2019

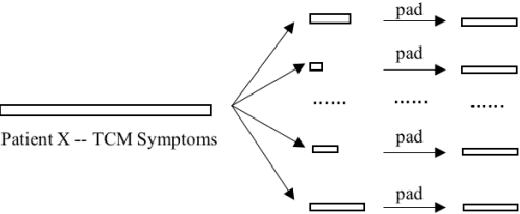

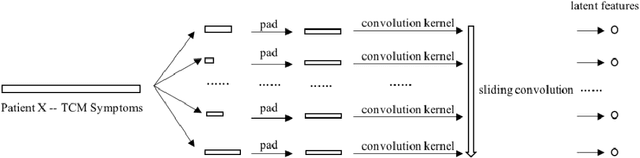

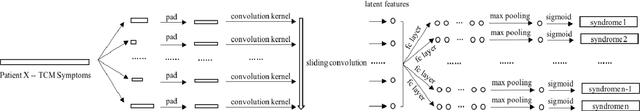

Abstract: Syndrome differentiation in Traditional Chinese Medicine (TCM) is the process of understanding and reasoning about a patient's body condition, and it is the essential step and premise of effective treatment. However, due to its complexity and lack of standardization, it is challenging to achieve. In this study, we consider each patient's record as a one-dimensional image and symptoms as pixels, in which missing and negative values are represented by zero pixels. The objective is to find relevant symptoms first and then map them to the proper syndromes, which is similar to the object detection problem in computer vision. Inspired by this, we employ multi-instance multi-task learning combined with a convolutional neural network (MIMT-CNN) for syndrome differentiation, which takes region proposals as input and outputs image labels directly. The neural network consists of region proposal generation, a convolutional layer, a fully connected layer, and a max pooling (multi-instance pooling) layer followed by the sigmoid function in each syndrome prediction task, for image representation learning and final result generation. On the diabetes dataset, it performs better than all other baseline methods. Moreover, it generates results stably and reliably, even on a dataset with a small sample size, a large number of missing values, and noise.
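The pipeline described in the abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' MIMT-CNN: region proposals are taken to be plain sliding windows, each kernel spans a whole region (so the convolution collapses to one dot product per region), the fully connected layer is a single weight vector per syndrome, and all names and values (`region_proposals`, `predict_syndromes`, `kernels`, `task_weights`, the toy record) are hypothetical.

```python
import math

def region_proposals(record, width, stride=1):
    """Sliding windows over the 1-D symptom record (assumed proposal scheme)."""
    return [record[i:i + width] for i in range(0, len(record) - width + 1, stride)]

def region_features(region, kernels):
    """One ReLU-activated feature per kernel; each kernel covers the whole region."""
    return [max(0.0, sum(k * v for k, v in zip(kernel, region))) for kernel in kernels]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict_syndromes(record, kernels, task_weights, width):
    """Per-task score for every region, max pooled over regions, then sigmoid."""
    feats = [region_features(r, kernels) for r in region_proposals(record, width)]
    probs = []
    for w in task_weights:  # one weight vector per syndrome (task)
        scores = [sum(wi * fi for wi, fi in zip(w, f)) for f in feats]
        probs.append(sigmoid(max(scores)))  # multi-instance (max) pooling
    return probs

# Hypothetical record: 1 = symptom present, 0 = missing or negative.
record = [0.0, 1.0, 1.0, 0.0, 0.0, 1.0]
kernels = [[1.0, 1.0], [1.0, -1.0]]       # assumed filters, not learned here
task_weights = [[1.0, 0.0], [0.0, 1.0]]   # assumed weights for two syndromes

regions = region_proposals(record, width=2)
probs = predict_syndromes(record, kernels, task_weights, width=2)
empty_probs = predict_syndromes([0.0] * 6, kernels, task_weights, width=2)
```

Because max pooling keeps only the strongest region per syndrome, zero pixels from missing values cannot raise a score; in this sketch an all-zero record yields a probability of 0.5 for every task.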
