Shengquan Wang

A Semi-Supervised Text Generation Framework Combining a Deep Transformer and a GAN

Feb 09, 2025

Abstract: This paper introduces a framework that connects a deep generative pre-trained Transformer language model with a generative adversarial network (GAN) for semi-supervised text generation. Specifically, the proposed 24-layer model is first pre-trained without supervision on a large and diverse text corpus. A simple GAN architecture for synthetic text generation is then introduced, with Gumbel-Softmax applied to handle the discreteness of tokens. The paper also presents a semi-supervised approach in which real data is augmented with GAN samples, and the merged dataset is used to fine-tune the Transformer model. Detailed theoretical derivations are included, outlining the proof of the min-max objective function, together with an extensive discussion of the Gumbel-Softmax reparameterization trick.
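As a rough illustration of the Gumbel-Softmax step mentioned in the abstract, the sketch below draws a relaxed one-hot sample over token indices from a vector of logits. It is not the paper's implementation: the function name `gumbel_softmax`, the `temperature` parameter, and the example logits are assumptions made for illustration, and only the Python standard library is used.

```python
import math
import random


def gumbel_softmax(logits, temperature=1.0, rng=random):
    """Return a relaxed one-hot sample (a probability vector) over tokens.

    Hypothetical helper for illustration; not taken from the paper.
    """
    noisy = []
    for logit in logits:
        # Clamp u away from 0 and 1 so both logarithms stay finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        # Gumbel(0, 1) noise: g = -log(-log(u)), with u ~ Uniform(0, 1).
        g = -math.log(-math.log(u))
        noisy.append((logit + g) / temperature)

    # Numerically stable softmax over the perturbed, scaled logits.
    peak = max(noisy)
    exps = [math.exp(x - peak) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]


# Example: three candidate tokens; a lower temperature gives a sharper sample.
sample = gumbel_softmax([2.0, 0.5, -1.0], temperature=0.5, rng=random.Random(0))
```

Because the output is a smooth function of the logits, a discriminator's gradient can flow back to the generator through the sample, which is why this relaxation is commonly used when the generated tokens are discrete.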

RSnet: An improvement for Darknet

Jan 16, 2020

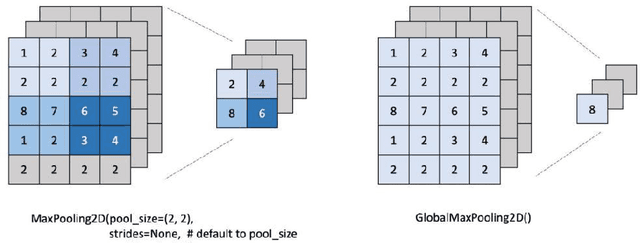

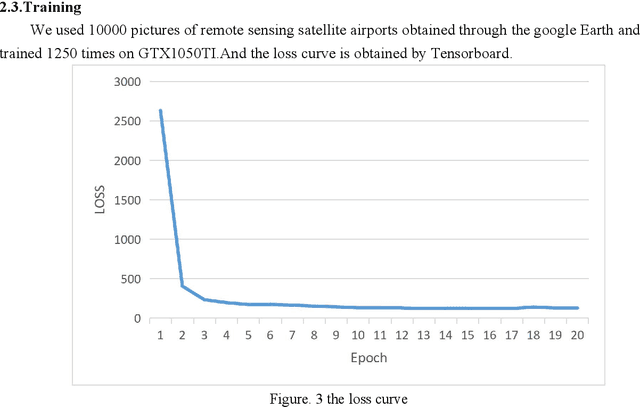

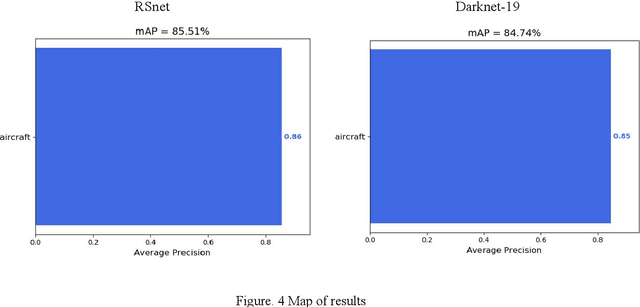

Abstract: When we recently used this method to identify aircraft targets in remote sensing images, we found some defects in our own YOLOv2 and Darknet-19 network. The features in the images we identified are not very clear, which is why we could not obtain better results. We then replaced the max pooling in the YOLOv3 network with global max pooling. Under the same test conditions, we obtained a higher mAP. It achieves 76.9 AP50 in 100 ms on a GTX 1050 Ti, compared to 80.5 AP50 in 627 ms by our net. The improved network obtained an mAP of 86%, higher than the former.
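For readers unfamiliar with the operation named in the abstract, the sketch below shows what global max pooling computes: each channel of a feature map is reduced to its single largest activation. The abstract does not say where in the network the replacement is made, so this only illustrates the operation itself; the function name `global_max_pool` and the nested-list layout (channels, then rows, then columns) are assumptions.

```python
def global_max_pool(feature_maps):
    """Reduce each 2D channel to its maximum value.

    feature_maps: list of channels, each a list of rows of numbers.
    Hypothetical helper for illustration; not taken from the paper.
    """
    return [max(max(row) for row in channel) for channel in feature_maps]


# Example: two 2x2 channels collapse to one value per channel.
pooled = global_max_pool([
    [[1, 2], [3, 4]],
    [[-1, -5], [-2, -3]],
])
```

Unlike ordinary max pooling, which slides a small window and keeps the spatial layout, this reduction discards position entirely and keeps only the strongest response per channel.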
