Shenggang Ying

Quantum Privacy-Preserving Perceptron

Jul 31, 2017

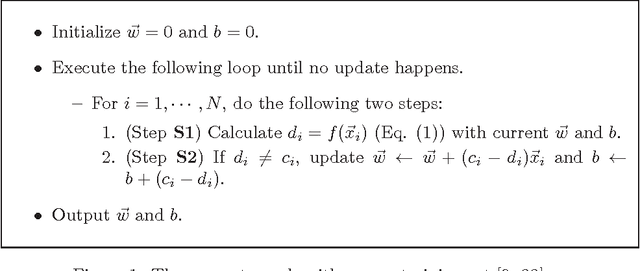

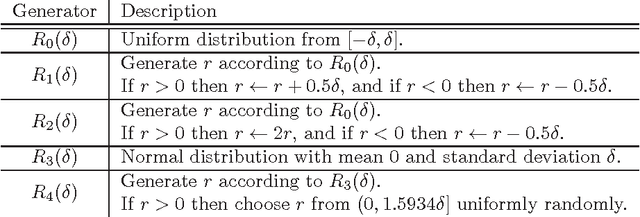

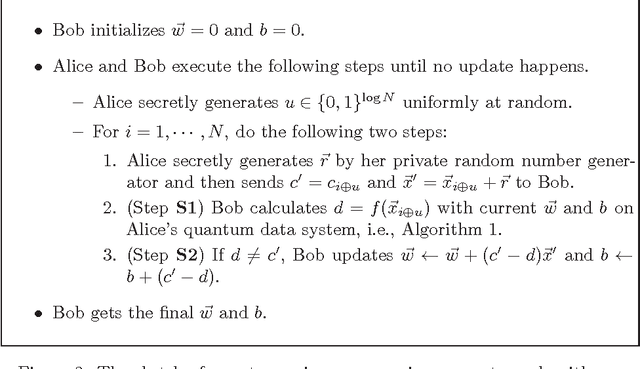

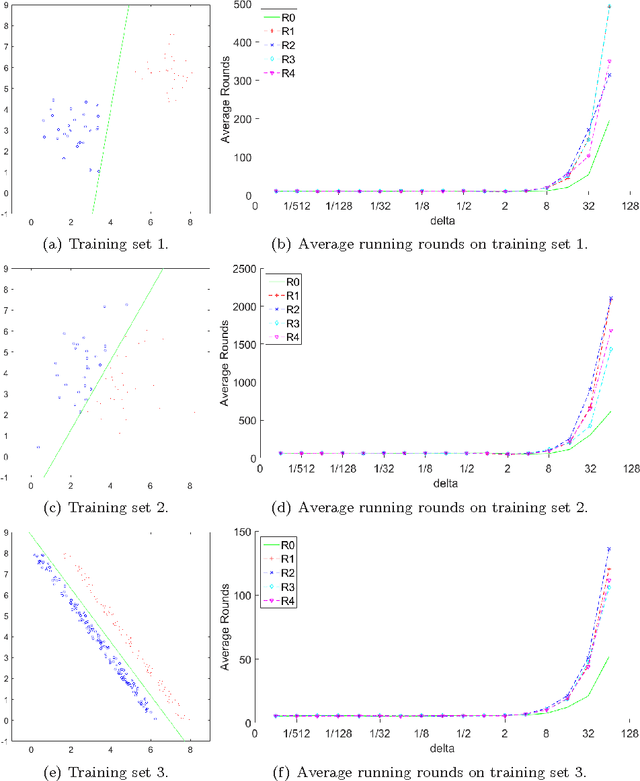

Abstract: With the extensive application of machine learning, the presence of private or sensitive data in training examples has become an increasingly serious issue: during training, personal information or habits may be disclosed to unintended persons or organisations, which can cause serious privacy problems or even financial loss. In this paper, we present a quantum privacy-preserving algorithm for machine learning with the perceptron. The original training examples are protected in two main steps. First, when checking the current classifier, quantum tests are employed to detect possible dishonesty by the data user. Second, when updating the current classifier, private random noise is used to protect the original data. The advantages of our algorithm are: (1) it protects training examples better than the known classical methods; (2) it requires no quantum database and is thus easy to implement.

Quantum Privacy-Preserving Data Mining

Jan 14, 2016

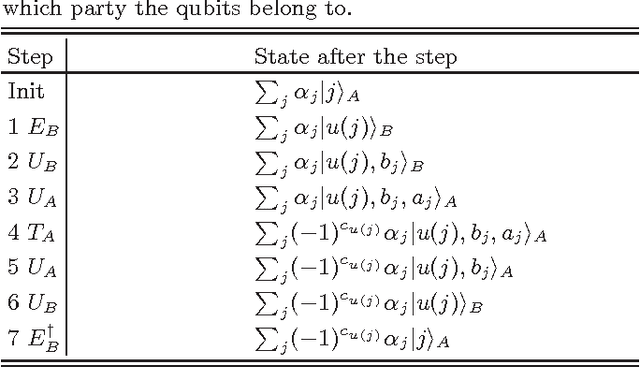

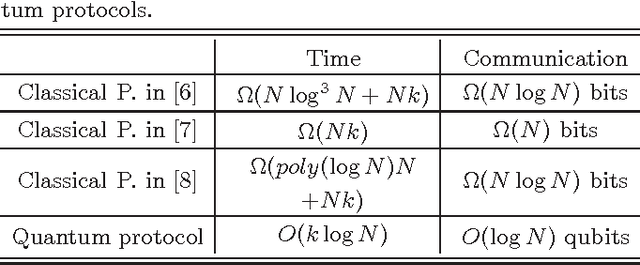

Abstract: Data mining is a key technology in big data analytics, and it can discover understandable knowledge (patterns) hidden in large data sets. Association rules are among the most useful knowledge patterns, and a large number of algorithms have been developed in the data mining literature to generate association rules for different problems and situations. Privacy becomes a vital issue when data mining is applied to sensitive data sets such as medical records, commercial data sets and national security data. In this Letter, we present a quantum protocol for mining association rules on vertically partitioned databases. The quantum protocol can improve the privacy level preserved by known classical protocols and at the same time exponentially reduce the computational complexity and communication cost.