Shao-Bing Gao

Improving Color Constancy by Discounting the Variation of Camera Spectral Sensitivity

Nov 01, 2016

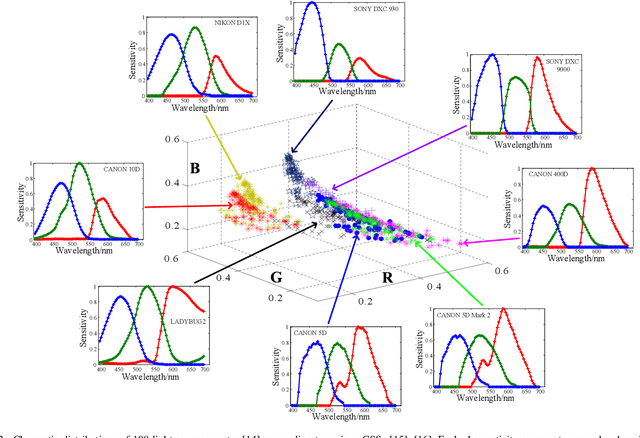

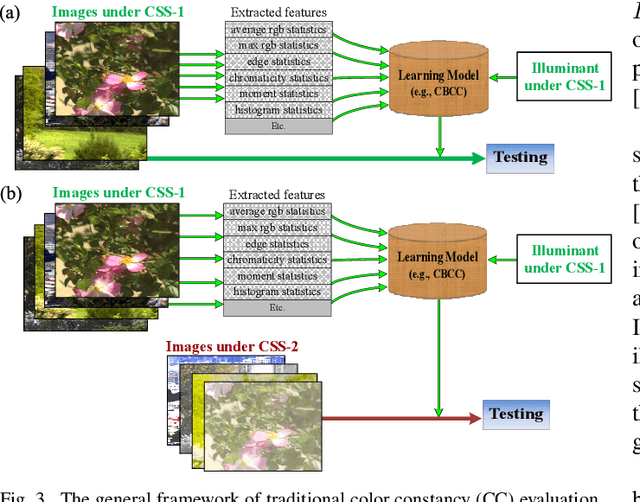

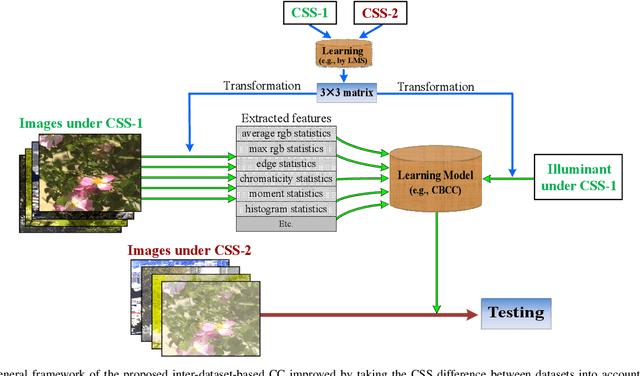

Abstract: Recovering the true scene colors from a color-biased image by discounting the effects of both the scene illuminant and the camera spectral sensitivity (CSS) is an ill-posed problem. Most color constancy (CC) models first estimate the illuminant color and then remove it from the color-biased image to obtain an image taken under white light, without explicitly considering the effect of CSS on CC. This paper first studies the CSS effect on illuminant estimation in inter-dataset-based CC (inter-CC), i.e., training a CC model on one dataset and then testing it on another dataset captured with a different CSS. We show that existing CC models degrade clearly in inter-CC applications. We then propose a simple way to overcome this degradation: first quickly learn a transform matrix between the two distinct CSSs (CSS-1 and CSS-2). The learned matrix is used to convert the data rendered under CSS-1 (including the illuminant ground truth and the color-biased images) into CSS-2, and the CC model is then trained and applied on the color-biased images under CSS-2, without the burden of acquiring a training set under CSS-2. Extensive experiments on synthetic and real images show that our method clearly improves the inter-CC performance of traditional CC algorithms. We suggest that by taking the CSS effect into account, truly color-constant images, invariant to changes in both the illuminant and the camera sensor, are more likely to be obtained.
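The CSS-to-CSS conversion step can be illustrated with a small sketch. It is a minimal example, not the authors' implementation: it assumes a linear camera model and a single 3×3 matrix fitted by ordinary least squares to paired responses of the same surfaces under the same light, recorded (or simulated) with both sensitivities. The abstract does not say how the matrix is learned, so the fitting procedure, the random spectra, and the function names `simulate_responses`, `learn_css_transform`, `convert_to_css2` and `angular_error_deg` are all hypothetical.

```python
import numpy as np


def simulate_responses(reflectances, illuminant, css):
    """Linear camera model: rho_k = sum over lambda of R(lambda) * E(lambda) * S_k(lambda).

    reflectances: (N, W) surface reflectance spectra
    illuminant:   (W,)   illuminant spectral power distribution
    css:          (W, 3) camera spectral sensitivities (R, G, B columns)
    returns:      (N, 3) raw RGB responses
    """
    return (reflectances * illuminant) @ css


def learn_css_transform(rgb_css1, rgb_css2):
    """Hypothetical learner: 3x3 matrix M minimising ||rgb_css1 @ M - rgb_css2||_F."""
    M, _, _, _ = np.linalg.lstsq(rgb_css1, rgb_css2, rcond=None)
    return M


def convert_to_css2(data, M):
    """Map linear images (..., 3) or illuminant colours (K, 3) from CSS-1 into CSS-2."""
    return data @ M


def angular_error_deg(est, gt):
    """Recovery angular error in degrees, a metric commonly used to evaluate CC."""
    cos = np.sum(est * gt, axis=-1) / (
        np.linalg.norm(est, axis=-1) * np.linalg.norm(gt, axis=-1)
    )
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = 31  # assumed sampling: 400-700 nm in 10 nm steps
    reflectances = rng.uniform(0.05, 0.95, size=(200, W))
    illuminant = rng.uniform(0.5, 1.5, size=W)
    css1 = rng.uniform(0.0, 1.0, size=(W, 3))  # stand-in for camera 1
    css2 = rng.uniform(0.0, 1.0, size=(W, 3))  # stand-in for camera 2

    # Fit the transform on paired responses of the same surfaces.
    M = learn_css_transform(
        simulate_responses(reflectances, illuminant, css1),
        simulate_responses(reflectances, illuminant, css2),
    )

    # Convert a CSS-1 illuminant ground truth (white-surface response) into CSS-2
    # and compare it with the true CSS-2 illuminant colour.
    gt_css1 = illuminant @ css1
    pred_css2 = convert_to_css2(gt_css1, M)
    print("angular error (deg):", angular_error_deg(pred_css2, illuminant @ css2))
```

A single 3×3 linear map is exact only when one camera's sensitivities are a linear combination of the other's; for unrelated sensitivities, such as the random stand-ins above, the least-squares fit is an approximation and the printed error is nonzero.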
