
Shang-Nan Wang

Unsupervised prototype learning in an associative-memory network

Jul 25, 2017

Figures and Tables:

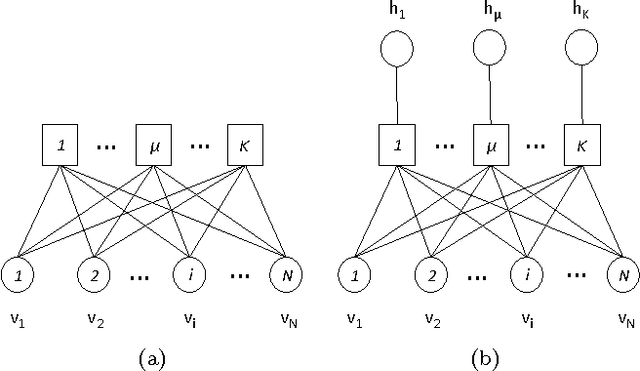

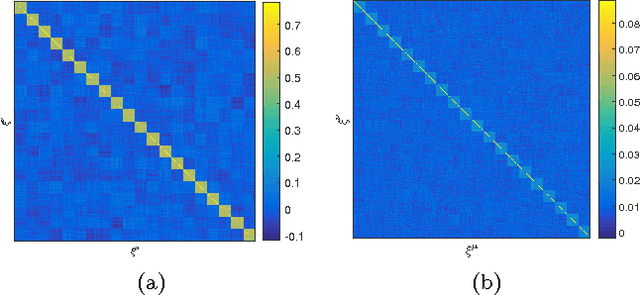

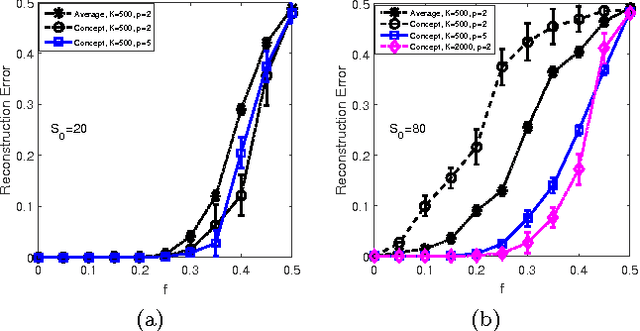

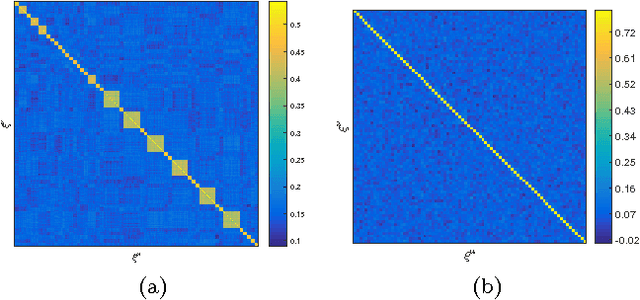

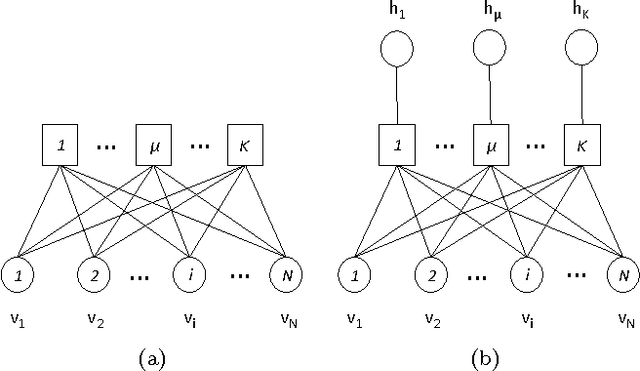

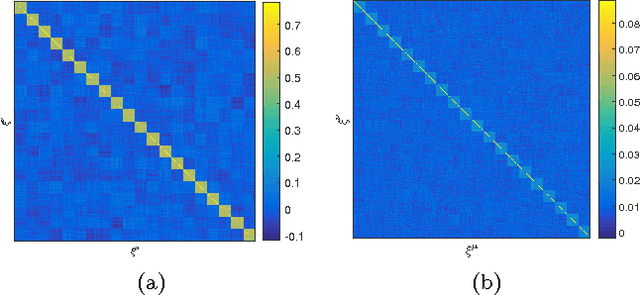

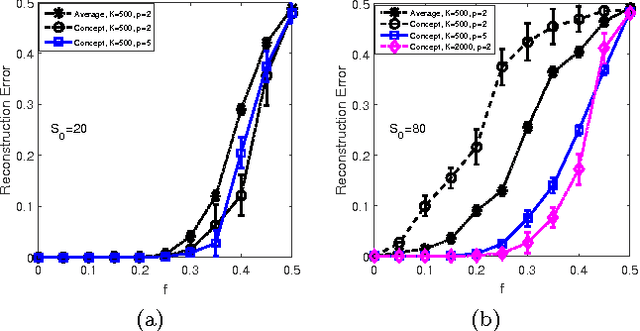

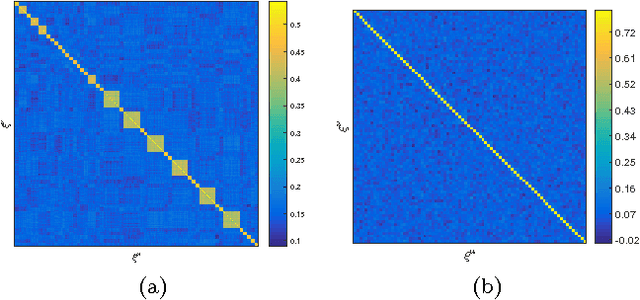

Abstract: Unsupervised learning in a generalized Hopfield associative-memory network is investigated in this work. First, we prove that the (generalized) Hopfield model is equivalent to a semi-restricted Boltzmann machine with one layer of visible neurons and another layer of binary hidden neurons, so it can serve as a building block for a multilayered deep-learning system. We then demonstrate that the Hopfield network can learn to form a faithful internal representation of the observed samples, with the learned memory patterns being prototypes of the input data. Furthermore, we propose a spectral method to extract a small set of concepts (idealized prototypes) as the most concise summary or abstraction of the empirical data.
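To make the setting concrete, the sketch below implements only the *classic* Hopfield associative memory: Hebbian couplings $J_{ij} = \frac{1}{N}\sum_\mu \xi_i^\mu \xi_j^\mu$ (with $J_{ii}=0$), the energy $E(s) = -\frac{1}{2}\sum_{i,j} J_{ij} s_i s_j$, and zero-temperature asynchronous recall $s_i \leftarrow \operatorname{sign}\big(\sum_j J_{ij} s_j\big)$. It is a textbook illustration under that assumption, not the generalized model, the unsupervised learning protocol, or the spectral concept-extraction method of this preprint, whose details are not given here. The function names are hypothetical.

```python
import random


def hebbian_couplings(patterns):
    """Hebbian couplings J_ij = (1/N) * sum_mu xi_i^mu xi_j^mu, with J_ii = 0."""
    n = len(patterns[0])
    J = [[0.0] * n for _ in range(n)]
    for xi in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    J[i][j] += xi[i] * xi[j] / n
    return J


def energy(J, s):
    """Hopfield energy E(s) = -1/2 * sum_{i,j} J_ij s_i s_j."""
    n = len(s)
    return -0.5 * sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(J, s, sweeps=10, seed=0):
    """Zero-temperature asynchronous dynamics in random order; stops at a fixed point."""
    rng = random.Random(seed)
    s = list(s)
    n = len(s)
    for _ in range(sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(J[i][j] * s[j] for j in range(n))  # local field on neuron i
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


if __name__ == "__main__":
    rng = random.Random(1)
    xi = [rng.choice([-1, 1]) for _ in range(20)]  # one stored +/-1 pattern
    J = hebbian_couplings([xi])
    probe = list(xi)
    for k in range(5):  # corrupt 5 of the 20 spins
        probe[k] = -probe[k]
    print(recall(J, probe) == xi)  # → True
```

With a single stored pattern and fewer than half of the spins flipped, every local field points toward the stored pattern, so the corrupted probe is restored exactly; with many stored patterns, recall degrades as the load $P/N$ grows.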

* We found a serious inconsistency between the numerical protocol described in the text and the actual numerical code used by the first author to produce the data. Because of this inconsistency, we have decided to withdraw the preprint. The corresponding author (Hai-Jun Zhou) deeply apologizes for not detecting this inconsistency earlier.
