Seongdeok Bang

Revisiting DDIM Inversion for Controlling Defect Generation by Disentangling the Background

Nov 25, 2024

Abstract:In anomaly detection, the scarcity of anomalous data compared to normal data makes it difficult to use deep neural network representations effectively to identify anomalous features. From a data-centric perspective, generative models can address this data imbalance by synthesizing anomaly datasets. Although previous research has tried to improve the controllability and quality of defect generation, it does not consider the relation between background and defect. Since the defect depends on the object's background (i.e., the normal part of an object), training only on the defect area cannot utilize the background information, and generation can even be biased depending on the mask information. In addition, controlling logical anomalies requires considering the dependency between background and defect areas (e.g., an orange-colored defect on an orange juice bottle). In this paper, we propose modeling a relationship between the background and the defect in which the background affects the denoising of defects, but not the reverse. We introduce a regularizing term to disentangle the denoising of the background from that of defects. Using this disentanglement loss, we rethink defect generation with DDIM Inversion, where we generate the defect on the target normal image. Additionally, we theoretically prove that our methodology can generate a defect on the target normal image with an invariant background. We demonstrate in several experiments that our synthetic data is realistic and effective.

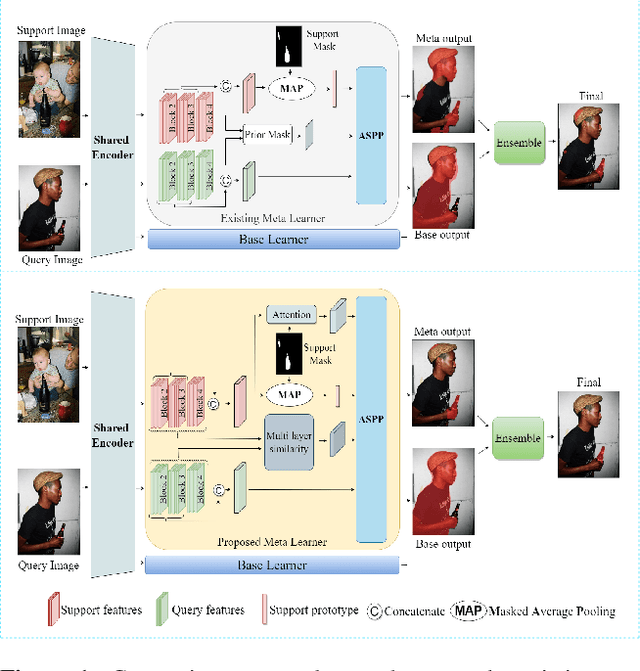

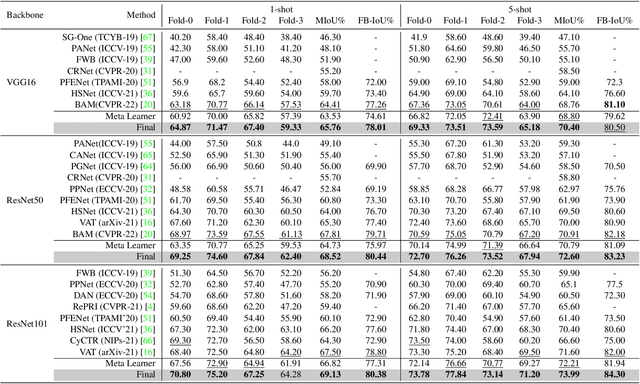

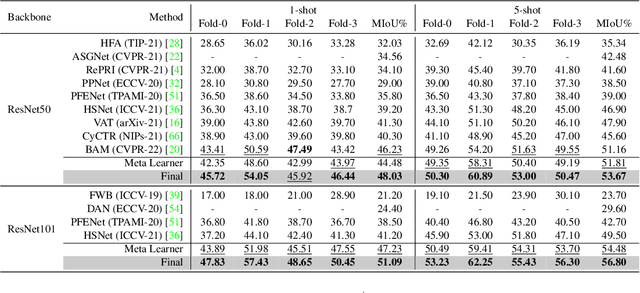
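For readers unfamiliar with the DDIM Inversion that the abstract above builds on, the following is a minimal sketch of the generic deterministic DDIM update run in both directions. It is not the paper's method: there is no disentanglement loss or mask here, and `toy_eps_model`, `invert`, `sample`, and the `alphas` schedule are hypothetical names and values chosen only for illustration. The noise predictor is a constant stand-in for a trained network, which makes the round trip exact; with a real network, inversion is only approximate.

```python
import math


def ddim_step(x, eps, alpha_from, alpha_to):
    """Deterministic DDIM update from cumulative alpha `alpha_from` to `alpha_to`."""
    # Predict the clean sample implied by the current noise estimate.
    x0_pred = [
        (xi - math.sqrt(1.0 - alpha_from) * ei) / math.sqrt(alpha_from)
        for xi, ei in zip(x, eps)
    ]
    # Re-noise the prediction to the target noise level with the same estimate.
    return [
        math.sqrt(alpha_to) * x0 + math.sqrt(1.0 - alpha_to) * ei
        for x0, ei in zip(x0_pred, eps)
    ]


def toy_eps_model(x, t):
    """Hypothetical stand-in for a trained noise predictor (constant output)."""
    return [0.1] * len(x)


def invert(x0, alphas):
    """DDIM inversion: walk from the clean end of the schedule to the noisy end."""
    x = list(x0)
    for t in range(len(alphas) - 1):
        x = ddim_step(x, toy_eps_model(x, t), alphas[t], alphas[t + 1])
    return x


def sample(x_noisy, alphas):
    """DDIM sampling: walk the same schedule back from noisy to clean."""
    x = list(x_noisy)
    for t in range(len(alphas) - 1, 0, -1):
        x = ddim_step(x, toy_eps_model(x, t), alphas[t], alphas[t - 1])
    return x


# Assumed cumulative alpha schedule, from nearly clean to noisy.
alphas = [0.999, 0.9, 0.6, 0.3]
image = [0.5, -1.0, 2.0]          # toy "image" of three pixels
latent = invert(image, alphas)    # inverted latent
restored = sample(latent, alphas) # reconstruction from the latent
```

In this generic form, inverting a normal image and then sampling from the resulting latent reproduces the image; the abstract's contribution concerns how to steer that sampling so that a defect appears while the background stays invariant.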

MSANet: Multi-Similarity and Attention Guidance for Boosting Few-Shot Segmentation

Jun 20, 2022

Abstract:Few-shot segmentation (FSS) aims to segment unseen-class objects given only a handful of densely labeled samples. Prototype learning, where the support feature yields a single or several prototypes by averaging global and local object information, has been widely used in FSS. However, utilizing only prototype vectors may be insufficient to represent the features for all training data. To extract abundant features and make more precise predictions, we propose a Multi-Similarity and Attention Network (MSANet) including two novel modules, a multi-similarity module and an attention module. The multi-similarity module exploits multiple feature maps of support images and query images to estimate accurate semantic relationships. The attention module instructs the network to concentrate on class-relevant information. The network is tested on standard FSS datasets: PASCAL-5i 1-shot, PASCAL-5i 5-shot, COCO-20i 1-shot, and COCO-20i 5-shot. MSANet with a ResNet-101 backbone achieves state-of-the-art performance on all four benchmark settings, with mean intersection over union (mIoU) of 69.13%, 73.99%, 51.09%, and 56.80%, respectively. Code is available at https://github.com/AIVResearch/MSANet
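The prototype learning that the abstract describes as the common FSS baseline can be sketched as masked average pooling followed by a cosine-similarity comparison. This is only an illustration of that baseline under simplifying assumptions, not MSANet's multi-similarity or attention modules: features are plain per-pixel vectors, and the function names and the `threshold` value are hypothetical.

```python
import math


def masked_average_prototype(features, mask):
    """Average the support feature vectors of pixels where mask == 1."""
    dim = len(features[0])
    total = [0.0] * dim
    count = 0
    for feature, inside in zip(features, mask):
        if inside:
            count += 1
            for i in range(dim):
                total[i] += feature[i]
    if count == 0:
        raise ValueError("mask selects no foreground pixels")
    return [value / count for value in total]


def cosine_similarity(a, b):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)


def segment_query(query_features, prototype, threshold=0.5):
    """Label a query pixel as foreground if it is similar enough to the prototype."""
    return [
        1 if cosine_similarity(feature, prototype) > threshold else 0
        for feature in query_features
    ]


# Toy support image: three pixels with 2-D features, the first two are foreground.
support_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
support_mask = [1, 1, 0]
prototype = masked_average_prototype(support_features, support_mask)

# Toy query image: one pixel resembles the foreground, one does not.
query_features = [[1.0, 0.1], [0.0, 1.0]]
prediction = segment_query(query_features, prototype)
```

A single averaged vector discards spatial detail from the support image, which is the limitation the abstract cites as motivation for comparing multiple feature maps instead.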
