Selam Gano

Low Fidelity Visuo-Tactile Pretraining Improves Vision-Only Manipulation Performance

Jun 25, 2024

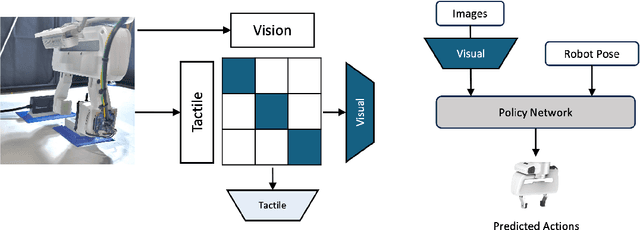

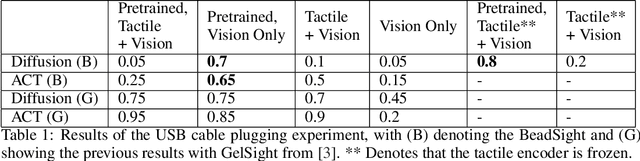

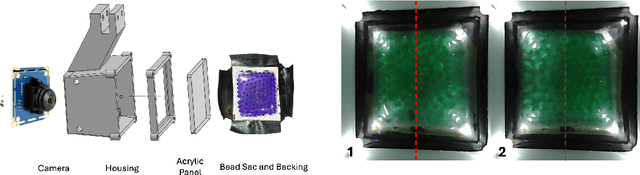

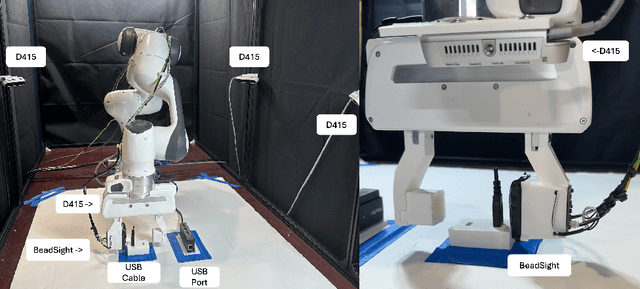

Abstract: Tactile perception is a critical component of solving real-world manipulation tasks, but tactile sensors for manipulation have barriers to use such as fragility and cost. In this work, we use a robust, low-cost tactile sensor, BeadSight, as an alternative to precise pre-calibrated sensors in a pretraining approach to manipulation. We show that tactile pretraining, even with a low-fidelity sensor such as BeadSight, can improve an imitation learning agent's performance on complex manipulation tasks. We evaluate this method on a baseline USB cable plugging task that was previously achieved with a much higher-precision GelSight sensor as the tactile input to pretraining. Our best BeadSight pretrained visuo-tactile agent completed the task with 70% accuracy compared to 85% for the best GelSight pretrained visuo-tactile agent, with vision-only inference for both.

Visuo-Tactile Pretraining for Cable Plugging

Mar 18, 2024

Abstract: Tactile information is a critical tool for fine-grained manipulation. As humans, we rely heavily on tactile information to understand objects in our environments and how to interact with them. We use touch not only to perform manipulation tasks but also to learn how to perform these tasks. Therefore, to create robotic agents that can learn to complete manipulation tasks at a human or super-human level of performance, we need to properly incorporate tactile information into both skill execution and skill learning. In this paper, we investigate how we can incorporate tactile information into imitation learning platforms to improve performance on complex tasks. To do this, we tackle the challenge of plugging in a USB cable, a dexterous manipulation task that relies on fine-grained visuo-tactile servoing. By incorporating tactile information into imitation learning frameworks, we are able to train a robotic agent to plug in a USB cable, a first for imitation learning. Additionally, we explore how tactile information can be used to train non-tactile agents through a contrastive-loss pretraining process. Our results show that by pretraining with tactile information, the performance of a non-tactile agent can be significantly improved, reaching a level on par with visuo-tactile agents. For demonstration videos and access to our codebase, see the project website: https://sites.google.com/andrew.cmu.edu/visuo-tactile-cable-plugging/home
