Sebastian Simon

Disagreement as Data: Reasoning Trace Analytics in Multi-Agent Systems

Jan 18, 2026

Abstract: Learning analytics researchers often analyze qualitative student data such as coded annotations or interview transcripts to understand learning processes. With the rise of generative AI, fully automated and human-AI workflows have emerged as promising methods for analysis. However, methodological standards to guide such workflows remain limited. In this study, we propose that reasoning traces generated by large language model (LLM) agents, especially within multi-agent systems, constitute a novel and rich form of process data to enhance interpretive practices in qualitative coding. We apply cosine similarity to LLM reasoning traces to systematically detect, quantify, and interpret disagreements among agents, reframing disagreement as a meaningful analytic signal. Analyzing nearly 10,000 instances of agent pairs coding human tutoring dialog segments, we show that LLM agents' semantic reasoning similarity robustly differentiates consensus from disagreement and correlates with human coding reliability. Qualitative analysis guided by this metric reveals nuanced instructional sub-functions within codes and opportunities for conceptual codebook refinement. By integrating quantitative similarity metrics with qualitative review, our method has the potential to improve and accelerate establishing inter-rater reliability during coding by surfacing interpretive ambiguity, especially when LLMs collaborate with humans. We discuss how reasoning-trace disagreements represent a valuable new class of analytic signals advancing methodological rigor and interpretive depth in educational research.
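To make the similarity idea in this abstract concrete, here is a minimal sketch of scoring two reasoning traces with cosine similarity. It is not the authors' pipeline: the abstract does not say how traces are embedded, so this sketch assumes plain bag-of-words term counts as a stand-in for a real sentence embedding. The names `trace_similarity` and `flag_for_review` and the 0.5 threshold are hypothetical.

```python
import math
from collections import Counter


def trace_similarity(trace_a: str, trace_b: str) -> float:
    """Cosine similarity between two traces, using word counts as vectors.

    Assumption: bag-of-words counts stand in for a semantic embedding.
    """
    a = Counter(trace_a.lower().split())
    b = Counter(trace_b.lower().split())
    # Dot product over the words the two traces share.
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # An empty trace is treated as sharing nothing.
    return dot / (norm_a * norm_b)


def flag_for_review(pairs: list[tuple[str, str]], threshold: float = 0.5) -> list[int]:
    """Return indices of agent pairs whose traces fall below the threshold.

    The threshold is a hypothetical cut-off, not a value from the paper.
    """
    return [
        index
        for index, (trace_a, trace_b) in enumerate(pairs)
        if trace_similarity(trace_a, trace_b) < threshold
    ]
```

Under these assumptions, pairs flagged by `flag_for_review` would be the ones handed to a human for qualitative review.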

Hey AI, Generate Me a Hardware Code! Agentic AI-based Hardware Design & Verification

Jul 03, 2025

Abstract: Modern Integrated Circuits (ICs) are becoming increasingly complex, and so is their development process. Hardware design verification entails a methodical and disciplined approach to the planning, development, execution, and sign-off of functionally correct hardware designs. This tedious process requires significant effort and time to ensure a bug-free tape-out. The field of Natural Language Processing has undergone a significant transformation with the advent of Large Language Models (LLMs). These powerful models, often referred to as Generative AI (GenAI), have revolutionized how machines understand and generate human language, enabling unprecedented advancements in a wide array of applications, including hardware design verification. This paper presents an agentic AI-based approach to hardware design verification, which empowers AI agents, in collaboration with Human-in-the-Loop (HITL) intervention, to engage in a more dynamic, iterative, and self-reflective process, ultimately performing end-to-end hardware design and verification. This methodology is evaluated on five open-source designs, achieving over 95% coverage with reduced verification time while demonstrating superior performance, adaptability, and configurability.

FuzzWiz -- Fuzzing Framework for Efficient Hardware Coverage

Oct 23, 2024

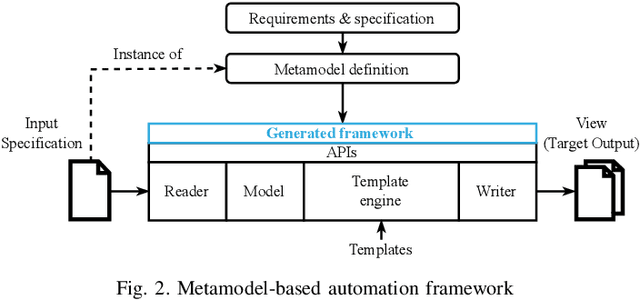

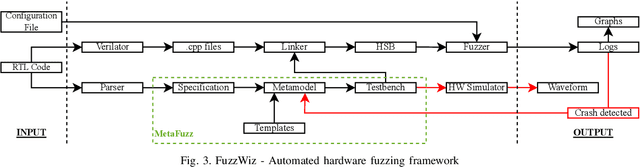

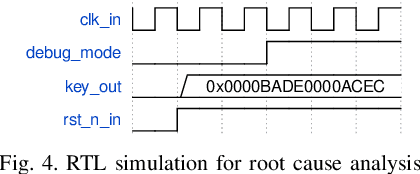

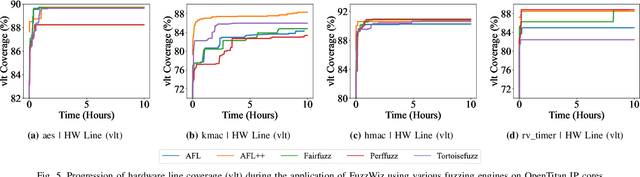

Abstract: The ever-increasing design complexity of System-on-Chips (SoCs) has led to significant verification challenges. Unlike software, bugs in hardware design are vigorous and eternal, i.e., once the hardware is fabricated, it cannot be repaired with a patch. Despite being one of the most powerful techniques used in verification, the dynamic random approach cannot give confidence in complex Register Transfer Level (RTL) designs during the pre-silicon design phase. In particular, achieving coverage targets and exposing bugs is a complicated task with random simulations. In this paper, we leverage an existing testing solution from the software world, known as fuzzing, and apply it to hardware verification in order to achieve coverage targets quickly. We created an automated hardware fuzzing framework, FuzzWiz, using metamodeling and Python to achieve coverage goals faster. It includes parsing the RTL design module, converting it into C/C++ models, creating a generic testbench with assertions, fuzzer-specific compilation, linking, and fuzzing. Furthermore, it is configurable and provides a debug flow if any crash is detected during the fuzzing process. The proposed framework is applied to four IP blocks from Google's OpenTitan chip with various fuzzing engines to show its scalability and compatibility. Our benchmarking results show that we could achieve around 90% of the coverage 10 times faster than a traditional simulation-regression-based approach.

A Methodology for Evaluating RAG Systems: A Case Study On Configuration Dependency Validation

Oct 11, 2024

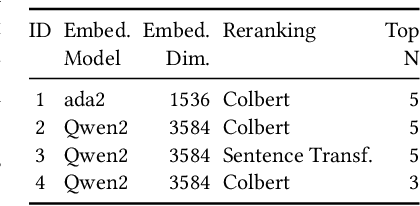

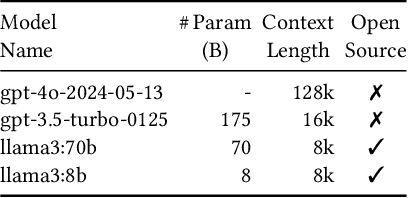

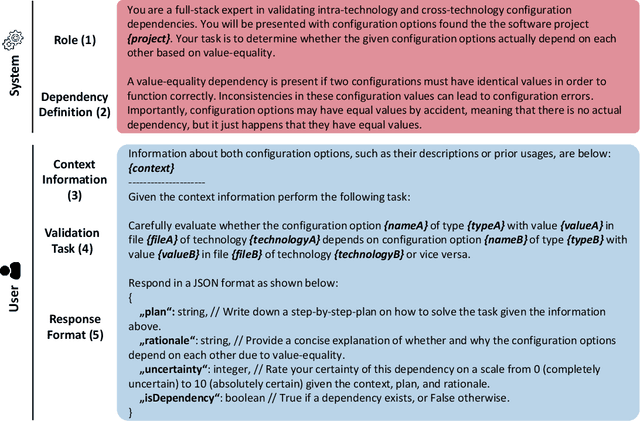

Abstract: Retrieval-augmented generation (RAG) is an umbrella of different components, design decisions, and domain-specific adaptations to enhance the capabilities of large language models and counter their limitations regarding hallucination and outdated and missing knowledge. Since it is unclear which design decisions lead to satisfactory performance, developing RAG systems is often experimental and needs to follow a systematic and sound methodology to obtain reliable results. However, there is currently no generally accepted methodology for RAG evaluation despite a growing interest in this technology. In this paper, we propose a first blueprint of a methodology for a sound and reliable evaluation of RAG systems and demonstrate its applicability on a real-world software engineering research task: the validation of configuration dependencies across software technologies. In summary, we make two novel contributions: (i) a novel, reusable methodological design for evaluating RAG systems, including a demonstration that represents a guideline, and (ii) a RAG system, which has been developed following this methodology, that achieves the highest accuracy in the field of dependency validation. For the blueprint's demonstration, the key insights are the crucial role of choosing appropriate baselines and metrics, the necessity for systematic RAG refinements derived from qualitative failure analysis, as well as the reporting practices of key design decisions to foster replication and evaluation.

Efficient Stimuli Generation using Reinforcement Learning in Design Verification

May 30, 2024

Abstract: The increasing design complexity of System-on-Chips (SoCs) has led to significant verification challenges, particularly in meeting coverage targets in a timely manner. At present, coverage closure is heavily dependent on constrained random and coverage-driven verification methodologies where the randomized stimuli are bounded to verify certain scenarios and to reach coverage goals. This process is said to be exhaustive and to consume a lot of project time. In this paper, a novel methodology is proposed to generate efficient stimuli with the help of Reinforcement Learning (RL) to reach the maximum code coverage of the Design Under Verification (DUV). Additionally, an automated framework is created using metamodeling to generate a SystemVerilog testbench and an RL environment for any given design. The proposed approach is applied to various designs, and the results show that the RL agent provides effective stimuli to achieve code coverage faster in comparison with baseline random simulations. Furthermore, various RL agents and reward schemes are analyzed in our work.

Improving Simulation Regression Efficiency using a Machine Learning-based Method in Design Verification

May 24, 2024

Abstract: Verification throughput is becoming a major bottleneck, since the complexity and size of SoC designs are still increasing. Simply adding more CPU cores and running more tests in parallel no longer scales. This paper discusses various methods of improving verification throughput: ranking and the new machine learning (ML) based technology introduced by Cadence, i.e., Xcelium ML. Both methods aim at getting comparable coverage in less CPU time by applying more efficient stimulus. Ranking selects the specific seeds that produced the largest coverage in previous simulations, while Xcelium ML generates optimized patterns by finding correlations between randomization points and the coverage achieved in previous regressions. Quantified results as well as the pros and cons of each approach are discussed in this paper using the example of three actual industry projects. Both Xcelium ML and ranking consistently gave comparable compression and speedup factors of around 3. However, the optimized ML-based regressions simulated new random scenarios, occasionally producing a coverage regain of more than 100%. Finally, a methodology is proposed to use Xcelium ML efficiently throughout product development.
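As a rough illustration of the ranking idea described in this abstract, the sketch below greedily orders seeds by how much new coverage each adds on top of the seeds already chosen. This is an assumption about how such ranking could work, not the paper's procedure or any Cadence tool behavior; the function name `rank_seeds` and the representation of coverage as sets of bin names are hypothetical.

```python
def rank_seeds(coverage_by_seed: dict[int, set[str]]) -> list[int]:
    """Greedily order seeds by incremental coverage gain.

    coverage_by_seed maps a seed to the coverage bins it hit in a
    previous regression (hypothetical data layout). Seeds that add no
    new bins are dropped from the ranked list.
    """
    covered: set[str] = set()
    remaining = dict(coverage_by_seed)
    ranked: list[int] = []
    while remaining:
        # Pick the seed with the largest gain; ties go to the lowest seed.
        best = max(remaining, key=lambda seed: (len(remaining[seed] - covered), -seed))
        gain = remaining[best] - covered
        if not gain:
            break  # No remaining seed contributes new coverage.
        ranked.append(best)
        covered |= gain
        del remaining[best]
    return ranked
```

Under these assumptions, rerunning only the ranked seeds reproduces the coverage of the full previous regression with fewer simulations, which is the compression effect the abstract refers to.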
