Sebastian Sartor

Winning Through Simplicity: Autonomous Car Design for Formula Student

Jun 19, 2024

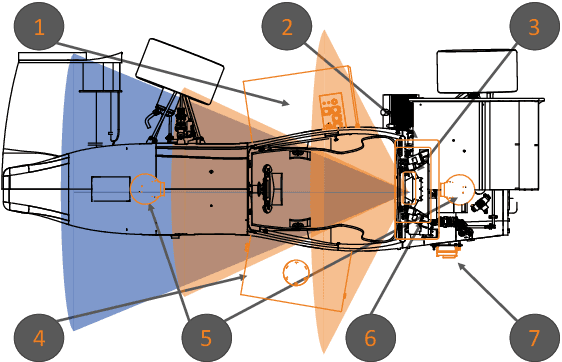

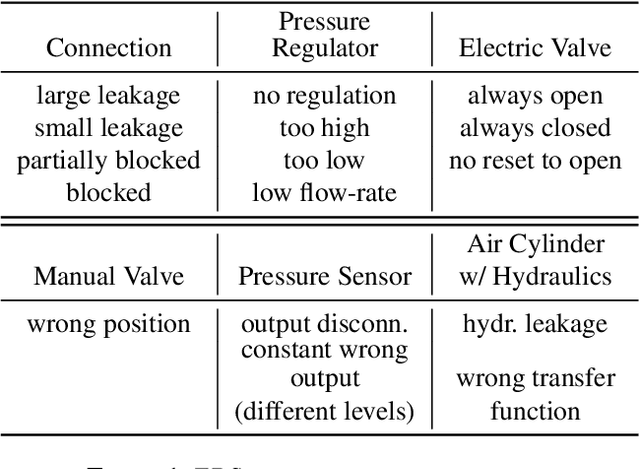

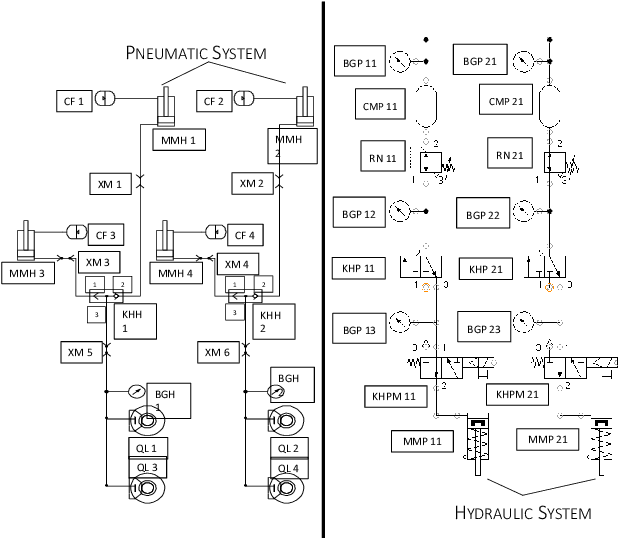

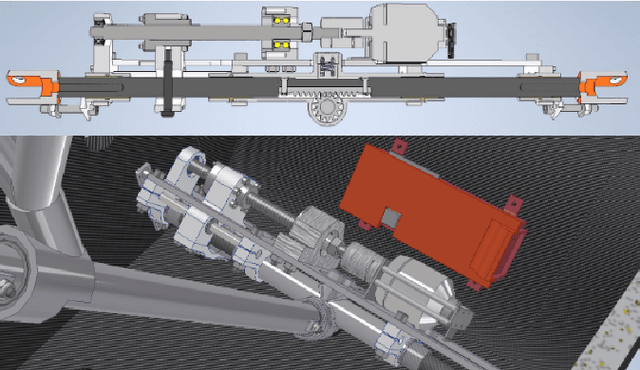

Abstract: This paper presents the design of an autonomous race car that was designed, developed, and built by the Elefant Racing team at the University of Bayreuth. The system was created to compete in the Formula Student Driverless competition. Its primary focus is on the Acceleration track, a straight 75-meter-long course, and the Skidpad track, which comprises two circles forming a figure eight. Additionally, it is experimentally capable of competing in the Autocross and Trackdrive events, which feature tracks with previously unknown straights and curves. The paper details the hardware, software, and sensor setup employed during the 2020/2021 season. Despite being developed by a small team with limited computer science expertise, the design won the Formula Student East Engineering Design award. Emphasizing simplicity and efficiency, the team employed streamlined techniques to achieve this success.

Neural Scaling Laws for Embodied AI

May 22, 2024

Abstract: Scaling laws have driven remarkable progress across machine learning domains like language modeling and computer vision. However, the exploration of scaling laws in embodied AI and robotics has been limited, despite the rapidly increasing usage of machine learning in this field. This paper presents the first study to quantify scaling laws for Robot Foundation Models (RFMs) and the use of LLMs in robotics tasks. Through a meta-analysis spanning 198 research papers, we analyze how key factors like compute, model size, and training data quantity impact model performance across various robotic tasks. Our findings confirm that scaling laws apply to both RFMs and LLMs in robotics, with performance consistently improving as resources increase. The power law coefficients for RFMs closely match those of LLMs in robotics, resembling those found in computer vision and outperforming those for LLMs in the language domain. We also note that these coefficients vary with task complexity, with familiar tasks scaling more efficiently than unfamiliar ones, emphasizing the need for large and diverse datasets. Furthermore, we highlight the absence of standardized benchmarks in embodied AI. Most studies indicate diminishing returns, suggesting that significant resources are necessary to achieve high performance, posing challenges due to data and computational limitations. Finally, as models scale, we observe the emergence of new capabilities, particularly related to data and model size.
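For readers unfamiliar with the term, a power-law coefficient of the kind this abstract refers to can be illustrated with a small sketch. The abstract does not state how the paper fits its coefficients, so the following is only a generic illustration: it assumes a relation of the form y = a * x**b and estimates a and b by least squares in log-log space. The function name `fit_power_law` and all data values are hypothetical and synthetic, not results from the paper.

```python
import math

def fit_power_law(xs, ys):
    """Fit y = a * x**b by ordinary least squares on (log x, log y).

    Assumes all xs and ys are strictly positive.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((u - mean_x) ** 2 for u in lx)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    b = sxy / sxx                        # slope in log-log space = exponent
    a = math.exp(mean_y - b * mean_x)    # intercept in log-log space = log(a)
    return a, b

# Synthetic, made-up data: error falls as resources grow (exponent -0.1).
compute = [1e0, 1e1, 1e2, 1e3, 1e4]
loss = [2.0 * c ** -0.1 for c in compute]

a, b = fit_power_law(compute, loss)

# With a negative exponent, every 10x increase in the resource multiplies
# the error by the same factor 10**b, which is what diminishing returns
# looks like in absolute terms.
factor_per_10x = 10 ** b
```

In this synthetic example, each tenfold increase in compute shrinks the loss by the same constant factor, so the absolute improvement gets smaller at every step. That is the sense in which a power law implies diminishing returns.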
