Sean Gillen

Leveraging Reward Gradients For Reinforcement Learning in Differentiable Physics Simulations

Mar 06, 2022

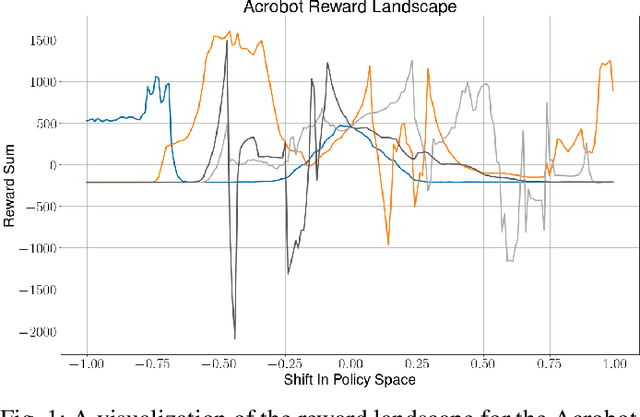

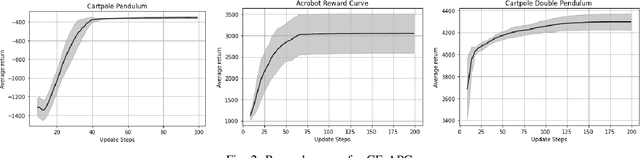

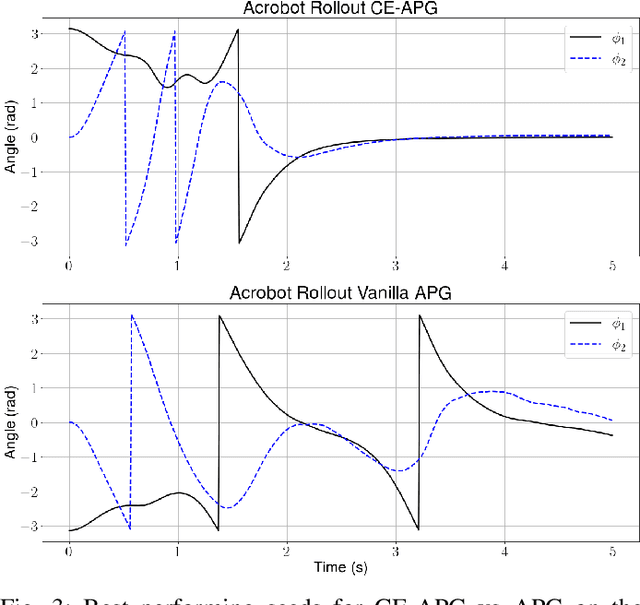

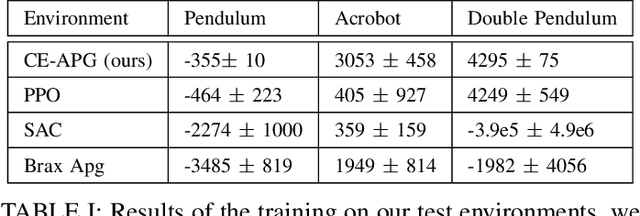

Abstract: In recent years, fully differentiable rigid body physics simulators have been developed, which can be used to simulate a wide range of robotic systems. In the context of reinforcement learning for control, these simulators theoretically allow algorithms to be applied directly to analytic gradients of the reward function. However, to date, these gradients have proved extremely challenging to use, and are outclassed by algorithms using no gradient information at all. In this work we present a novel algorithm, cross entropy analytic policy gradients, that is able to leverage these gradients to outperform state-of-the-art deep reinforcement learning on a set of challenging nonlinear control problems.

Direct Random Search for Fine Tuning of Deep Reinforcement Learning Policies

Sep 12, 2021

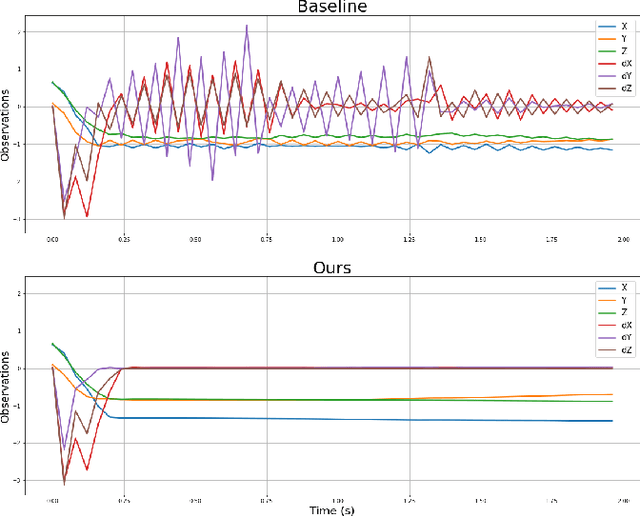

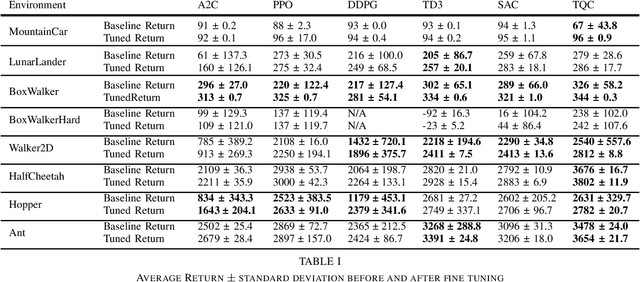

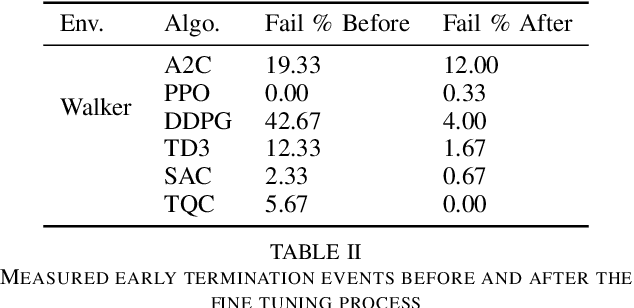

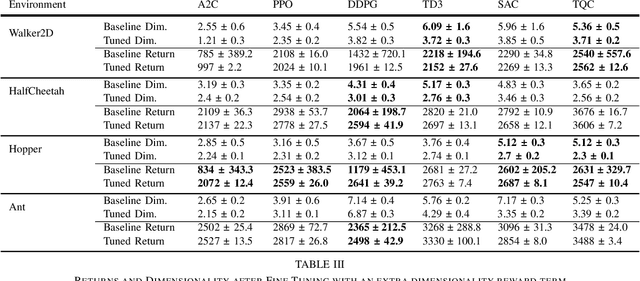

Abstract: Researchers have demonstrated that Deep Reinforcement Learning (DRL) is a powerful tool for finding policies that perform well on complex robotic systems. However, these policies are often unpredictable and can induce highly variable behavior when evaluated with only slightly different initial conditions. Training considerations constrain DRL algorithm designs in that most algorithms must use stochastic policies during training. The resulting policy used during deployment, however, can be, and frequently is, a deterministic one that uses the Maximum Likelihood Action (MLA) at each step. In this work, we show that a direct random search is very effective at fine-tuning DRL policies by directly optimizing them using deterministic rollouts. We illustrate this across a large collection of reinforcement learning environments, using a wide variety of policies obtained from different algorithms. Our results show that this method yields more consistent and higher performing agents on the environments we tested. Furthermore, we demonstrate how this method can be used to extend our previous work on shrinking the dimensionality of the reachable state space of closed-loop systems run under Deep Neural Network (DNN) policies.
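The idea of fine-tuning a policy with random search over deterministic rollouts can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the toy scalar system `x' = x + 0.1*u`, the one-parameter policy `u = -gain * x`, the quadratic cost, and the simple accept-if-better update rule (the paper's actual search procedure may differ).

```python
import random

def rollout_return(gain, x0=1.0, steps=50):
    """Deterministic rollout on a toy scalar system (hypothetical, for illustration).

    Dynamics: x' = x + 0.1 * u, with the deterministic policy u = -gain * x.
    Returns the negative accumulated quadratic cost, so higher is better.
    """
    x, total = x0, 0.0
    for _ in range(steps):
        u = -gain * x
        total -= x * x + 0.01 * u * u
        x = x + 0.1 * u
    return total

def random_search(gain, iters=200, sigma=0.5, seed=0):
    """Perturb the policy parameter at random and keep only improvements."""
    rng = random.Random(seed)
    best = rollout_return(gain)
    for _ in range(iters):
        candidate = gain + rng.gauss(0.0, sigma)
        score = rollout_return(candidate)
        if score > best:  # accept only if the deterministic return improves
            gain, best = candidate, score
    return gain, best

# Start from a "pre-trained" gain and fine-tune it.
initial_gain = 0.5
tuned_gain, tuned_return = random_search(initial_gain)
```

Because every rollout is deterministic, two evaluations of the same parameters give the same return, so an accepted candidate is a genuine improvement rather than a lucky sample.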

Mesh Based Analysis of Low Fractal Dimension Reinforcement Learning Policies

Dec 24, 2020

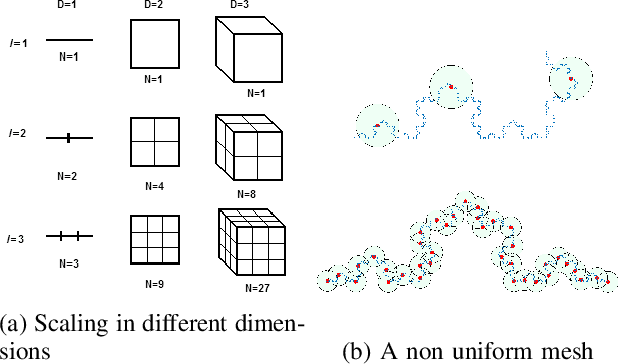

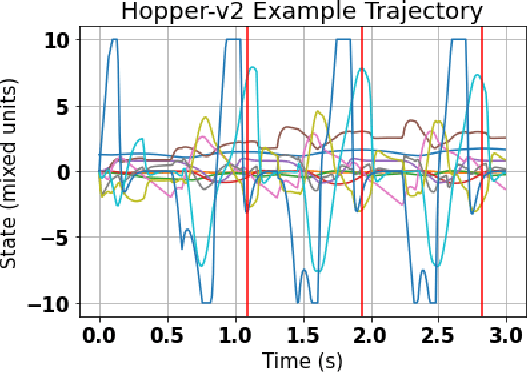

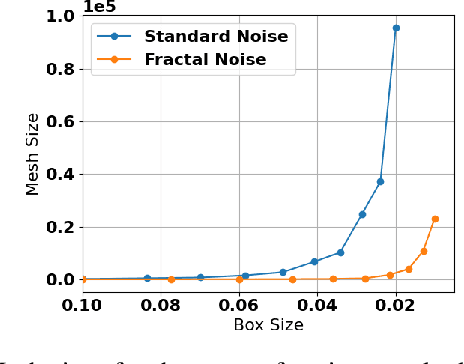

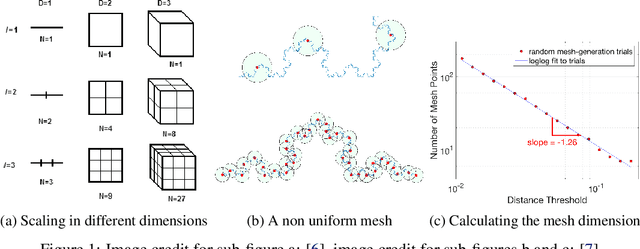

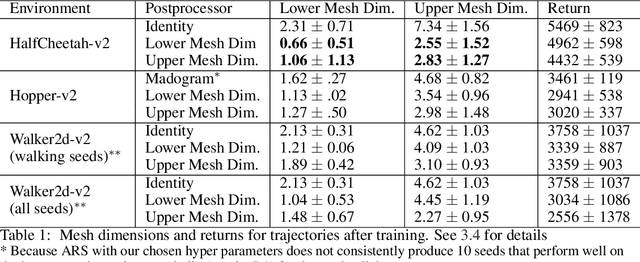

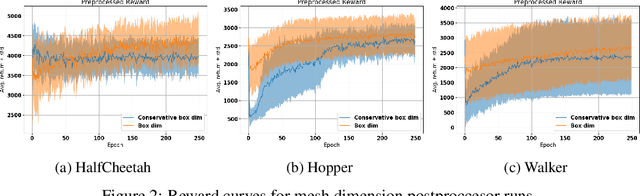

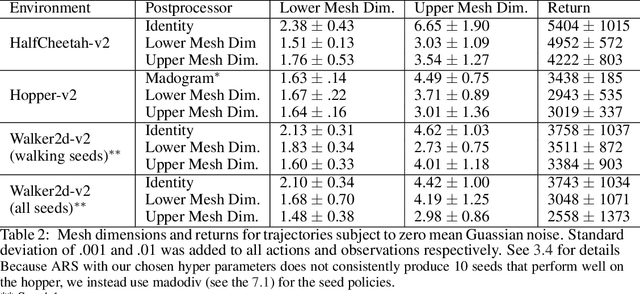

Abstract: In previous work, using a process we call meshing, the reachable state spaces for various continuous and hybrid systems were approximated as a discrete set of states which can then be synthesized into a Markov chain. One of the applications for this approach has been to analyze locomotion policies obtained by reinforcement learning, in a step towards making empirical guarantees about the stability properties of the resulting system. In a separate line of research, we introduced a modified reward function for on-policy reinforcement learning algorithms that utilizes a "fractal dimension" of rollout trajectories. This reward was shown to encourage policies that induce individual trajectories which can be more compactly represented as a discrete mesh. In this work we combine these two threads of research by building meshes of the reachable state space of a system subject to disturbances and controlled by policies obtained with the modified reward. Our analysis shows that the modified policies do produce much smaller reachable meshes. This shows that agents trained with the fractal dimension reward transfer their desirable quality of having a more compact state space to a setting with external disturbances. The results also suggest that the previous work using mesh-based tools to analyze RL policies may be extended to higher dimensional systems or to higher resolution meshes than would have otherwise been possible.
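As a rough illustration of approximating a reachable state space by a discrete set and turning it into a Markov chain, the sketch below bins the states of a toy system into uniform grid cells and estimates transition probabilities from visit counts. The uniform grid, the scalar system `noisy_contraction`, and all function names are assumptions made for this example; the meshing procedure used in the papers may construct its mesh differently.

```python
import random
from collections import defaultdict

def cell(x, eps):
    """Map a scalar state to the index of its grid cell of width eps."""
    return round(x / eps)

def build_mesh_chain(step, x0, eps, n_rollouts=20, horizon=100, seed=0):
    """Estimate a Markov chain over grid cells from simulated rollouts."""
    rng = random.Random(seed)
    counts = defaultdict(lambda: defaultdict(int))
    for _ in range(n_rollouts):
        x = x0
        for _ in range(horizon):
            x_next = step(x, rng)
            counts[cell(x, eps)][cell(x_next, eps)] += 1
            x = x_next
    # Normalize visit counts into per-cell transition probabilities.
    chain = {}
    for src, row in counts.items():
        total = sum(row.values())
        chain[src] = {dst: n / total for dst, n in row.items()}
    return chain

def noisy_contraction(x, rng):
    """Toy closed-loop system with a bounded disturbance (hypothetical)."""
    return 0.8 * x + rng.uniform(-0.1, 0.1)

chain = build_mesh_chain(noisy_contraction, x0=1.0, eps=0.1)
mesh_size = len(chain)  # number of cells with at least one outgoing transition
```

A smaller `mesh_size` at a fixed resolution `eps` corresponds to the "more compact" reachable set the abstract refers to, since fewer cells are needed to cover the states the system actually visits.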

Combining Deep Reinforcement Learning And Local Control For The Acrobot Swing-up And Balance Task

Dec 21, 2020

Abstract: In this work we present a novel extension of soft actor critic, a state-of-the-art deep reinforcement learning algorithm. Our method allows us to combine traditional controllers with learned neural network policies. This combination allows us to leverage both our own domain knowledge and some of the advantages of model-free reinforcement learning. We demonstrate our algorithm by combining a hand-designed linear quadratic regulator with a learned controller for the acrobot problem. We show that our technique outperforms other state-of-the-art reinforcement learning algorithms in this setting.

Explicitly Encouraging Low Fractional Dimensional Trajectories Via Reinforcement Learning

Dec 21, 2020

Abstract: A key limitation in using various modern methods of machine learning in developing feedback control policies is the lack of appropriate methodologies to analyze their long-term dynamics, in terms of making any sort of guarantees (even statistically) about robustness. This is largely due to the so-called curse of dimensionality, combined with the black-box nature of the resulting control policies themselves. This paper aims at the first of these issues. Although the full state space of a system may be quite large in dimensionality, it is a common feature of most model-based control methods that the resulting closed-loop systems demonstrate dominant dynamics that are rapidly driven to some lower-dimensional subspace within. In this work we argue that the dimensionality of this subspace is captured by tools from fractal geometry, namely various notions of a fractional dimension. We then show that the dimensionality of trajectories induced by model-free reinforcement learning agents can be influenced by adding a post-processing function to the agent's reward signal. We verify that the dimensionality reduction is robust to noise being added to the system and show that the modified agents are actually more robust to noise and push disturbances in general for the systems we examined.
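One standard notion of fractional dimension is the box-counting dimension: cover the trajectory with boxes of side `eps`, count the occupied boxes `N(eps)`, and estimate the slope of `log N(eps)` against `log(1/eps)`. The sketch below is a minimal estimator under that assumption; the abstract does not say which notion of dimension the paper uses, and the function names and test point sets are hypothetical.

```python
import math

def box_count(points, eps):
    """Number of distinct grid boxes of side eps occupied by the points."""
    return len({tuple(math.floor(c / eps) for c in p) for p in points})

def box_counting_dimension(points, sizes):
    """Least-squares slope of log N(eps) versus log(1/eps)."""
    xs = [math.log(1.0 / e) for e in sizes]
    ys = [math.log(box_count(points, e)) for e in sizes]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

# A trajectory confined to a line inside a 2D state space ...
line = [(i / 10000, i / 10000) for i in range(10000)]
# ... versus one that fills a 2D region.
square = [(i / 100, j / 100) for i in range(100) for j in range(100)]

line_dim = box_counting_dimension(line, [0.5, 0.25, 0.125, 0.0625])
square_dim = box_counting_dimension(square, [0.5, 0.25, 0.125])
```

Both point sets live in the same two-dimensional state space, but the estimate for the line is close to 1 while the estimate for the filled square is close to 2, which is the kind of distinction a dimension-based reward term could exploit.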
