Scott Sorensen

Physics Driven Image Simulation from Commercial Satellite Imagery

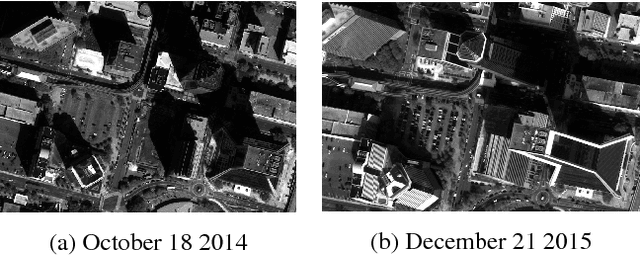

Apr 21, 2025

Abstract:Physics-driven image simulation allows for the modeling and creation of realistic imagery beyond what is afforded by typical rendering pipelines. We aim to automatically generate a physically realistic scene for simulation of a given region, using satellite imagery to model the scene geometry, drive material estimates, and populate the scene with dynamic elements. We present automated techniques that use satellite imagery throughout the simulated scene to expedite scene construction and decrease manual overhead. Our technique does not use lidar, enabling simulations that could not be constructed previously. To develop a 3D scene, we model the various components of the real location: addressing the terrain, modeling man-made structures, and populating the scene with smaller elements such as vegetation and vehicles. To create the scene, we begin with a Digital Surface Model, which serves as the basis for scene geometry and allows us to reason about the real location in a common 3D frame of reference. These simulated scenes can provide increased fidelity with less manual intervention for novel locations on Earth, and can facilitate algorithm development and processing pipelines for imagery ranging from UV to LWIR ($200\,\mathrm{nm}$ to $20\,\mu\mathrm{m}$).
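The abstract does not say how the Digital Surface Model is turned into scene geometry. As a minimal sketch, assuming the DSM is a regular grid of heights with a known ground sample distance, one common way to obtain geometry is to triangulate each grid cell. The function name `dsm_to_mesh`, the `gsd` parameter, and the toy height values are hypothetical and chosen only for illustration.

```python
def dsm_to_mesh(dsm, gsd):
    """Convert a row-major height grid into vertices and triangle indices.

    dsm: list of equal-length rows of heights (meters).
    gsd: assumed grid spacing between samples (meters).
    """
    rows, cols = len(dsm), len(dsm[0])
    # One vertex per DSM sample: x follows columns, y follows rows, z is height.
    vertices = [(c * gsd, r * gsd, float(dsm[r][c]))
                for r in range(rows) for c in range(cols)]
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of the first corner of this grid cell
            # Split each cell into two triangles along one diagonal.
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles


# Toy 2 x 3 height grid (made-up values) sampled every 0.5 m.
toy_dsm = [[10.0, 10.5, 11.0],
           [10.2, 14.0, 11.1]]
vertices, triangles = dsm_to_mesh(toy_dsm, gsd=0.5)
print(len(vertices), "vertices,", len(triangles), "triangles")
```

A heightmap mesh like this places every later element (structures, vegetation, vehicles) in the same 3D frame, which is the role the abstract assigns to the DSM.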

Learning Dense Stereo Matching for Digital Surface Models from Satellite Imagery

Dec 11, 2018

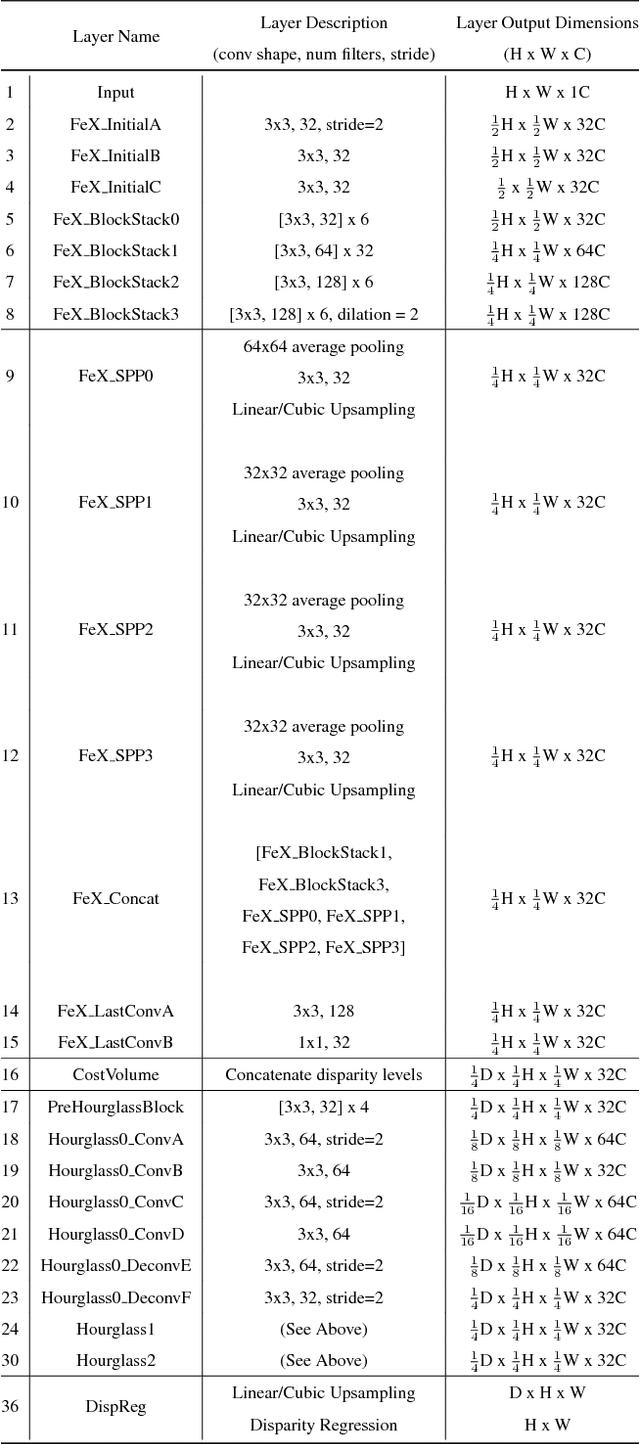

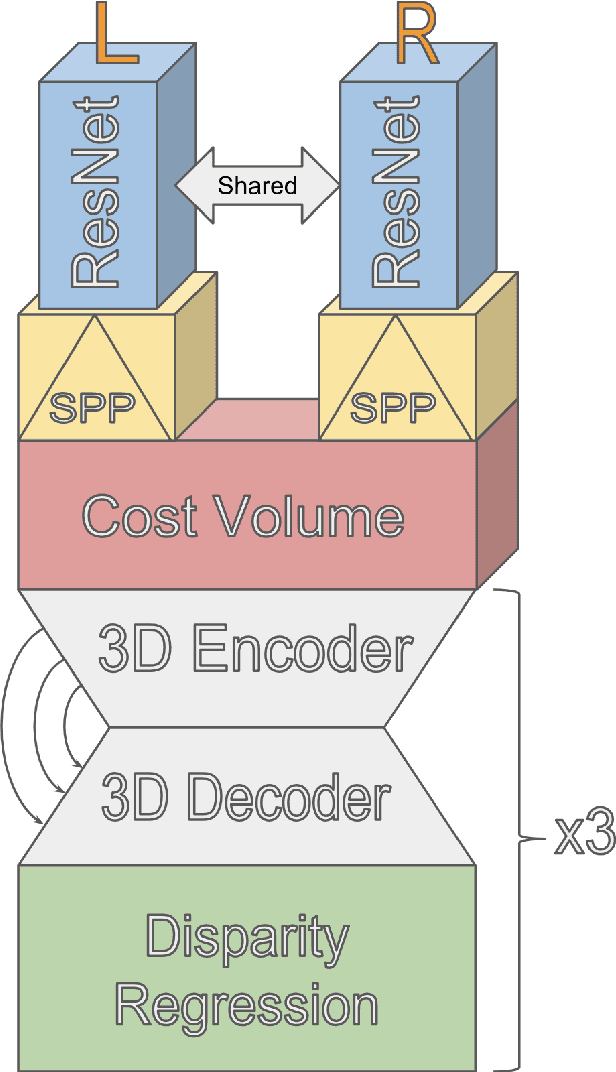

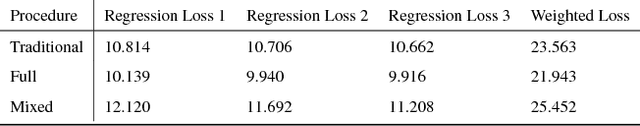

Abstract:Digital Surface Model generation from satellite imagery is a difficult task that has been largely overlooked by the deep learning community. Stereo reconstruction techniques developed for terrestrial systems, including self-driving cars, do not translate well to satellite imagery, where image pairs vary considerably. In this work we present a neural network tailored for Digital Surface Model generation and a ground-truthing and training scheme that maximizes the use of available hardware, and we compare the results to existing methods. The resulting models are smooth, preserve boundaries, and enable further processing. This represents one of the first attempts at leveraging deep learning in this domain.

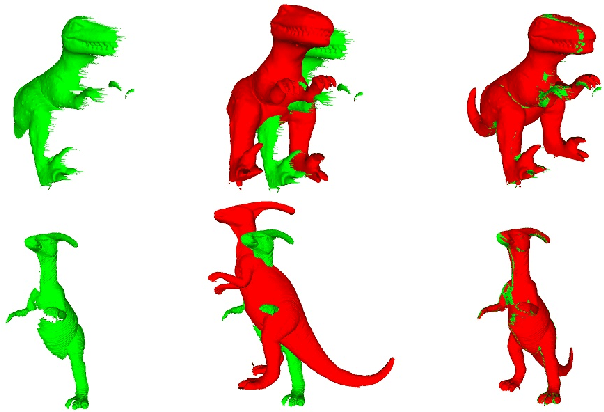

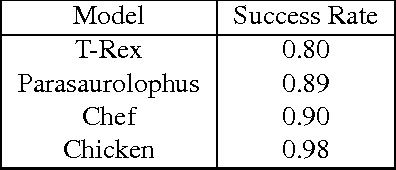

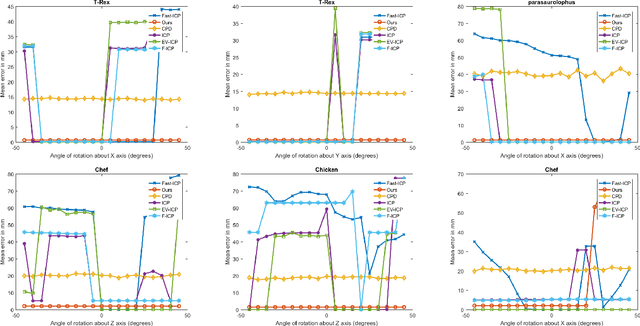

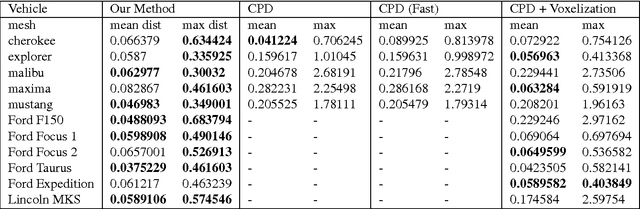

Robust Shape Registration using Fuzzy Correspondences

Feb 18, 2017

Abstract:Shape registration is the process of aligning one 3D model to another. Most previous methods for aligning shapes with no known correspondences attempt to solve for both the transformation and the correspondences iteratively. We present a shape registration approach that solves for the transformation using fuzzy correspondences to maximize the overlap between the given shape and the target shape. A coarse-to-fine approach with the Levenberg-Marquardt method is used for optimization. Real and synthetic experiments show that our approach is robust and outperforms other state-of-the-art methods when point clouds are noisy, sparse, and of non-uniform density. Experiments also show that our method is more robust to initialization and can handle larger scale changes and rotations than other methods. Finally, we show that the approach can be used for 2D-3D alignment via ray-point alignment.
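The abstract does not define its fuzzy correspondences or its overlap measure. The sketch below shows one plausible reading, under the assumption that each source point is softly matched to every target point with Gaussian weights and that overlap is the mean summed kernel response. The names `fuzzy_weights`, `overlap`, and the `sigma` parameter are hypothetical, and the Levenberg-Marquardt optimization over the transformation is not shown.

```python
import math


def sq_dist(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def fuzzy_weights(source, target, sigma):
    """Soft matches: weights[i][j] is the degree to which source[i] matches target[j].

    Each row is a Gaussian kernel over distances, normalized to sum to 1.
    """
    weights = []
    for p in source:
        row = [math.exp(-sq_dist(p, q) / (2.0 * sigma ** 2)) for q in target]
        total = sum(row)
        # Fall back to uniform weights if every kernel value underflows to zero.
        weights.append([w / total if total > 0.0 else 1.0 / len(row) for w in row])
    return weights


def overlap(source, target, sigma):
    """Assumed overlap score: mean over source points of the summed kernel response."""
    score = sum(math.exp(-sq_dist(p, q) / (2.0 * sigma ** 2))
                for p in source for q in target)
    return score / len(source)


# Toy asymmetric shape (made-up points) and a copy shifted along x.
target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
shifted = [(x + 0.7, y, z) for x, y, z in target]

weights = fuzzy_weights(target, target, sigma=0.5)
print("aligned overlap:", overlap(target, target, sigma=0.5))
print("shifted overlap:", overlap(shifted, target, sigma=0.5))
```

In a coarse-to-fine scheme of this kind, a large `sigma` would give broad, forgiving matches early on, and shrinking it would sharpen the correspondences as the alignment improves. An optimizer would adjust the transformation applied to the source points to increase the overlap score.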
