Scott Heidbrink

Using Neural Architecture Search for Improving Software Flaw Detection in Multimodal Deep Learning Models

Sep 22, 2020

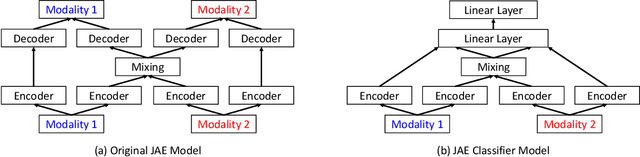

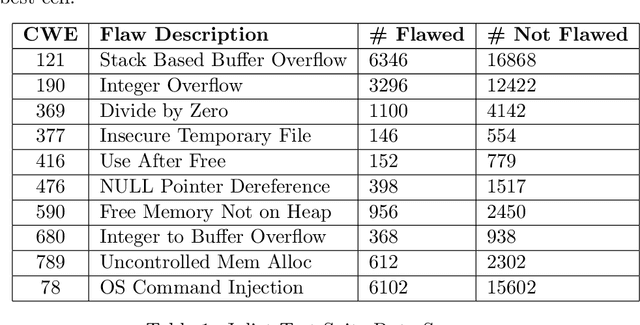

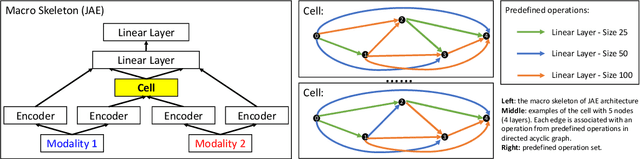

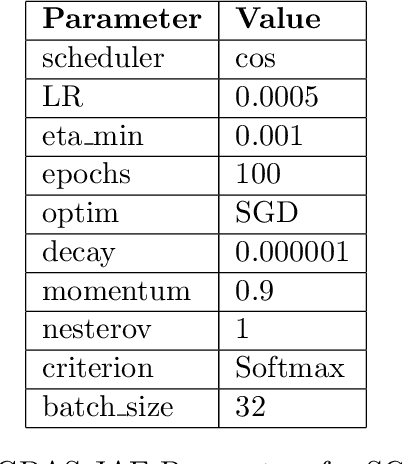

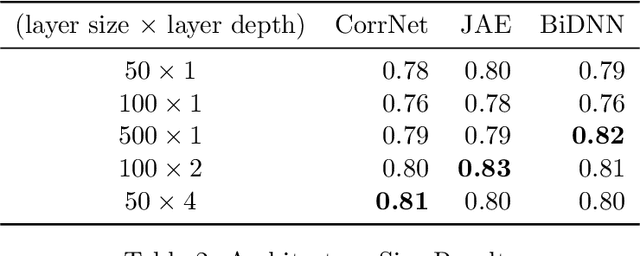

Abstract: Software flaw detection using multimodal deep learning models has been demonstrated as a very competitive approach on benchmark problems. In this work, we demonstrate that even better performance can be achieved using neural architecture search (NAS) combined with multimodal learning models. We adapt a NAS framework aimed at investigating image classification to the problem of software flaw detection and demonstrate improved results on the Juliet Test Suite, a popular benchmarking data set for measuring performance of machine learning models in this problem domain.

Multimodal Deep Learning for Flaw Detection in Software Programs

Sep 09, 2020

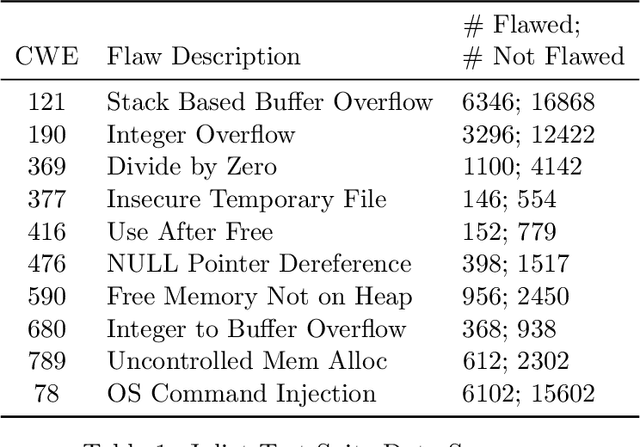

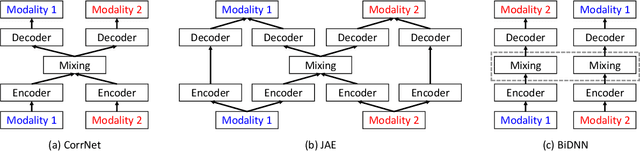

Abstract: We explore the use of multiple deep learning models for detecting flaws in software programs. Current standard approaches for flaw detection rely on a single representation of a software program (e.g., source code or a program binary). We illustrate that, by using techniques from multimodal deep learning, we can simultaneously leverage multiple representations of software programs to improve flaw detection over single-representation analyses. Specifically, we adapt three deep learning models from the multimodal learning literature for use in flaw detection and demonstrate how these models outperform traditional deep learning models. We present results on detecting software flaws using the Juliet Test Suite and the Linux Kernel.
