Sauman Das

Tree Learning: Optimal Algorithms and Sample Complexity

Feb 09, 2023

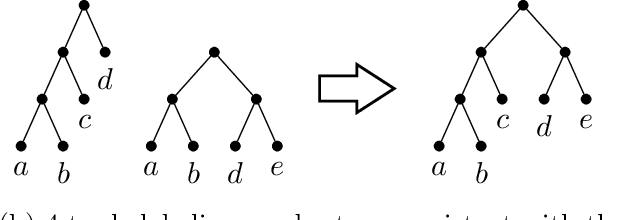

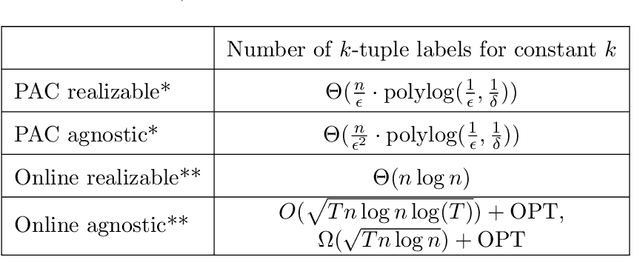

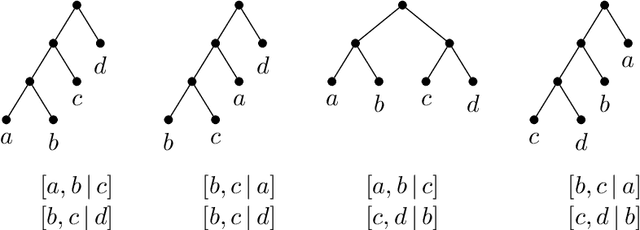

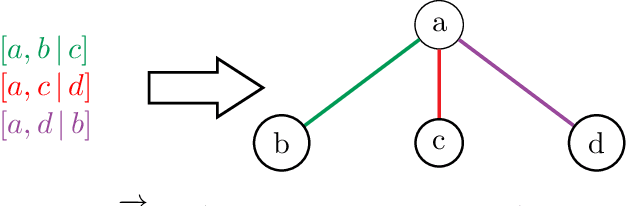

Abstract: We study the problem of learning a hierarchical tree representation of data from labeled samples drawn from an arbitrary (and possibly adversarial) distribution. Consider a collection of data tuples labeled according to their hierarchical structure. The smallest number of such tuples needed to accurately label subsequent tuples is of interest for data collection in machine learning. We present optimal sample complexity bounds for this problem in several learning settings, including (agnostic) PAC learning and online learning. Our results are based on tight bounds on the Natarajan and Littlestone dimensions of the associated problem. The corresponding tree classifiers can be constructed efficiently in near-linear time.

Optimizing Prediction of MGMT Promoter Methylation from MRI Scans using Adversarial Learning

Jan 27, 2022

Abstract: Glioblastoma Multiforme (GBM) is a malignant brain cancer forming around 48% of all brain and Central Nervous System (CNS) cancers. It is estimated that over 13,000 deaths occur annually in the US due to GBM, making it crucial to have early diagnosis systems that can lead to predictable and effective treatment. The most common treatment after GBM diagnosis is chemotherapy, which works by inducing apoptosis in rapidly dividing cells. However, this form of treatment is not effective when the MGMT promoter sequence is methylated, and instead leads to severe side effects that decrease patient survivability. Therefore, it is important to be able to identify the MGMT promoter methylation status through non-invasive magnetic resonance imaging (MRI) based machine learning (ML) models. This is accomplished using the Brain Tumor Segmentation (BraTS) 2021 dataset, which was recently used for an international Kaggle competition. We developed four primary models (two radiomic models and two CNN models), each solving the binary classification task with progressive improvements. We built a novel ML model, termed the Intermediate State Generator, which was used to normalize the slice thicknesses of all MRI scans. With further improvements, our best model achieved performance significantly ($p < 0.05$) better than the best-performing Kaggle model, with a 6% increase in average cross-validation accuracy. This improvement could potentially lead to a more informed choice of chemotherapy as a treatment option, prolonging the lives of thousands of patients with GBM each year.
