Sarvo Madhavan

PatentsView-Evaluation: Evaluation Datasets and Tools to Advance Research on Inventor Name Disambiguation

Jan 09, 2023

Abstract: We present PatentsView-Evaluation, a Python package that enables researchers to evaluate the performance of inventor name disambiguation systems such as PatentsView.org. The package includes benchmark datasets and evaluation tools, and aims to advance research on inventor name disambiguation by providing access to high-quality evaluation data and improving evaluation standards.

Estimating the Performance of Entity Resolution Algorithms: Lessons Learned Through PatentsView.org

Oct 03, 2022

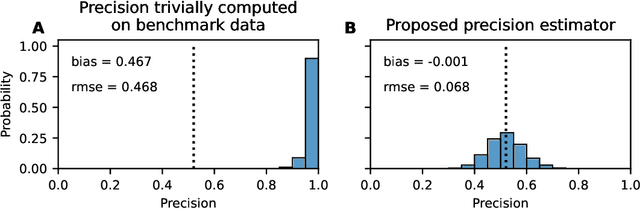

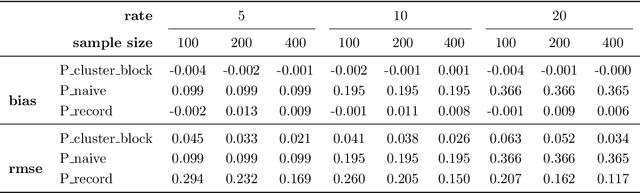

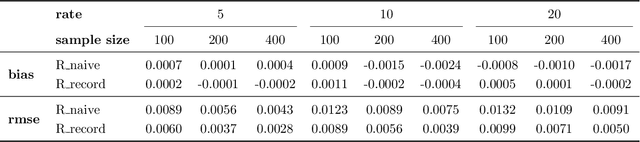

Abstract: This paper introduces a novel evaluation methodology for entity resolution algorithms. It is motivated by PatentsView.org, a U.S. Patent and Trademark Office patent data exploration tool that disambiguates patent inventors using an entity resolution algorithm. We provide a data collection methodology and tailored performance estimators that account for sampling biases. Our approach is simple, practical and principled -- key characteristics that allow us to paint the first representative picture of PatentsView's disambiguation performance. This approach is used to inform PatentsView's users of the reliability of the data and to allow the comparison of competing disambiguation algorithms.
