Sarv Priya

Motion-compensated cardiac MRI using low-rank diffeomorphic flow (DMoCo)

May 07, 2025

Abstract: We introduce an unsupervised motion-compensated image reconstruction algorithm for free-breathing and ungated 3D cardiac magnetic resonance imaging (MRI). We express the image volume corresponding to each specific motion phase as the deformation of a single static image template. The main contribution of the work is the low-rank model for the compact joint representation of the family of diffeomorphisms, parameterized by the motion phases. The diffeomorphism at a specific motion phase is obtained by integrating a parametric velocity field along a path connecting the reference template phase to the motion phase. The velocity field at different phases is represented using a low-rank model. The static template and the low-rank motion model parameters are learned directly from the k-space data in an unsupervised fashion. The more constrained motion model is observed to offer improved recovery compared to current motion-resolved and motion-compensated algorithms for free-breathing 3D cine MRI.
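The idea of a low-rank velocity field integrated into a deformation can be illustrated with a small 1D toy. This is only a sketch under stated assumptions, not the authors' implementation: the spatial basis `U`, the coefficients `A`, the forward-Euler integrator, and the helper names are all hypothetical, and the actual method works on 3D volumes and learns these quantities from k-space data.

```python
import numpy as np

def low_rank_velocity(U, A):
    """Velocity fields along the path: column j is v(., s_j) = U @ A[:, j]."""
    return U @ A

def integrate_flow(x, V):
    """Forward-Euler integration of d(phi)/ds = v(phi, s), starting at the identity."""
    phi = x.copy()
    ds = 1.0 / V.shape[1]
    for j in range(V.shape[1]):
        # Sample the velocity at the current (displaced) positions.
        phi = phi + ds * np.interp(phi, x, V[:, j])
    return phi

def warp(template, x, phi):
    """Deform the template by resampling it at the mapped coordinates."""
    return np.interp(phi, x, template)

# Hypothetical toy setup: 64 grid points, rank-2 velocity model, 8 path steps.
x = np.linspace(0.0, 1.0, 64)
U = np.stack([np.sin(np.pi * x), np.sin(2 * np.pi * x)], axis=1)   # (64, 2) spatial basis
s = np.linspace(0.0, 1.0, 8)
A = 0.05 * np.stack([np.cos(2 * np.pi * s), np.sin(2 * np.pi * s)], axis=0)  # (2, 8)

V = low_rank_velocity(U, A)                  # (64, 8), rank at most 2
phi = integrate_flow(x, V)                   # deformation at the end of the path
template = np.exp(-((x - 0.5) / 0.1) ** 2)   # static template (assumed shape)
frame = warp(template, x, phi)               # image at the target motion phase
```

Because the velocity is small and smooth, each Euler step is a monotone map, so the composed map `phi` stays invertible in this toy; that is the 1D analogue of the diffeomorphism property the abstract relies on.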

Motion Compensated Unsupervised Deep Learning for 5D MRI

Sep 08, 2023

Abstract: We propose an unsupervised deep learning algorithm for the motion-compensated reconstruction of 5D cardiac MRI data from 3D radial acquisitions. Ungated free-breathing 5D MRI simplifies scan planning, improves patient comfort, and offers several clinical benefits over breath-held 2D exams, including isotropic spatial resolution and the ability to reslice the data to arbitrary views. However, current reconstruction algorithms for 5D MRI require very long computation times, and their outcome depends heavily on how uniformly the acquired data are binned into different physiological phases. The proposed algorithm is a more data-efficient alternative to current motion-resolved reconstructions. This motion-compensated approach models the data in each cardiac/respiratory bin as Fourier samples of the deformed version of a 3D image template. The deformation maps are modeled by a convolutional neural network driven by the physiological phase information. The deformation maps and the template are then jointly estimated from the measured data. The cardiac and respiratory phases are estimated from 1D navigators using an auto-encoder. The proposed algorithm is validated on 5D bSSFP datasets acquired from two subjects.
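The forward model described in this abstract, where each bin's data are Fourier samples of a deformed template, can be sketched in 1D as follows. Everything here is an illustrative assumption: a Cartesian FFT with a random sampling mask stands in for the 3D radial acquisition, the deformations are fixed by hand rather than produced by a convolutional network, and the function names are hypothetical.

```python
import numpy as np

def warp(template, x, phi):
    """Deform the template by resampling it at the mapped coordinates."""
    return np.interp(phi, x, template)

def forward(template, x, phi, mask):
    """Undersampled Fourier samples of the deformed template for one bin."""
    return mask * np.fft.fft(warp(template, x, phi), norm="ortho")

def data_loss(template, x, phis, masks, ys):
    """Sum over bins of the squared error between predicted and measured samples."""
    return sum(
        np.sum(np.abs(forward(template, x, p, m) - y) ** 2)
        for p, m, y in zip(phis, masks, ys)
    )

# Hypothetical toy data: one template, three motion bins.
x = np.linspace(0.0, 1.0, 64)
template = np.exp(-((x - 0.5) / 0.1) ** 2)
phis = [x + 0.02 * b * np.sin(np.pi * x) for b in range(3)]   # assumed deformations

rng = np.random.default_rng(0)
masks = [rng.random(64) < 0.5 for _ in range(3)]   # a different sampling pattern per bin
for m in masks:
    m[0] = True                                    # always keep the DC sample

ys = [forward(template, x, p, m) for p, m in zip(phis, masks)]  # simulated measurements

loss_true = data_loss(template, x, phis, masks, ys)          # consistent template
loss_wrong = data_loss(0.5 * template, x, phis, masks, ys)   # mismatched template
```

In a joint estimation scheme of the kind the abstract describes, a loss like `data_loss` would be minimized over both the template and the parameters that generate the deformations; this sketch only evaluates it.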

Joint alignment and reconstruction of multislice dynamic MRI using variational manifold learning

Nov 21, 2021

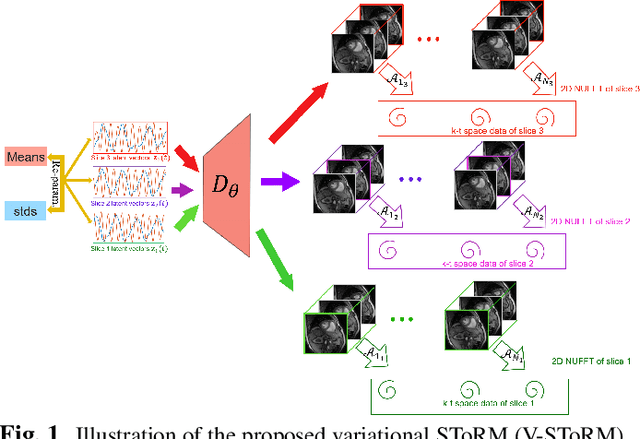

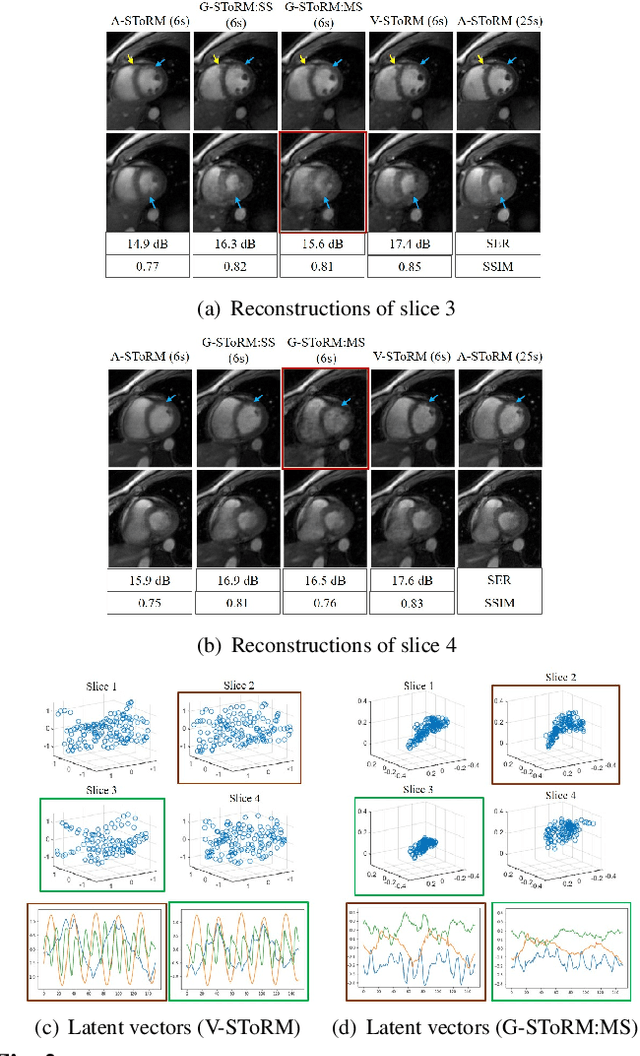

Abstract: Free-breathing cardiac MRI schemes are emerging as competitive alternatives to breath-held cine MRI protocols, enabling applicability to pediatric and other population groups that cannot hold their breath. Because the data from the slices are acquired sequentially, the cardiac/respiratory motion patterns may be different for each slice; current free-breathing approaches perform independent recovery of each slice. In addition to not being able to exploit the inter-slice redundancies, manual intervention or sophisticated post-processing methods are needed to align the images post-recovery for quantification. To overcome these challenges, we propose an unsupervised variational deep manifold learning scheme for the joint alignment and reconstruction of multislice dynamic MRI. The proposed scheme jointly learns the parameters of the deep network as well as the latent vectors for each slice, which capture the motion-induced dynamic variations, from the k-t space data of the specific subject. The variational framework minimizes the non-uniqueness in the representation, thus offering improved alignment and reconstructions.