Sarah Walker

Learnable Adaptive Cosine Estimator (LACE) for Image Classification

Oct 15, 2021

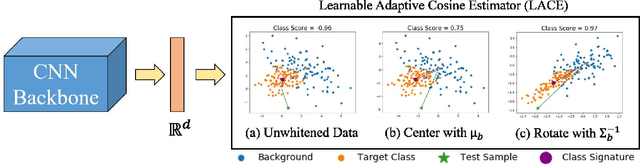

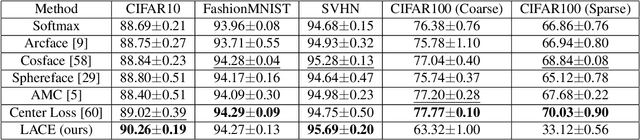

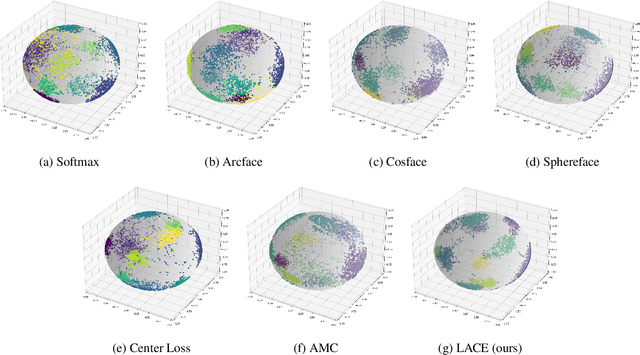

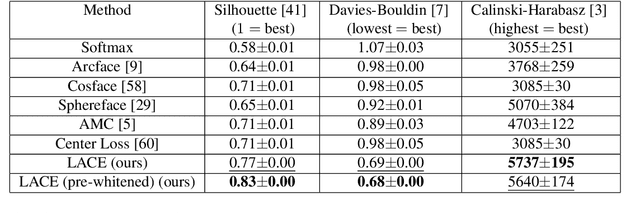

Abstract: In this work, we propose a new loss to improve feature discriminability and classification performance. Motivated by the adaptive cosine/coherence estimator (ACE), our proposed method incorporates angular information that is inherently learned by artificial neural networks. Our learnable ACE (LACE) transforms the data into a new "whitened" space that improves inter-class separability and intra-class compactness. We compare LACE to alternative state-of-the-art softmax-based and feature regularization approaches. Our results show that the proposed method can serve as a viable alternative to cross entropy and angular softmax approaches. Our code is publicly available: https://github.com/GatorSense/LACE.
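To make the "whitened" angular score concrete, here is a minimal NumPy sketch of the classical ACE statistic that the abstract cites as motivation. It is not the LACE implementation from the linked repository. The function name `ace_score`, the fixed background mean and covariance, and the choice to leave the target signature uncentered are all assumptions made for illustration; in LACE these quantities are described as learnable.

```python
import numpy as np

def ace_score(x, target, mean, cov):
    """Cosine similarity between x and a target signature after whitening.

    Hypothetical helper: `mean` and `cov` stand in for background statistics
    that, per the abstract, LACE would learn rather than fix in advance.
    """
    # Factor the inverse covariance as inv(cov) = L @ L.T, so W = L.T whitens.
    L = np.linalg.cholesky(np.linalg.inv(cov))
    W = L.T
    x_w = W @ (x - mean)   # whitened, mean-centered sample
    s_w = W @ target       # whitened target signature (assumed uncentered)
    return float(x_w @ s_w / (np.linalg.norm(x_w) * np.linalg.norm(s_w)))

# With zero mean and identity covariance the score reduces to plain cosine similarity.
mean = np.zeros(2)
cov = np.eye(2)
aligned = ace_score(np.array([3.0, 0.0]), np.array([1.0, 0.0]), mean, cov)
orthogonal = ace_score(np.array([0.0, 2.0]), np.array([1.0, 0.0]), mean, cov)
```

Because the score is a cosine, it depends only on the angle between the whitened vectors, which is one way to read the abstract's emphasis on angular information.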

Explainable Systematic Analysis for Synthetic Aperture Sonar Imagery

Jan 21, 2021

Abstract: In this work, we present an in-depth, systematic analysis that uses tools such as local interpretable model-agnostic explanations (LIME) (arXiv:1602.04938) and divergence measures to identify which changes lead to improved performance in fine-tuned models for synthetic aperture sonar (SAS) data. We examine the sensitivity to factors in the fine-tuning process such as class imbalance. Our findings not only show an improvement in seafloor texture classification but also provide greater insight into which features play critical roles in improving performance, and they highlight the importance of balanced data when fine-tuning deep learning models for seafloor classification in SAS imagery.

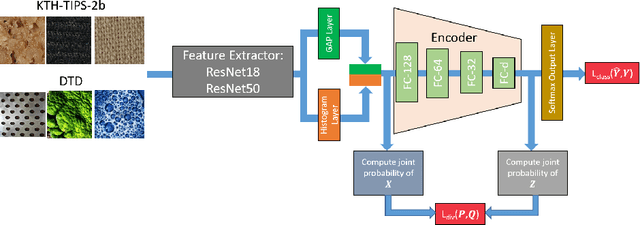

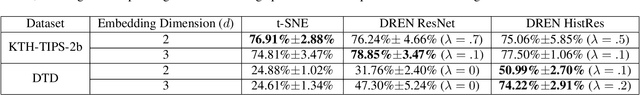

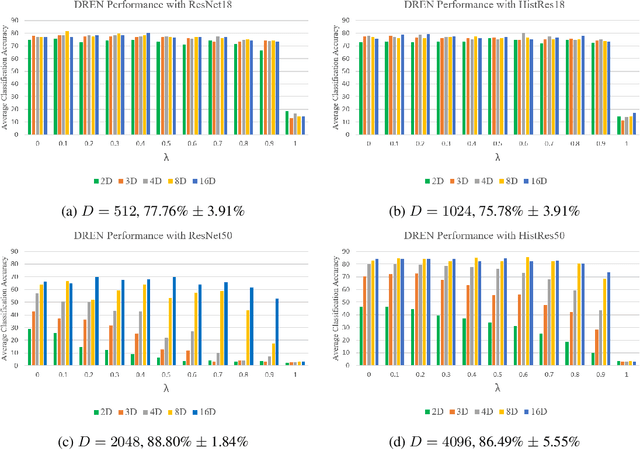

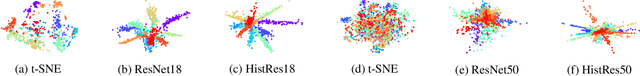

Divergence Regulated Encoder Network for Joint Dimensionality Reduction and Classification

Jan 21, 2021

Abstract: In this paper, we investigate performing joint dimensionality reduction and classification using a novel histogram neural network. Motivated by a popular dimensionality reduction approach, t-Distributed Stochastic Neighbor Embedding (t-SNE), our proposed method incorporates a classification loss computed on samples in a low-dimensional embedding space. We compare the learned sample embeddings against coordinates found by t-SNE in terms of classification accuracy and qualitative assessment. We also explore the use of various divergence measures in the t-SNE objective. The proposed method has several advantages, such as readily embedding out-of-sample points and reducing feature dimensionality while retaining class discriminability. Our results show that the proposed approach maintains or improves classification performance and reveals characteristics of features produced by neural networks that may be helpful for other applications.
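One way to picture a t-SNE-style objective combined with a classification loss is the NumPy sketch below. It is only an illustration under stated assumptions, not the paper's network or training code: the helper names, the precomputed high-dimensional affinity matrix `P`, and the single mixing weight `weight` are all hypothetical, and KL divergence is used here simply because it is the standard t-SNE choice.

```python
import numpy as np

def pairwise_sq_dists(Z):
    """Squared Euclidean distances between all rows of Z."""
    sq = np.sum(Z ** 2, axis=1)
    D = sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T
    return np.maximum(D, 0.0)  # clip tiny negatives from round-off

def student_t_affinities(Y):
    """Low-dimensional affinities q_ij from a Student-t kernel, as in t-SNE."""
    num = 1.0 / (1.0 + pairwise_sq_dists(Y))
    np.fill_diagonal(num, 0.0)  # a point is not its own neighbor
    return num / num.sum()

def kl_divergence(P, Q, eps=1e-12):
    """KL(P || Q) summed over entries where P is nonzero."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / (Q[mask] + eps))))

def cross_entropy(logits, labels):
    """Mean softmax cross entropy for integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

def joint_loss(P, Y, logits, labels, weight=0.5):
    """Weighted sum of an embedding divergence and a classification loss.

    `weight` is an assumed trade-off parameter, not a value from the paper.
    """
    embed = kl_divergence(P, student_t_affinities(Y))
    return weight * embed + (1.0 - weight) * cross_entropy(logits, labels)
```

Swapping `kl_divergence` for another divergence is one way to explore the alternative measures the abstract mentions. Because the embedding would come from a trained encoder rather than per-point optimization, new samples could be embedded with a forward pass, which matches the out-of-sample advantage described above.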
