Sarah Leung

Vision-Aided Absolute Trajectory Estimation Using an Unsupervised Deep Network with Online Error Correction

Mar 08, 2018

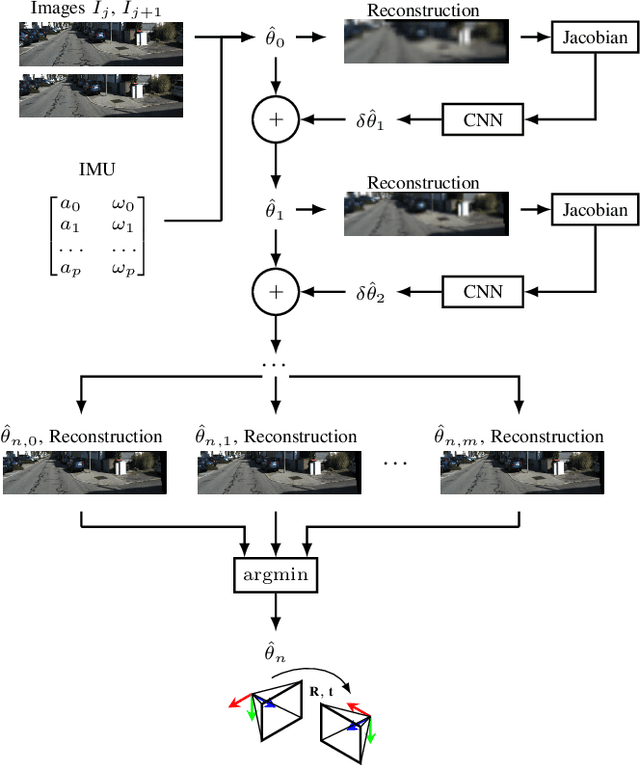

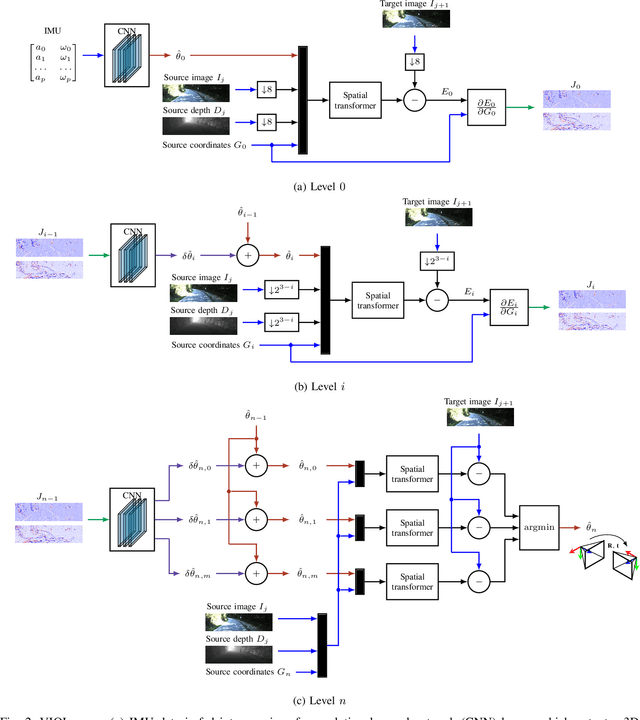

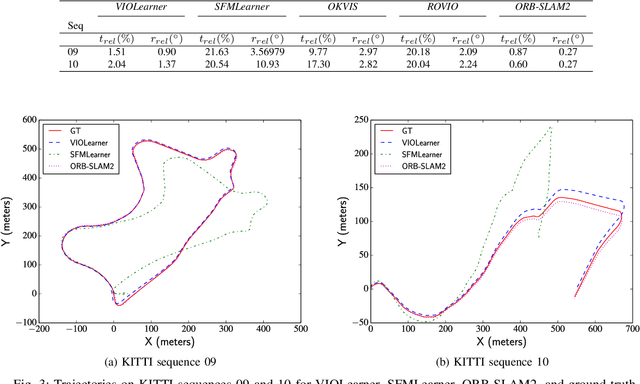

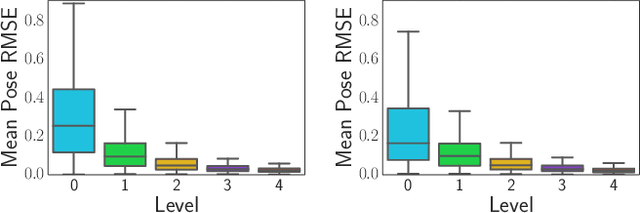

Abstract: We present an unsupervised deep neural network approach to the fusion of RGB-D imagery with inertial measurements for absolute trajectory estimation. Our network, dubbed the Visual-Inertial-Odometry Learner (VIOLearner), learns to perform visual-inertial odometry (VIO) without inertial measurement unit (IMU) intrinsic parameters (corresponding to gyroscope and accelerometer bias or white noise) or the extrinsic calibration between an IMU and camera. The network learns to integrate IMU measurements and generate hypothesis trajectories, which are then corrected online according to the Jacobians of scaled image projection errors with respect to a spatial grid of pixel coordinates. We evaluate our network against state-of-the-art (SOA) visual-inertial odometry, visual odometry, and visual simultaneous localization and mapping (VSLAM) approaches on the KITTI Odometry dataset and demonstrate competitive odometry performance.
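To make the phrase "image projection errors with respect to a spatial grid of pixel coordinates" more concrete, the following is a minimal sketch of the standard pinhole reprojection that such an error is typically built on: a pixel with known depth (from the RGB-D input) is back-projected to 3D, moved by a hypothesized camera motion, and projected again. It is not the VIOLearner implementation. The function name `reproject_pixel`, the intrinsics values, and the pose convention are all assumptions chosen for illustration.

```python
# Illustrative sketch only. Function name, intrinsics, and pose convention
# are assumptions for this example, not details taken from the paper.

def reproject_pixel(u, v, depth, fx, fy, cx, cy, R, t):
    """Map pixel (u, v) with known depth into a second camera view.

    R (3x3, list of rows) and t (length-3) describe a hypothesized rigid
    motion taking points from the source camera frame to the target frame.
    """
    # Back-project the pixel to a 3D point using the pinhole model.
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    z = depth

    # Apply the hypothesized rigid-body motion.
    xt = R[0][0] * x + R[0][1] * y + R[0][2] * z + t[0]
    yt = R[1][0] * x + R[1][1] * y + R[1][2] * z + t[1]
    zt = R[2][0] * x + R[2][1] * y + R[2][2] * z + t[2]

    # Project the moved point back onto the image plane.
    return (fx * xt / zt + cx, fy * yt / zt + cy)


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical intrinsics, roughly the scale of a KITTI-like camera.
FX, FY, CX, CY = 700.0, 700.0, 600.0, 180.0

# With no motion, every pixel maps back onto itself.
same = reproject_pixel(100.0, 50.0, 10.0, FX, FY, CX, CY, IDENTITY, (0.0, 0.0, 0.0))

# A 1 m sideways motion shifts a point at 10 m depth by fx * 1 / 10 pixels.
shifted = reproject_pixel(100.0, 50.0, 10.0, FX, FY, CX, CY, IDENTITY, (1.0, 0.0, 0.0))
```

Comparing the image sampled at the reprojected coordinates with the image actually observed gives a projection error. How that error is scaled and how its Jacobians are used to correct the hypothesis trajectory are specific to the paper and are not reproduced here.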
