Sanchit Arora

Large-Scale Video Classification with Feature Space Augmentation coupled with Learned Label Relations and Ensembling

Sep 21, 2018

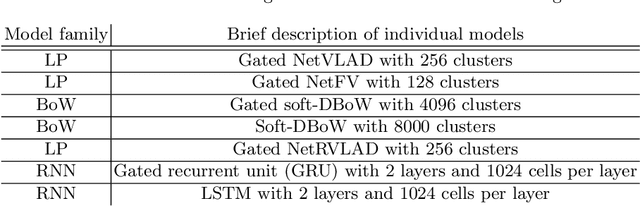

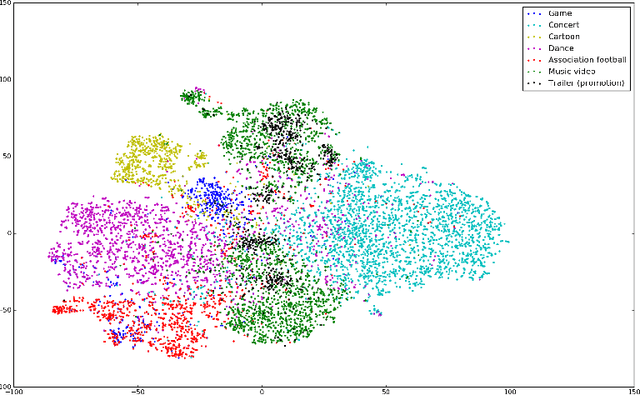

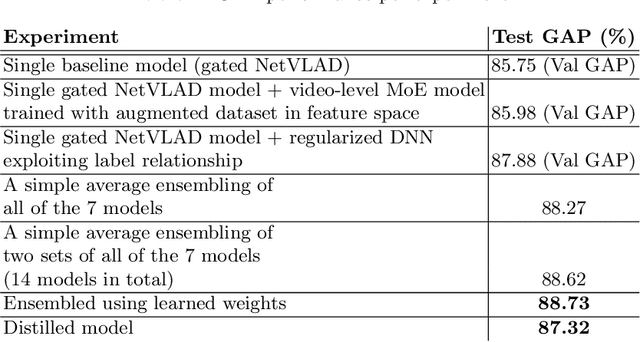

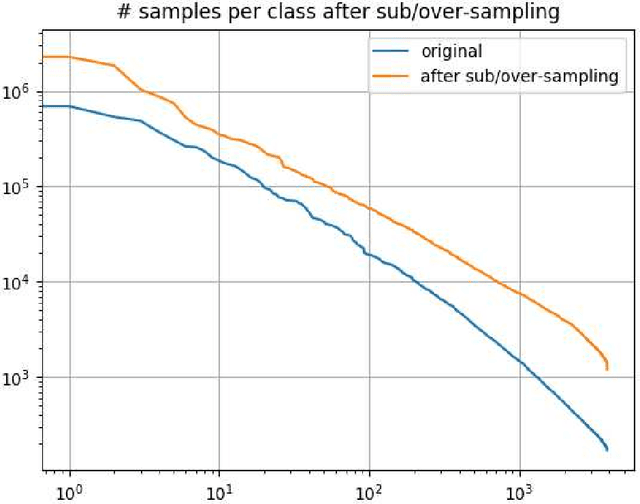

Abstract: This paper presents Axon AI's solution to the 2nd YouTube-8M Video Understanding Challenge, which achieved a final global average precision (GAP) of 88.733% on the private test set (ranked 3rd among 394 teams when the model size constraint is not considered) and 87.287% with a model that meets the size requirement. Two sets of 7 individual models belonging to 3 different families were trained separately. The inference results of these models on the training data were then aggregated and used to train a compact model that meets the model size requirement. To further improve performance, we explored and employed data over-/sub-sampling in feature space, an additional regularization term during training that exploits label relationships, and learned weights for ensembling the individual models.

Towards ontology driven learning of visual concept detectors

May 31, 2016

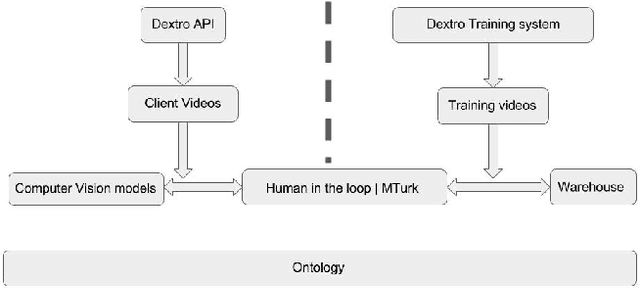

Abstract: The maturity of deep learning techniques has led in recent years to a breakthrough in object recognition in visual media. While on some specific benchmarks neural techniques seem to match, if not outperform, human judgement, detecting arbitrary concepts in arbitrary videos remains an open challenge. In this paper, we propose a system that combines neural techniques, a large-scale visual concept ontology, and an active learning loop to provide on-the-fly model learning of arbitrary concepts. We give an overview of the system as a whole and focus on the central role of the ontology in guiding and bootstrapping the learning of new concepts, improving the recall of concept detection, and, on the user end, providing semantic search over a library of annotated videos.
