Sai Teja Erukude

AI-Driven Cybersecurity Threats: A Survey of Emerging Risks and Defensive Strategies

Jan 06, 2026

Abstract: Artificial Intelligence's dual-use nature is revolutionizing the cybersecurity landscape, introducing new threats across four main categories: deepfakes and synthetic media, adversarial AI attacks, automated malware, and AI-powered social engineering. This paper aims to analyze emerging risks, attack mechanisms, and defense shortcomings related to AI in cybersecurity. We introduce a comparative taxonomy connecting AI capabilities with threat modalities and defenses, review over 70 academic and industry references, and identify impactful opportunities for research, such as hybrid detection pipelines and benchmarking frameworks. The paper is structured thematically by threat type, with each section addressing technical context, real-world incidents, legal frameworks, and countermeasures. Our findings emphasize the urgency for explainable, interdisciplinary, and regulatory-compliant AI defense systems to maintain trust and security in digital ecosystems.

CornViT: A Multi-Stage Convolutional Vision Transformer Framework for Hierarchical Corn Kernel Analysis

Dec 31, 2025

Abstract: Accurate grading of corn kernels is critical for seed certification, directional seeding, and breeding, yet it is still predominantly performed by manual inspection. This work introduces CornViT, a three-stage Convolutional Vision Transformer (CvT) framework that emulates the hierarchical reasoning of human seed analysts for single-kernel evaluation. Three sequential CvT-13 classifiers operate on 384x384 RGB images: Stage 1 distinguishes pure from impure kernels; Stage 2 categorizes pure kernels into flat and round morphologies; and Stage 3 determines the embryo orientation (up vs. down) for pure, flat kernels. Starting from a public corn seed image collection, we manually relabeled and filtered images to construct three stage-specific datasets: 7265 kernels for purity, 3859 pure kernels for morphology, and 1960 pure-flat kernels for embryo orientation, all released as benchmarks. Head-only fine-tuning of ImageNet-22k pretrained CvT-13 backbones yields test accuracies of 93.76% for purity, 94.11% for shape, and 91.12% for embryo-orientation detection. Under identical training conditions, ResNet-50 reaches only 76.56% to 81.02%, whereas DenseNet-121 attains 86.56% to 89.38% accuracy. These results highlight the advantages of convolution-augmented self-attention for kernel analysis. To facilitate adoption, we deploy CornViT in a Flask-based web application that performs stage-wise inference and exposes interpretable outputs through a browser interface. Together, the CornViT framework, curated datasets, and web application provide a deployable solution for automated corn kernel quality assessment in seed quality workflows. Source code and data are publicly available.

* 23 pages
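The three-stage cascade described in the CornViT abstract can be sketched as follows. This is a minimal illustration of the control flow only, not the authors' implementation: `KernelReport`, `grade_kernel`, the label strings, and the three classifier callables are hypothetical stand-ins for the fine-tuned CvT-13 models.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical type: each stage classifier maps an image to a label string.
Classifier = Callable[[object], str]


@dataclass
class KernelReport:
    purity: str                   # "pure" or "impure" (assumed label names)
    shape: Optional[str] = None   # "flat" or "round"; set only for pure kernels
    embryo: Optional[str] = None  # "up" or "down"; set only for pure, flat kernels


def grade_kernel(image, purity_clf: Classifier, shape_clf: Classifier,
                 embryo_clf: Classifier) -> KernelReport:
    """Run the stages in order, stopping as soon as a later stage does not apply."""
    report = KernelReport(purity=purity_clf(image))
    if report.purity != "pure":
        return report             # Stage 1 rejected the kernel
    report.shape = shape_clf(image)
    if report.shape != "flat":
        return report             # Stage 3 applies to flat kernels only
    report.embryo = embryo_clf(image)
    return report
```

Each stage runs only when the previous stage's result makes it meaningful, so an impure kernel never reaches the shape or embryo classifiers.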

Galaxy image simplification using Generative AI

Jul 15, 2025

Abstract: Modern digital sky surveys have been acquiring images of billions of galaxies. While these images often provide sufficient details to analyze the shape of the galaxies, accurate analysis of such high volumes of images requires effective automation. Current solutions often rely on machine learning annotation of the galaxy images based on a set of pre-defined classes. Here we introduce a new approach to galaxy image analysis that is based on generative AI. The method simplifies the galaxy images and automatically converts them into a "skeletonized" form. The simplified images allow accurate measurements of the galaxy shapes and analysis that is not limited to a certain pre-defined set of classes. We demonstrate the method by applying it to galaxy images acquired by the DESI Legacy Survey. The code and data are publicly available. The method was applied to 125,000 DESI Legacy Survey images, and the catalog of the simplified images is publicly available.

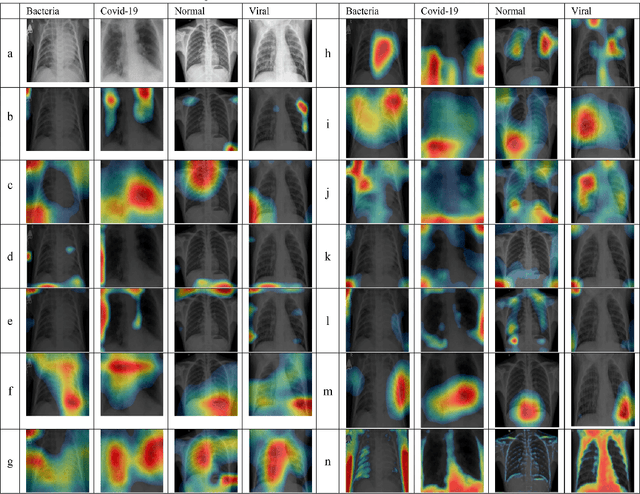

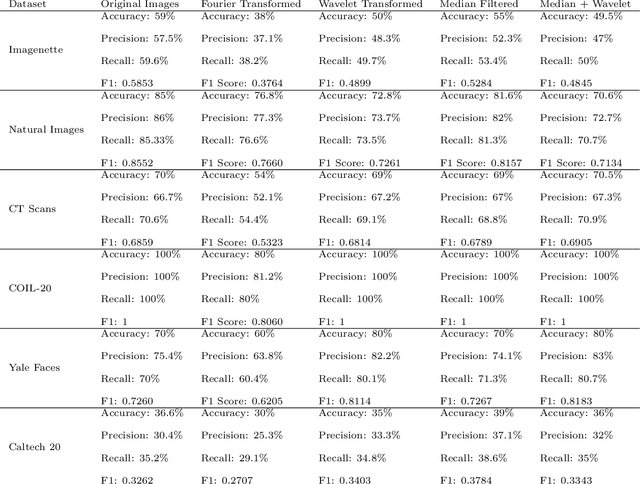

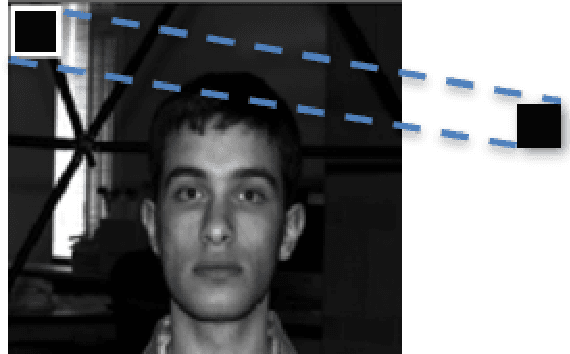

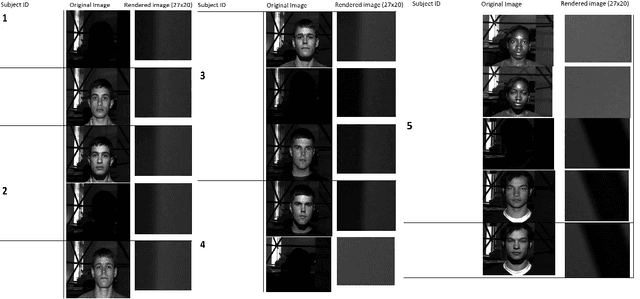

Identifying Bias in Deep Neural Networks Using Image Transforms

Dec 17, 2024

Abstract: CNNs have become one of the most commonly used computational tools in the past two decades. One of the primary downsides of CNNs is that they work as a "black box", where the user cannot necessarily know how the image data are analyzed, and therefore needs to rely on empirical evaluation to test the efficacy of a trained CNN. This can lead to hidden biases that affect the performance evaluation of neural networks, but are difficult to identify. Here we discuss examples of such hidden biases in common and widely used benchmark datasets, and propose techniques for identifying dataset biases that can affect the standard performance evaluation metrics. One effective approach to identify dataset bias is to perform image classification by using merely blank background parts of the original images. However, in some situations a blank background in the images is not available, making it more difficult to separate foreground or contextual information from the bias. To overcome this, we propose a method to identify dataset bias without the need to crop background information from the images. That method is based on applying several image transforms to the original images, including Fourier transform, wavelet transforms, median filter, and their combinations. These transforms were applied to recover background bias information that CNNs use to classify images. These transformations affect the contextual visual information in a different manner than they affect the systemic background bias. Therefore, the method can distinguish between contextual information and the bias, and alert on the presence of background bias even without the need to separate sub-image parts from the blank background of the original images. Code used in the experiments is publicly available.

* Computers, published
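As a rough illustration of the transforms named in the abstract above, the sketch below builds Fourier, median-filter, and combined views of a grayscale image using NumPy only. It is not the authors' published code: the function names, the 3x3 window size, and the log scaling are assumptions made for this example, and the wavelet transform is omitted to keep the block dependency-free.

```python
import numpy as np


def fourier_magnitude(image: np.ndarray) -> np.ndarray:
    """Log-scaled magnitude spectrum of a 2-D image, with the DC term centered."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    return np.log1p(np.abs(spectrum))


def median_filter_3x3(image: np.ndarray) -> np.ndarray:
    """3x3 median filter; borders are handled by replicating edge pixels."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Collect the nine shifted copies that make up each pixel's neighborhood.
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(windows, axis=0), axis=0)


def transformed_views(image: np.ndarray) -> dict:
    """Return the transformed versions of one image, keyed by transform name."""
    filtered = median_filter_3x3(image)
    return {
        "fourier": fourier_magnitude(image),
        "median": filtered,
        "median_then_fourier": fourier_magnitude(filtered),
    }
```

In an experiment of the kind the abstract describes, a classifier would be trained and evaluated on each transformed view, and the resulting accuracies compared with those on the original images.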
