Sai Samrat Kankanala

Uncovering the role of semantic and acoustic cues in normal and dichotic listening

Nov 18, 2024

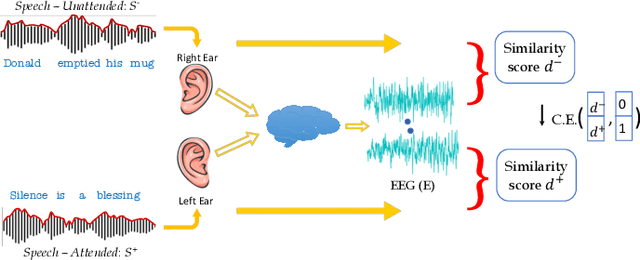

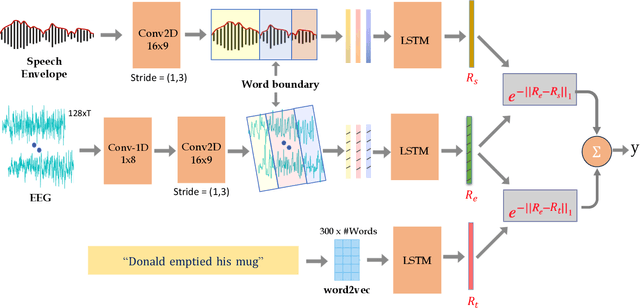

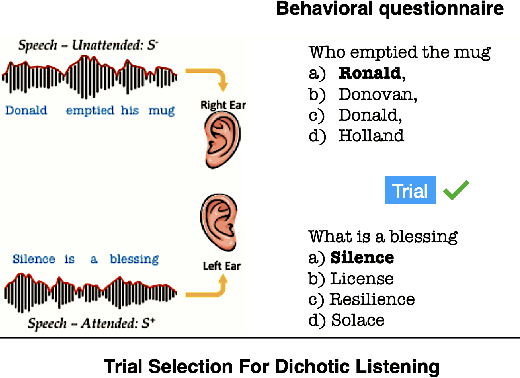

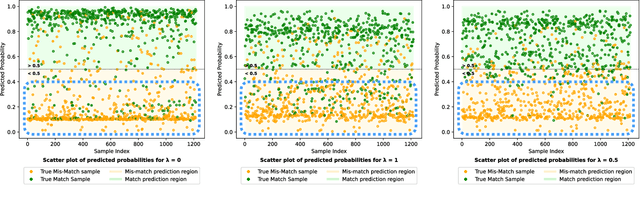

Abstract: Despite extensive research, the precise role of acoustic and semantic cues in complex speech perception tasks remains unclear. In this study, we propose a paradigm to understand the encoding of these cues in electroencephalogram (EEG) data, using a match-mismatch (MM) classification task. The MM task involves determining whether a given stimulus and neural response correspond to each other. We design a multi-modal sequence model, based on the long short-term memory (LSTM) architecture, to perform the MM task. The model takes three inputs: an acoustic stimulus (derived from the speech envelope), a semantic stimulus (derived from textual representations of the speech content), and a neural response (derived from the EEG data). Our experiments are performed under two separate conditions: i) a natural passive listening condition, and ii) an auditory-attention-based dichotic listening condition. Using the MM task as the analysis framework, we observe that: a) speech perception is fragmented based on word boundaries, b) acoustic and semantic cues offer similar levels of MM task performance in natural listening conditions, and c) semantic cues offer significantly improved MM classification over acoustic cues in the dichotic listening task. Further, the study provides evidence of a right-ear advantage in dichotic listening conditions.
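To make the MM task concrete, the following is a minimal sketch of how matched and mismatched stimulus/EEG pairs could be constructed for a binary classifier. It is not the authors' pipeline: the function name `make_mm_pairs`, the fixed-length windows, and the time-shifted mismatch sampling are all assumptions chosen for illustration (the abstract suggests that segmentation at word boundaries matters, which this sketch does not model), and the data are random placeholders.

```python
import numpy as np

def make_mm_pairs(stimulus, eeg, win, offset):
    """Build matched (label 1) and mismatched (label 0) stimulus/EEG pairs.

    Hypothetical helper: fixed windows and a fixed time shift are
    illustrative assumptions, not the method described in the paper.
    """
    n = min(len(stimulus), len(eeg))
    stim_segs, eeg_segs, labels = [], [], []
    # Step through the recording in non-overlapping windows, leaving room
    # for the shifted (mismatched) stimulus segment at the end.
    for start in range(0, n - win - offset + 1, win):
        eeg_seg = eeg[start:start + win]
        # Matched pair: stimulus and EEG taken from the same time span.
        stim_segs.append(stimulus[start:start + win])
        eeg_segs.append(eeg_seg)
        labels.append(1)
        # Mismatched pair: same EEG, stimulus taken `offset` samples later.
        stim_segs.append(stimulus[start + offset:start + offset + win])
        eeg_segs.append(eeg_seg)
        labels.append(0)
    return np.stack(stim_segs), np.stack(eeg_segs), np.array(labels)

# Placeholder data: a 1-D "speech envelope" and a 4-channel "EEG" recording.
rng = np.random.default_rng(0)
envelope = rng.standard_normal(1000)
eeg_data = rng.standard_normal((1000, 4))

stim, resp, y = make_mm_pairs(envelope, eeg_data, win=100, offset=200)
print(stim.shape, resp.shape, y.shape)
```

A sequence model such as an LSTM would then be trained to predict the label from each (stimulus, response) pair; a semantic stimulus could be paired with the EEG in the same way in place of, or alongside, the envelope.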
