S. Chatzinotas

MU-MIMO Symbol-Level Precoding for QAM Constellations with Maximum Likelihood Receivers

Oct 29, 2024

Abstract: In this paper, we investigate symbol-level precoding (SLP) and efficient decoding techniques for downlink transmission, focusing on scenarios in which the base station (BS) transmits multiple QAM constellation streams to users equipped with multiple receive antennas. We begin by formulating a joint symbol-level transmit precoding and receive combining optimization problem. We address this coupled problem with the alternating optimization (AO) method and derive closed-form solutions by analyzing the two resulting subproblems. Furthermore, because the receive combining matrix depends on the transmit signals, we switch to the maximum likelihood detection (MLD) method for decoding. Notably, we demonstrate that the smallest singular value of the precoding matrix significantly impacts the performance of MLD: the smaller this singular value, the worse the detection performance. Additionally, we show that the traditional SLP matrix is rank-one, which makes it infeasible to apply MLD directly at the receiver. To circumvent this limitation, we propose a novel symbol-level smallest singular value maximization problem, termed SSVMP, which enables SLP in systems where users employ MLD. Moreover, to reduce the number of variables to be optimized, we derive a more generic semidefinite programming (SDP)-based optimization problem. Numerical results validate the effectiveness of the proposed schemes and demonstrate that they significantly outperform the traditional block diagonalization (BD)-based method.
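To illustrate the singular-value argument, the sketch below compares a rank-one precoding matrix with a random full-rank one for two QPSK streams and four transmit antennas. It assumes that the traditional SLP output x for a symbol vector s can be written as the equivalent matrix P = x s^H / ||s||^2. This is an illustrative construction and not necessarily the paper's. For any P with full column rank, ||P(s_i - s_j)|| ≥ σ_min(P) ||s_i - s_j||, so a larger smallest singular value keeps the precoded candidates apart for MLD. A rank-one P, by contrast, has σ_min = 0 and can map distinct symbol vectors onto the same transmit vector. Channel and noise are omitted, and all sizes and random matrices are hypothetical.

```python
import numpy as np

# Normalized QPSK alphabet and all 16 candidate symbol vectors for 2 streams.
QPSK = np.array([1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]) / np.sqrt(2)
CANDIDATES = np.array([[a, b] for a in QPSK for b in QPSK])

def rank_one_slp_matrix(x, s):
    """Assumed equivalent precoder P = x s^H / ||s||^2, so that P @ s == x."""
    return np.outer(x, s.conj()) / np.vdot(s, s).real

def smallest_singular_value(P):
    return np.linalg.svd(P, compute_uv=False).min()

def min_precoded_distance(P, candidates):
    """Smallest ||P (s_i - s_j)|| over distinct candidates (noiseless, before the channel)."""
    best = np.inf
    for i in range(len(candidates)):
        for j in range(i + 1, len(candidates)):
            best = min(best, np.linalg.norm(P @ (candidates[i] - candidates[j])))
    return best

rng = np.random.default_rng(0)
n_tx = 4
# Hypothetical SLP output for one symbol vector, and a hypothetical full-rank precoder.
x = (rng.standard_normal(n_tx) + 1j * rng.standard_normal(n_tx)) / np.sqrt(2)
P_slp = rank_one_slp_matrix(x, CANDIDATES[5])
P_full = (rng.standard_normal((n_tx, 2)) + 1j * rng.standard_normal((n_tx, 2))) / np.sqrt(2)

results = {}
for name, P in [("rank-one SLP", P_slp), ("full-rank", P_full)]:
    results[name] = (smallest_singular_value(P), min_precoded_distance(P, CANDIDATES))
    print(f"{name}: sigma_min = {results[name][0]:.3e}, min distance = {results[name][1]:.3e}")
```

The minimum distance between QPSK symbol vectors that differ in one stream is √2. For the full-rank matrix, the printed minimum distance should therefore be at least √2 · σ_min.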

Artificial Intelligence for Satellite Communication and Non-Terrestrial Networks: A Survey

Apr 25, 2023

Abstract: This paper surveys the application and development of Artificial Intelligence (AI) in Satellite Communication (SatCom) and Non-Terrestrial Networks (NTN). We first present a comprehensive list of use cases, the related challenges, and the main AI tools capable of addressing those challenges. For each use case, we present the main motivation, a system description, the available non-AI solutions, and the potential benefits and existing works using AI. We also discuss the pros and cons of on-board and on-ground AI-based architectures, and we review the current commercial and research activities relevant to this topic. Next, we describe the state-of-the-art hardware solutions for developing machine learning (ML) in real satellite systems. Finally, we discuss the long-term developments of AI in the SatCom and NTN sectors and potential research directions. This paper provides a comprehensive and up-to-date overview of the opportunities and challenges offered by AI to improve the performance and efficiency of NTNs.

NB-IoT via LEO satellites: An efficient resource allocation strategy for uplink data transmission

Jul 02, 2021

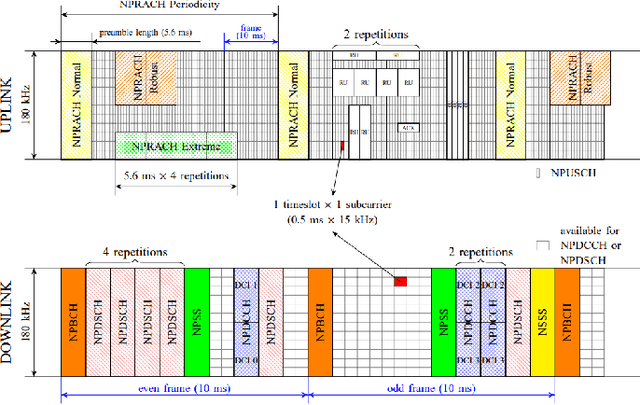

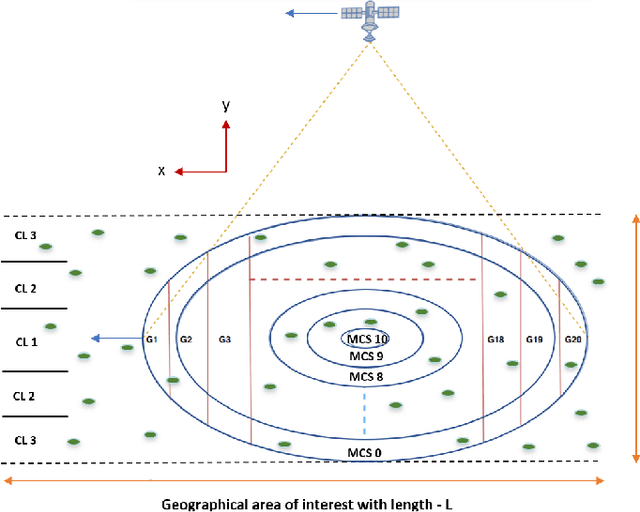

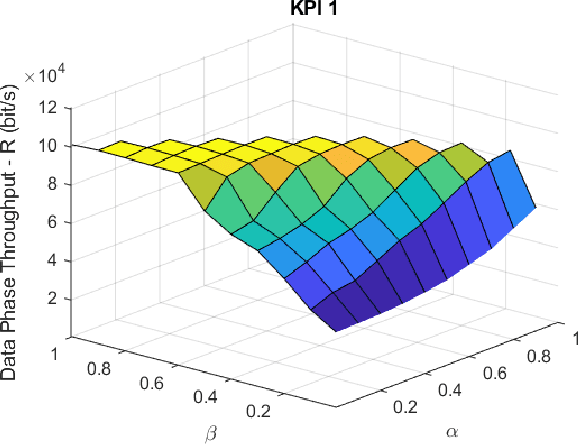

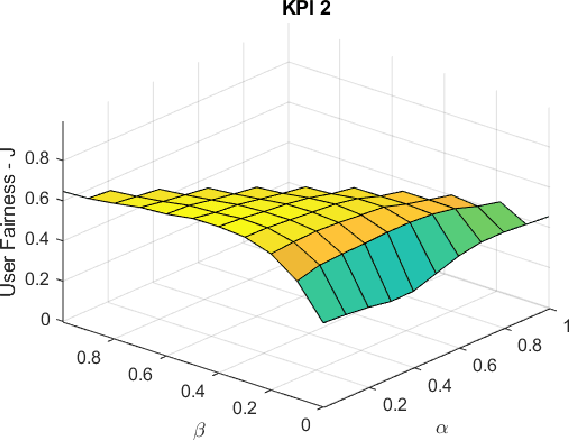

Abstract: In this paper, we focus on the use of Low-Earth Orbit (LEO) satellites to provide Narrowband Internet of Things (NB-IoT) connectivity to on-ground user equipment (UE). Conventional resource allocation algorithms for NB-IoT systems are designed specifically for terrestrial infrastructures, where devices are under the coverage of a specific base station and the whole system varies very slowly over time. The existing methods in the literature cannot be applied to LEO satellite-based NB-IoT systems for several reasons. First, as the LEO satellite moves, the channel parameters of each user change quickly over time, so delaying the scheduling of a given user would result in a resource allocation based on outdated parameters. Second, the differential Doppler shift, a typical impairment in LEO communications, depends directly on the relative distance between users. Scheduling users whose separation exceeds a certain distance in the same radio frame would violate the differential Doppler limit supported by the NB-IoT standard. Third, the propagation delay over a LEO satellite channel is around 4 to 16 times higher than in a terrestrial system, which makes it necessary to minimize the message exchange between the users and the base station. In this work, we propose a novel uplink resource allocation strategy that jointly incorporates these new design considerations together with the distinct channel conditions, satellite coverage times, and data demands of various users on Earth. The methodology proposed in this paper can serve as a framework for future works in the field.
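The differential Doppler constraint can be illustrated with a toy geometry. The sketch below computes each user's Doppler shift and then greedily groups users so that the Doppler spread inside a group stays below a threshold. It assumes a flat ground plane, no Earth rotation, a 2 GHz carrier, and a satellite at 600 km altitude moving at 7.56 km/s along one axis. The threshold, user positions, and all parameter values are hypothetical. The grouping rule stands in for, rather than reproduces, the allocation strategy proposed in the paper.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s

def doppler_shifts(user_xy, sat_alt, sat_speed, f_c):
    """Doppler shift (Hz) at stationary ground users for a satellite at (0, 0, sat_alt)
    moving along +x. Flat ground and no Earth rotation are simplifying assumptions."""
    sat_pos = np.array([0.0, 0.0, sat_alt])
    sat_vel = np.array([sat_speed, 0.0, 0.0])
    users = np.column_stack([user_xy, np.zeros(len(user_xy))])
    los = users - sat_pos                                   # satellite-to-user vectors
    unit = los / np.linalg.norm(los, axis=1, keepdims=True)
    return f_c / C * (unit @ sat_vel)                       # positive while approaching

def group_by_differential_doppler(dopplers, max_diff):
    """Greedy grouping over sorted Doppler values so that each group spans at most
    max_diff Hz; each group is a candidate set of users for one radio frame."""
    groups, current, start = [], [], 0.0
    for idx in np.argsort(dopplers):
        if current and dopplers[idx] - start > max_diff:
            groups.append(current)
            current = []
        if not current:
            start = dopplers[idx]
        current.append(int(idx))
    if current:
        groups.append(current)
    return groups

rng = np.random.default_rng(1)
user_xy = rng.uniform(-200e3, 200e3, size=(20, 2))      # hypothetical user positions, m
f_d = doppler_shifts(user_xy, sat_alt=600e3, sat_speed=7.56e3, f_c=2e9)
MAX_DIFF_HZ = 2_000.0                                   # hypothetical limit, not the NB-IoT value
groups = group_by_differential_doppler(f_d, MAX_DIFF_HZ)
for g in groups:
    print(len(g), f_d[g].max() - f_d[g].min())
```

Sorting first lets a single pass build the groups, because users with similar Doppler shifts end up adjacent. A real scheduler would also need to weigh the channel conditions, coverage times, and data demands mentioned above.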

Random Access Procedure over Non-Terrestrial Networks: From Theory to Practice

Jun 29, 2021

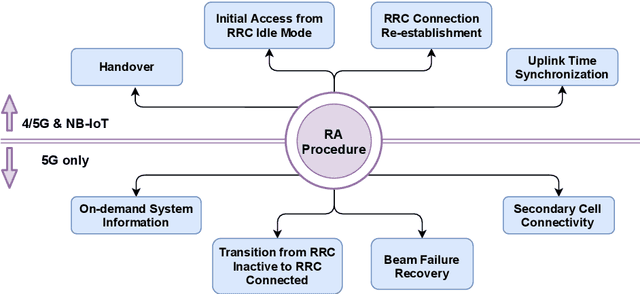

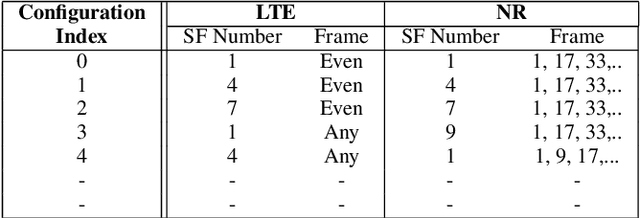

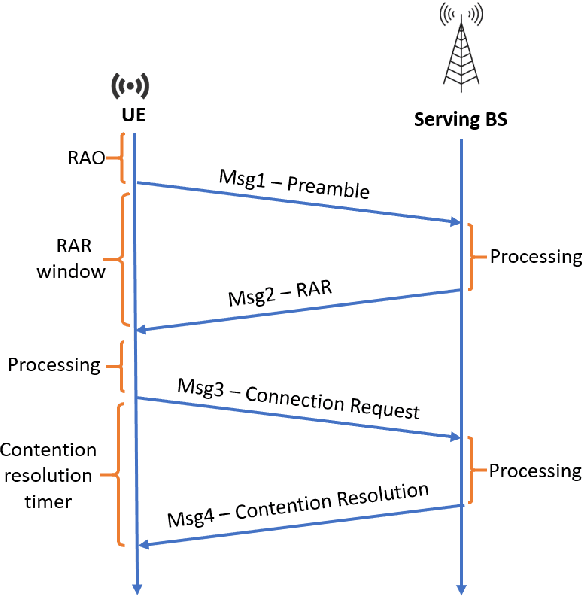

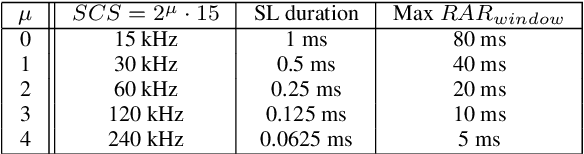

Abstract: Non-terrestrial Networks (NTNs) have become an appealing concept over the last few years, and they are foreseen as a cornerstone of the next generations of mobile communication systems. Despite opening up new market opportunities and use cases, the novel impairments caused by signal propagation over the NTN channel compromise several procedures of the current cellular standards. One of the first and most important procedures affected is the random access (RA) procedure, which is mainly used to achieve uplink synchronization among users in several standards, such as the fourth and fifth generations of mobile communication (4G and 5G) and narrowband internet of things (NB-IoT). In this work, we analyse the challenges that the considerably increased link delay imposes on the RA procedure and propose new solutions to overcome them. A trade-off analysis of various solutions is provided, which also takes into account the solutions already existing in the literature. To broaden the scope of applicability, we keep the analysis general, targeting 4G, 5G and NB-IoT systems, since the RA procedure is quasi-identical among these technologies. Last but not least, we go one step further and validate our techniques in an experimental setup. The setup consists of a user and a base station implemented in OpenAirInterface (OAI), and an NTN channel implemented in hardware that emulates the signal propagation delay. The laboratory test-bed built in this work not only enables us to validate various solutions but also plays a crucial role in identifying novel challenges not previously treated in the literature. Finally, we present a key performance indicator (KPI) of the RA procedure over NTN: the time that a single user requires to establish a connection with the base station.
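The RA difficulty stems from a round-trip delay that grows with satellite altitude and falls with elevation angle. The sketch below computes the service-link round-trip time from the standard spherical-Earth slant-range formula and checks it against a response window. It assumes a regenerative payload, so the base station is on board and the feeder link is ignored. It also ignores processing delay and uses a hypothetical 10 ms window. None of these values come from the paper or from a specific 3GPP release. The same round-trip time is roughly the timing advance a user would need for uplink synchronization.

```python
import math

R_EARTH = 6_371e3      # mean Earth radius, m
C = 299_792_458.0      # speed of light, m/s

def slant_range(alt_m, elev_deg):
    """User-to-satellite distance (m) on a spherical Earth for a given elevation angle."""
    e = math.radians(elev_deg)
    return math.sqrt((R_EARTH + alt_m) ** 2 - (R_EARTH * math.cos(e)) ** 2) - R_EARTH * math.sin(e)

def round_trip_ms(alt_m, elev_deg):
    """Service-link round-trip time in ms (regenerative payload assumed: no feeder link)."""
    return 2.0 * slant_range(alt_m, elev_deg) / C * 1e3

WINDOW_MS = 10.0       # hypothetical response window, counted from preamble transmission

for alt_km in (600, 1200, 35786):          # example LEO and GEO altitudes
    for elev in (90, 30, 10):
        rtt = round_trip_ms(alt_km * 1e3, elev)
        print(f"{alt_km:>6} km, {elev:>2} deg: RTT = {rtt:7.2f} ms, "
              f"response inside window: {rtt < WINDOW_MS}")
```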

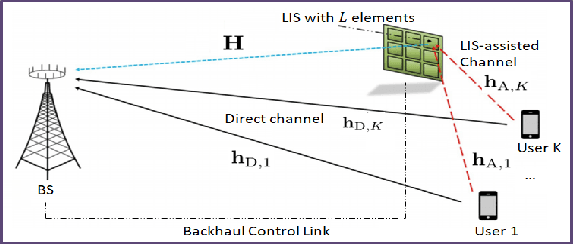

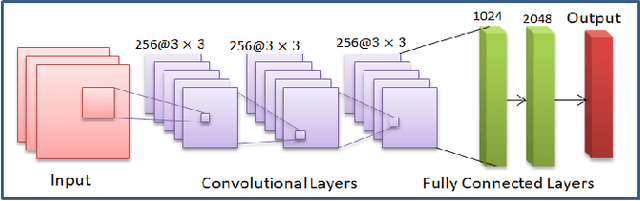

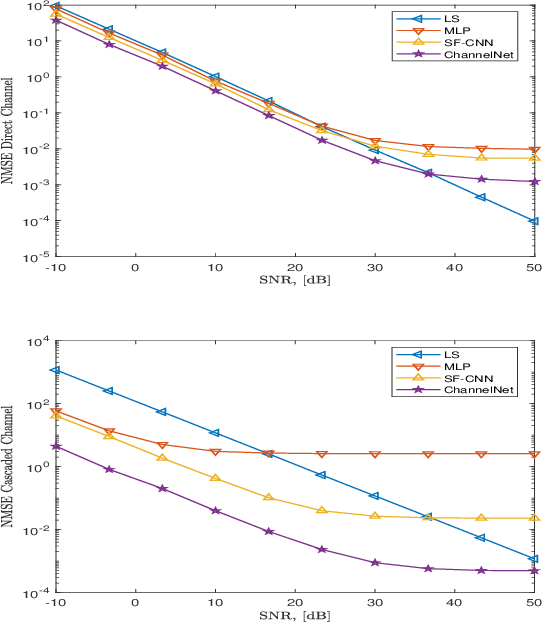

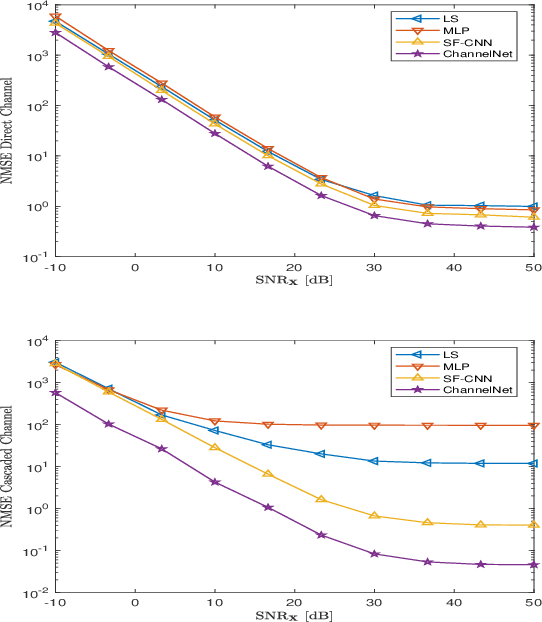

Deep Channel Learning For Large Intelligent Surfaces Aided mm-Wave Massive MIMO Systems

Jan 29, 2020

Abstract: This letter presents the first work introducing a deep learning (DL) framework for channel estimation in large intelligent surface (LIS)-assisted massive MIMO (multiple-input multiple-output) systems. A twin convolutional neural network (CNN) architecture is designed and fed with the received pilot signals to estimate both the direct and cascaded channels. In a multi-user scenario, each user has access to the CNN to estimate its own channel. The performance of the proposed DL approach is evaluated and compared with state-of-the-art DL-based techniques, and its superior performance is demonstrated.
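The sketch below shows, under a simplified model, what received pilot signals for direct and cascaded channel estimation can look like. It assumes a single-antenna user, unit pilot symbols, and i.i.d. Rayleigh channels, which is a simplification since the paper targets mm-Wave. It uses the uplink model y_t = h_d + G diag(φ_t) h_r + n_t, where H_c = G diag(h_r) is the cascaded channel. With N + 1 pilot slots and DFT-based LIS phase patterns, the pilot matrix is invertible, so a least-squares (LS) baseline recovers [h_d, H_c]. The real and imaginary parts of the received pilots are stacked as two input channels, one common way to feed complex data to a CNN. The twin CNN itself is omitted. All sizes, the noise level, and the phase design are hypothetical.

```python
import numpy as np

M, N = 8, 16      # BS antennas and LIS elements (hypothetical sizes)
T = N + 1         # pilot slots, one per unknown column of [h_d, H_c]
rng = np.random.default_rng(0)

def crandn(*shape):
    """i.i.d. CN(0, 1) samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

# Column t of V is [1, phi_t]: the leading 1 multiplies the direct channel, and phi_t
# holds the unit-modulus LIS phases used in slot t (DFT matrix, so V V^H = T I).
V = np.exp(-2j * np.pi * np.outer(np.arange(T), np.arange(T)) / T)

def received_pilots(h_d, H_c, noise_std):
    """Y[:, t] = h_d + H_c @ phi_t + noise, with unit pilot symbols."""
    return np.column_stack([h_d, H_c]) @ V + noise_std * crandn(M, T)

def ls_estimate(Y):
    """Least-squares baseline (not the paper's CNN): [h_d, H_c] = Y V^{-1} = Y V^H / T."""
    H = Y @ V.conj().T / T
    return H[:, 0], H[:, 1:]

def to_cnn_input(Z):
    """Stack real and imaginary parts as two channels for a CNN input tensor."""
    return np.stack([Z.real, Z.imag], axis=0)

h_d, H_c = crandn(M), crandn(M, N)
Y = received_pilots(h_d, H_c, noise_std=0.1)
x_in = to_cnn_input(Y)                      # shape (2, M, T)
h_d_ls, H_c_ls = ls_estimate(Y)
nmse = np.linalg.norm(H_c_ls - H_c) ** 2 / np.linalg.norm(H_c) ** 2
print(x_in.shape, nmse)
```

A learned estimator such as the proposed CNN would be compared against a baseline of this kind, using the same pilot budget.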
