Ryan W. Stidham

Motion-based Camera Localization System in Colonoscopy Videos

Dec 04, 2020

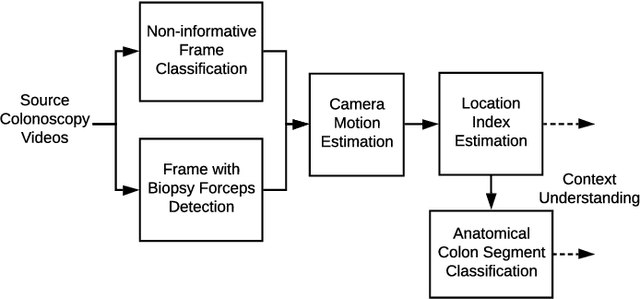

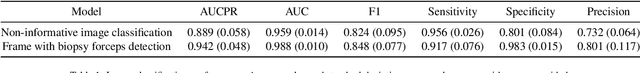

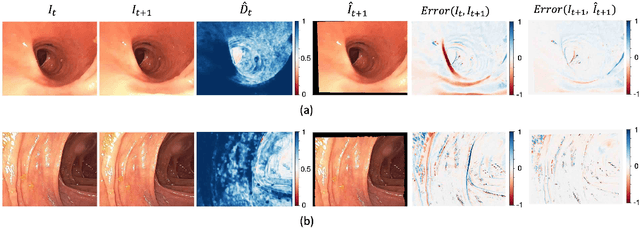

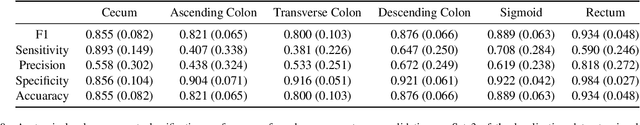

Abstract: Optical colonoscopy is an essential diagnostic and prognostic tool for many gastrointestinal conditions, including cancer screening and staging, intestinal bleeding, diarrhea, abdominal symptom evaluation, and inflammatory bowel disease assessment. However, the evaluation, classification, and quantification of colonoscopy findings are subject to inter-observer variation. Given the subjectivity of qualitative human interpretation, automated assessment of colonoscopy is of interest. Knowing where the camera is located is essential for inferring the meaning and context of findings in diseases evaluated by colonoscopy. In this study, we propose a camera localization system that estimates the approximate anatomic location of the camera and classifies the anatomical colon segment in which it lies. The system first detects and removes non-informative frames, that is, frames that carry no camera motion information. A self-training, end-to-end convolutional neural network then estimates the camera motion. From the estimated camera motion, the camera trajectory is derived and a location index is calculated. A colon template built on the estimated location index is then used to classify the anatomical colon segment. The algorithm was trained and validated using colonoscopy videos collected from routine clinical practice. The average classification accuracy is 0.759, which is substantially higher than that obtained with location indices built from other methods.
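The last two stages described above (trajectory to location index, then template-based segment classification) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the normalization of the location index to [0, 1], the segment names, and the template boundary values are all assumptions made for illustration, and the per-frame forward motion is taken as given rather than estimated by a network.

```python
import numpy as np

# Hypothetical colon template: fractions of the total path length at which
# one segment ends and the next begins. These values are illustrative only.
SEGMENTS = ("ascending", "transverse", "descending", "rectosigmoid")
BOUNDARIES = (0.3, 0.6, 0.85)


def location_index(forward_motion, informative):
    """Turn per-frame forward motion into a normalized location index.

    forward_motion: estimated displacement along the colon for each frame.
    informative: boolean mask; False marks frames with no usable motion.
    """
    motion = np.asarray(forward_motion, dtype=float)
    mask = np.asarray(informative, dtype=bool)
    # Non-informative frames contribute no displacement.
    motion = np.where(mask, motion, 0.0)
    # Cumulative displacement is a one-dimensional stand-in for the trajectory.
    travelled = np.cumsum(motion)
    total = travelled[-1]
    if total <= 0:
        raise ValueError("no net forward motion to normalize by")
    return np.clip(travelled / total, 0.0, 1.0)


def classify_segment(index):
    """Map each location index to a segment using the template boundaries."""
    bins = np.searchsorted(np.asarray(BOUNDARIES), index, side="right")
    return [SEGMENTS[i] for i in bins]


if __name__ == "__main__":
    idx = location_index([1, 1, 5, 1, 1], [True, True, False, True, True])
    print(idx)
    print(classify_segment(idx))
```

Masking non-informative frames before accumulating keeps spurious motion estimates from blurred or occluded frames out of the trajectory, which is the role the abstract assigns to the first stage of the pipeline.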
