Rujing Wang

Context-LSTM: a robust classifier for video detection on UCF101

Mar 13, 2022

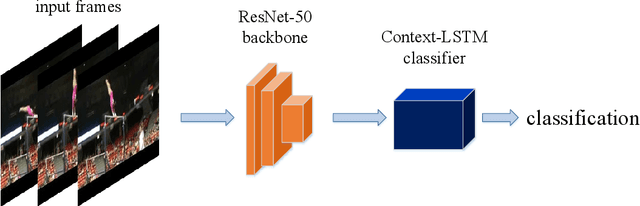

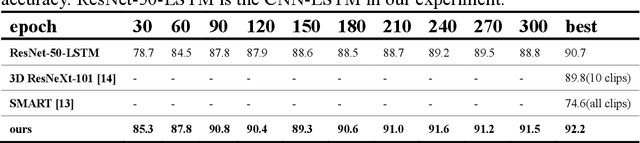

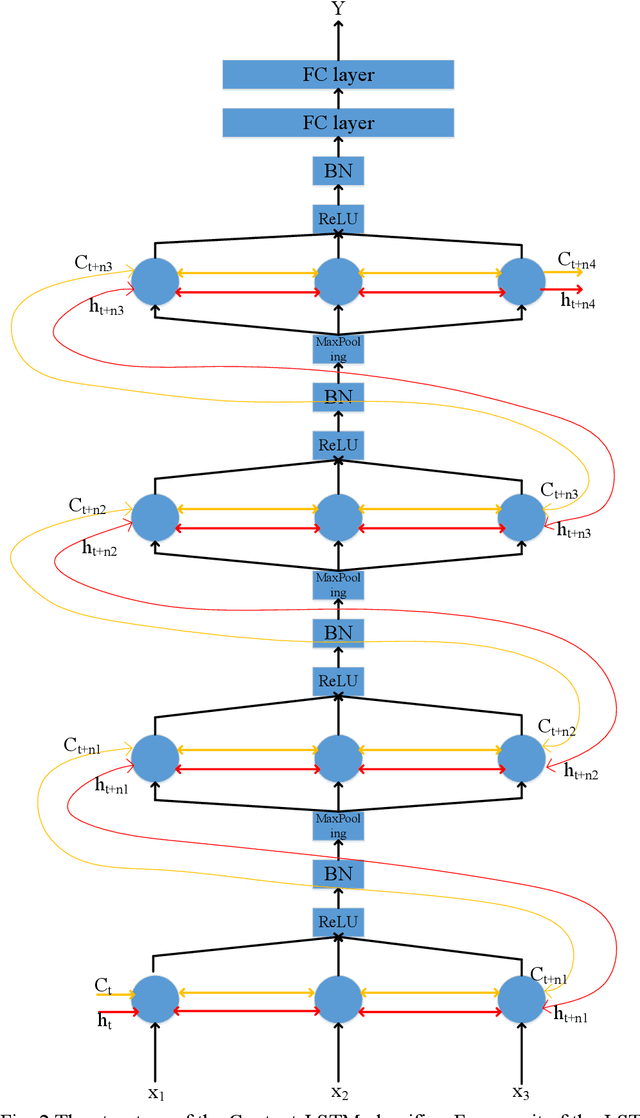

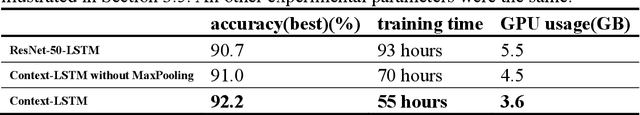

Abstract: Video detection and human action recognition can be computationally expensive, and the models take a long time to train. In this paper, we aim to reduce the training time and GPU memory usage of video detection while achieving a competitive detection accuracy. Other works such as Two-stream, C3D, and TSN have shown excellent performance on UCF101. Here, we use a simple LSTM structure for video detection and achieve a competitive top-1 accuracy on the entire validation set of UCF101. The structure is named Context-LSTM because it can process deep temporal features, and it may simulate the human recognition system. We cascaded LSTM blocks in PyTorch and connected the cell-state flow and the hidden-output flow between them. At the connections between blocks, we applied ReLU, batch normalization, and max pooling. Context-LSTM reduces the training time and GPU memory usage while keeping a state-of-the-art top-1 accuracy on the entire UCF101 validation set, showing robust performance on video action detection.

A stepped sampling method for video detection using LSTM

Jul 18, 2021

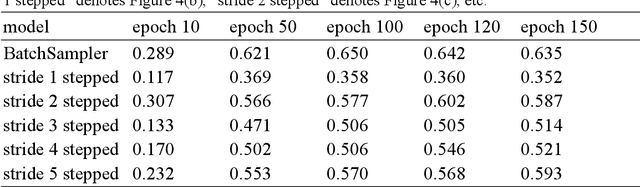

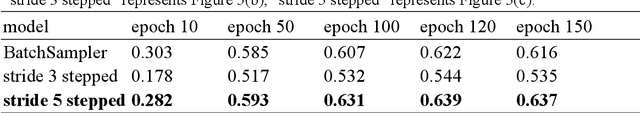

Abstract: Artificial neural networks that simulate humans have achieved great success. From the perspective of simulating how human memory works, we propose a stepped sampler based on "repeated input": data is repeatedly fed to the LSTM model stepwise within a batch. The stepped sampler is used to strengthen the LSTM's ability to fuse temporal information. We tested the stepped sampler on PyTorch's built-in LSTM. Compared with PyTorch's traditional samplers, such as the sequential sampler and the batch sampler, the training loss with the proposed stepped sampler converges faster and is more stable after convergence, while a higher test accuracy is maintained. We also quantified the algorithm of the stepped sampler.
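The abstract does not spell out the sampling rule, so the following is only a minimal sketch of one plausible reading of "repeated input": consecutive batches overlap, with the batch window advancing by a fixed step smaller than the batch size, so most samples are seen again in the next batch. The function name `stepped_batches` and the parameters `batch_size` and `step` are hypothetical and are not taken from the paper.

```python
from typing import Iterator, List


def stepped_batches(num_samples: int, batch_size: int, step: int = 1) -> Iterator[List[int]]:
    """Yield lists of sample indices from a window that slides by `step`.

    Assumption (not from the paper): with step < batch_size, neighbouring
    batches share batch_size - step indices, which is how "repeated input"
    is interpreted here. With step == batch_size this reduces to ordinary
    non-overlapping sequential batches (incomplete last batch dropped).
    """
    if batch_size <= 0 or step <= 0:
        raise ValueError("batch_size and step must be positive")
    start = 0
    # Stop once a full window no longer fits in the dataset.
    while start + batch_size <= num_samples:
        yield list(range(start, start + batch_size))
        start += step


if __name__ == "__main__":
    # Overlapping (stepped) batches versus plain sequential batches.
    print(list(stepped_batches(6, batch_size=3, step=1)))
    print(list(stepped_batches(6, batch_size=3, step=3)))
```

Index lists of this form could be fed to a PyTorch `DataLoader` through its `batch_sampler` argument, after wrapping the generator in a re-iterable object so that it can be traversed once per epoch.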
