Ruitong Sun

OUSAC: Optimized Guidance Scheduling with Adaptive Caching for DiT Acceleration

Dec 16, 2025

Abstract: Diffusion models have emerged as the dominant paradigm for high-quality image generation, yet their computational expense remains substantial due to iterative denoising. Classifier-Free Guidance (CFG) significantly enhances generation quality and controllability but doubles the computation by requiring both conditional and unconditional forward passes at every timestep. We present OUSAC (Optimized gUidance Scheduling with Adaptive Caching), a framework that accelerates diffusion transformers (DiT) through systematic optimization. Our key insight is that variable guidance scales enable sparse computation: adjusting scales at certain timesteps can compensate for skipping CFG at others, enabling both fewer total sampling steps and fewer CFG steps while maintaining quality. However, variable guidance patterns introduce denoising deviations that undermine standard caching methods, which assume constant CFG scales across steps. Moreover, different transformer blocks are affected at different levels under dynamic conditions. This paper develops a two-stage approach leveraging these insights. Stage-1 employs evolutionary algorithms to jointly optimize which timesteps to skip and what guidance scale to use, eliminating up to 82% of unconditional passes. Stage-2 introduces adaptive rank allocation that tailors calibration efforts per transformer block, maintaining caching effectiveness under variable guidance. Experiments demonstrate that OUSAC significantly outperforms state-of-the-art acceleration methods, achieving 53% computational savings with 15% quality improvement on DiT-XL/2 (ImageNet 512x512), 60% savings with 16.1% improvement on PixArt-alpha (MSCOCO), and 5x speedup on FLUX while improving CLIP Score over the 50-step baseline.
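To make the cost argument in the abstract concrete, the following is a minimal sketch of classifier-free guidance with a per-step guidance schedule, where `None` marks a step that skips the unconditional pass. It uses the standard CFG combination rule; the function names, the toy model, and the schedule values are illustrative assumptions, and it does not reproduce the evolutionary search or the caching stage described in the paper.

```python
from typing import Callable, List, Optional, Sequence

Vector = List[float]
# Hypothetical model signature: (latent, timestep, condition or None) -> noise prediction
Model = Callable[[Vector, int, Optional[str]], Vector]


def cfg_combine(eps_uncond: Vector, eps_cond: Vector, scale: float) -> Vector:
    """Standard CFG rule: eps = eps_uncond + scale * (eps_cond - eps_uncond)."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def guided_prediction(model: Model, x: Vector, t: int, cond: str,
                      scale: Optional[float]) -> Vector:
    """Run one denoising prediction; scale=None skips the unconditional pass."""
    eps_cond = model(x, t, cond)
    if scale is None:
        # CFG skipped at this step: only the conditional pass is paid for.
        return eps_cond
    eps_uncond = model(x, t, None)
    return cfg_combine(eps_uncond, eps_cond, scale)


def count_forward_passes(schedule: Sequence[Optional[float]]) -> int:
    """One conditional pass per step, plus one unconditional pass where CFG is active."""
    return sum(1 if s is None else 2 for s in schedule)


def toy_model(x: Vector, t: int, cond: Optional[str]) -> Vector:
    """Stand-in for a diffusion transformer (assumption, for illustration only)."""
    factor = 1.0 if cond is None else 2.0
    return [factor * v for v in x]


# Hypothetical schedules: constant CFG versus a sparse, variable-scale schedule.
constant_schedule = [4.0, 4.0, 4.0, 4.0]
sparse_schedule = [4.0, None, None, 6.0]
```

With these toy schedules, `count_forward_passes(constant_schedule)` is 8 while `count_forward_passes(sparse_schedule)` is 6, which illustrates why skipping CFG at some steps and adjusting the scale at others reduces compute.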

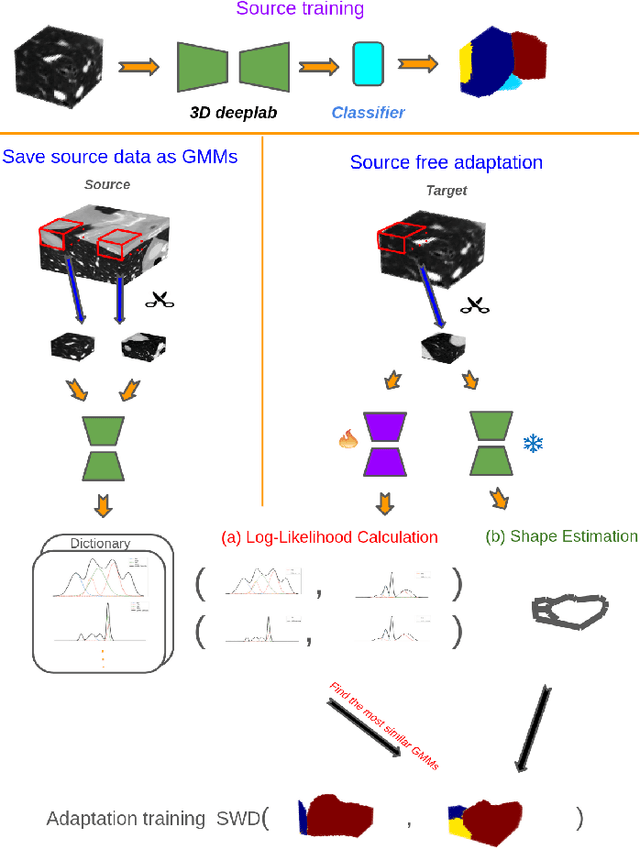

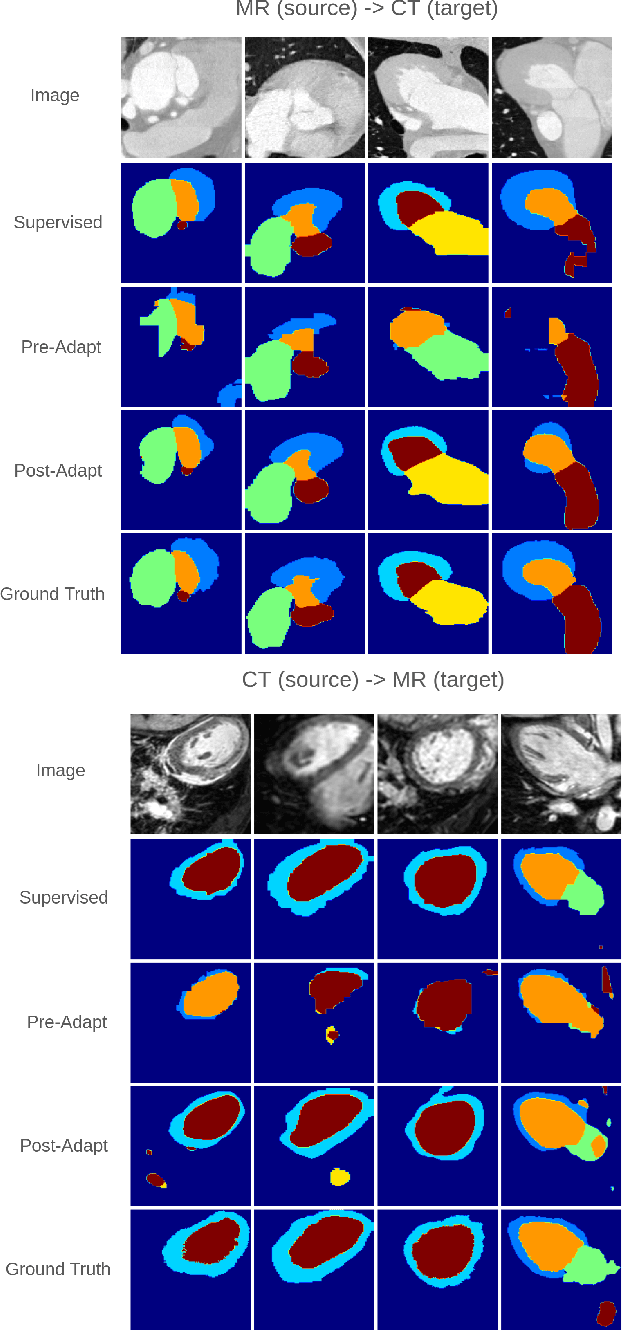

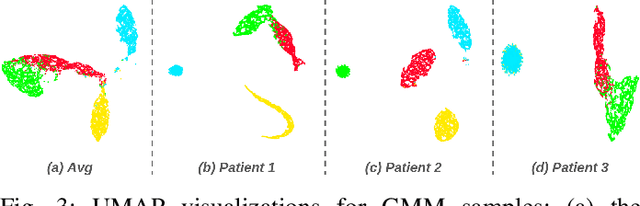

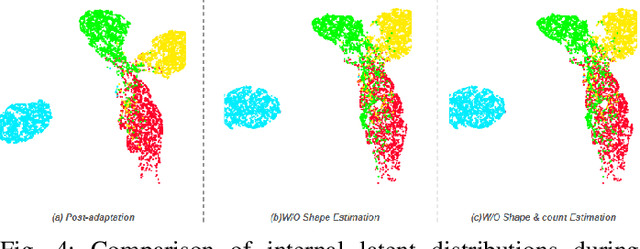

Cross-Domain Distribution Alignment for Segmentation of Private Unannotated 3D Medical Images

Oct 11, 2024

Abstract: Manual annotation of 3D medical images for segmentation tasks is tedious and time-consuming. Moreover, data privacy limits the applicability of crowdsourcing for data annotation in medical domains. As a result, training deep neural networks for medical image segmentation can be challenging. We introduce a new source-free Unsupervised Domain Adaptation (UDA) method to address this problem. Our idea is based on estimating the internally learned distribution of a relevant source domain by a base model and then generating pseudo-labels that are used to enhance model refinement through self-training. We demonstrate that our approach leads to state-of-the-art (SOTA) performance on a real-world 3D medical dataset.

Explainable Artificial Intelligence Architecture for Melanoma Diagnosis Using Indicator Localization and Self-Supervised Learning

Mar 26, 2023

Abstract: Melanoma is a prevalent, lethal type of cancer that is treatable if diagnosed at early stages of development. Skin lesions are a typical indicator for diagnosing melanoma, but they often lead to delayed diagnosis due to the high similarity of cancerous and benign lesions at early stages of melanoma. Deep learning (DL) can be used to classify skin lesion pictures with high accuracy, but clinical adoption of deep learning faces a significant challenge: the decision processes of deep learning models are often uninterpretable, which makes them black boxes that are challenging to trust. We develop an explainable deep learning architecture for melanoma diagnosis that generates clinically interpretable visual explanations for its decisions. Our experiments demonstrate that our proposed architecture matches clinical explanations significantly better than existing architectures.
