Cross-Domain Distribution Alignment for Segmentation of Private Unannotated 3D Medical Images


Oct 11, 2024

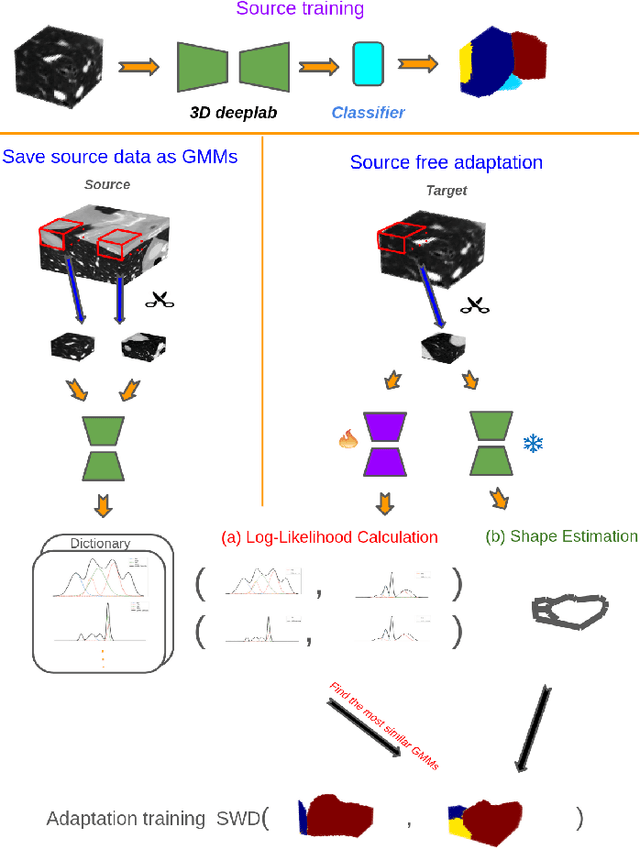

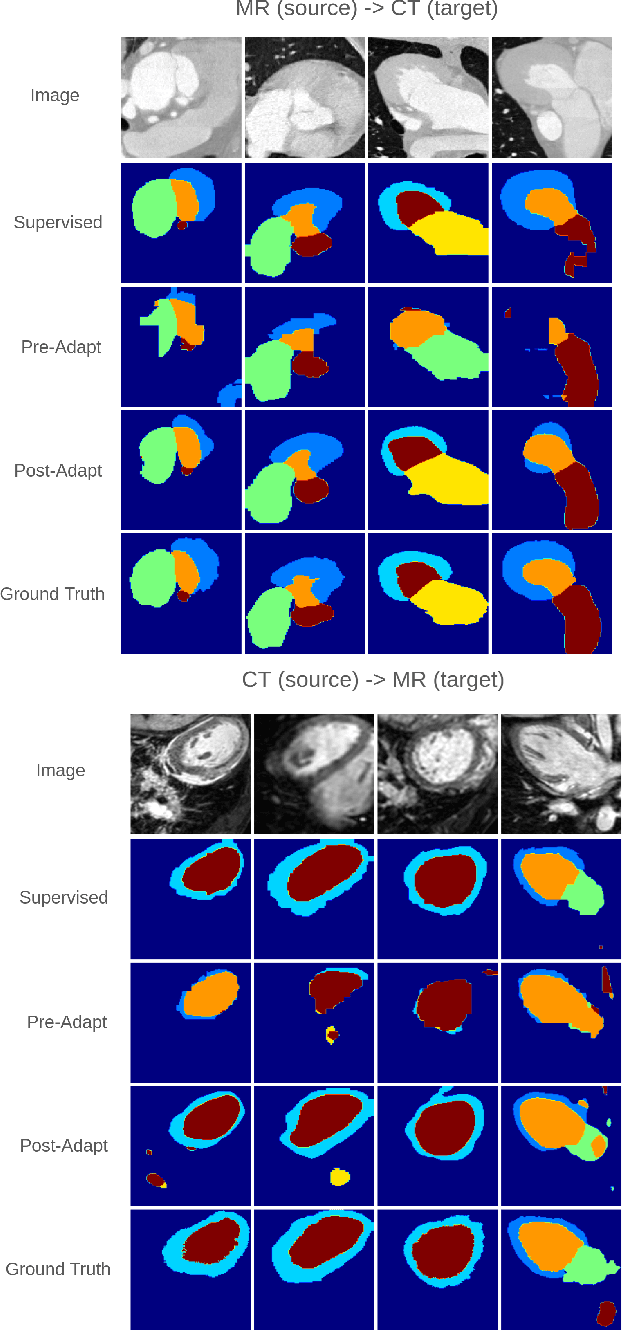

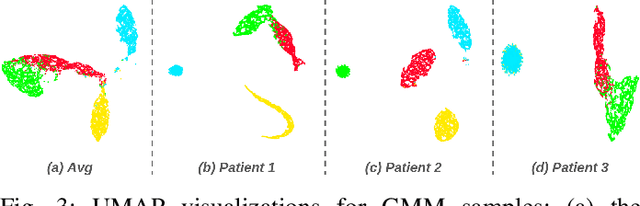

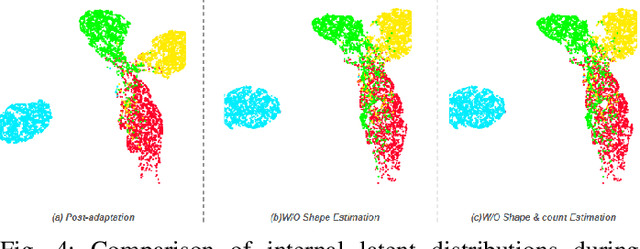

Manual annotation of 3D medical images for segmentation tasks is tedious and time-consuming. Moreover, data privacy constraints limit the use of crowdsourcing for data annotation in medical domains. As a result, training deep neural networks for medical image segmentation can be challenging. We introduce a new source-free Unsupervised Domain Adaptation (UDA) method to address this problem. Our idea is to estimate the distribution that a base model learns internally for a relevant source domain, and then to use that estimate to generate pseudo-labels for the unannotated target data, which in turn refine the model through self-training. We demonstrate that our approach leads to state-of-the-art (SOTA) performance on a real-world 3D medical dataset.
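The two steps named in the abstract (estimate the internally learned source distribution, then pseudo-label the target data) can be illustrated with a minimal sketch. The abstract does not say how the distribution is modeled, so everything specific here is an assumption made for illustration: the per-class diagonal Gaussians, the equal class priors, the confidence threshold, and the function names `fit_class_gaussians` and `pseudo_label` are all hypothetical and are not taken from the paper.

```python
import numpy as np


def fit_class_gaussians(features, labels, num_classes, eps=1e-6):
    """Summarize source-domain embeddings with one diagonal Gaussian per class.

    Assumption: every class appears at least once in `labels`.
    Only these statistics are kept, so the source data itself can be discarded.
    """
    dim = features.shape[1]
    means = np.zeros((num_classes, dim))
    variances = np.ones((num_classes, dim))
    for c in range(num_classes):
        class_feats = features[labels == c]
        means[c] = class_feats.mean(axis=0)
        variances[c] = class_feats.var(axis=0) + eps  # eps avoids zero variance
    return means, variances


def pseudo_label(target_features, means, variances, confidence=0.9):
    """Assign each target embedding its most likely class, or -1 if unsure."""
    # diff has shape (num_samples, num_classes, dim)
    diff = target_features[:, None, :] - means[None, :, :]
    log_lik = -0.5 * np.sum(
        diff ** 2 / variances + np.log(2.0 * np.pi * variances), axis=2
    )
    # Posterior over classes under equal priors (an assumption of this sketch).
    log_lik = log_lik - log_lik.max(axis=1, keepdims=True)
    posterior = np.exp(log_lik)
    posterior /= posterior.sum(axis=1, keepdims=True)
    labels = posterior.argmax(axis=1)
    labels[posterior.max(axis=1) < confidence] = -1  # drop low-confidence labels
    return labels


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-in for embeddings produced by a base model.
    source = np.vstack([rng.normal(0.0, 1.0, (200, 4)),
                        rng.normal(5.0, 1.0, (200, 4))])
    source_labels = np.repeat([0, 1], 200)
    means, variances = fit_class_gaussians(source, source_labels, num_classes=2)

    target = np.array([[0.0, 0.0, 0.0, 0.0], [5.0, 5.0, 5.0, 5.0]])
    print(pseudo_label(target, means, variances))
```

In a self-training loop, the target samples that receive a label other than -1 would serve as supervision for further fine-tuning of the model; how the paper actually performs that refinement is not described in the abstract.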
