Rui Graca

Towards a physically realistic computationally efficient DVS pixel model

May 12, 2025

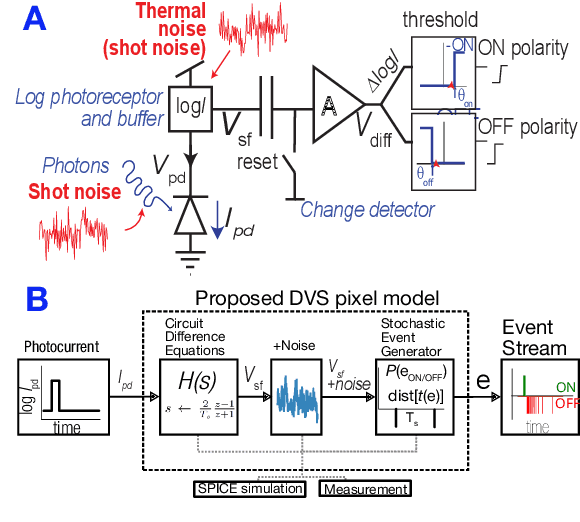

Abstract: Dynamic Vision Sensor (DVS) event camera models are important tools for predicting camera response, optimizing biases, and generating realistic simulated datasets. Existing DVS models have been useful, but have not demonstrated high realism for challenging HDR scenes combined with adequate computational efficiency for array-level scene simulation. This paper reports progress towards a physically realistic and computationally efficient DVS model based on large-signal differential equations derived from circuit analysis, with parameters fitted from pixel measurements and circuit simulation. These are combined with an efficient stochastic event generation mechanism based on first-passage-time theory, allowing accurate noise generation with timesteps more than 1000x longer than those of previous methods.

SciDVS: A Scientific Event Camera with 1.7% Temporal Contrast Sensitivity at 0.7 lux

Sep 15, 2024

Abstract: This paper reports a Dynamic Vision Sensor (DVS) event camera that is 6x more sensitive at 14x lower illumination than existing commercial and prototype cameras. Event cameras output a sparse stream of brightness change events. Their high dynamic range (HDR), quick response, and high temporal resolution provide key advantages for scientific applications that involve low lighting conditions and sparse visual events. However, current DVS are hindered by low sensitivity, resulting from shot noise and pixel-to-pixel mismatch. Commercial DVS have a minimum brightness change threshold of >10%. Sensitive prototypes achieved as low as 1%, but required kilo-lux illumination. Our SciDVS prototype fabricated in a 180nm CMOS image sensor process achieves 1.7% sensitivity at chip illumination of 0.7 lx and 18 Hz bandwidth. Novel features of SciDVS are (1) an auto-centering in-pixel preamplifier providing intrascene HDR and increased sensitivity, (2) improved control of bandwidth to limit shot noise, and (3) optional pixel binning, allowing the user to trade spatial resolution for sensitivity.

Re-Interpreting the Step-Response Probability Curve to Extract Fundamental Physical Parameters of Event-based Vision Sensors

Apr 11, 2024

Abstract: Biologically inspired event-based vision sensors (EVS) are growing in popularity due to performance benefits including ultra-low power consumption, high dynamic range, data sparsity, and fast temporal response. They efficiently encode dynamic information from a visual scene through pixels that respond autonomously and asynchronously when the per-pixel illumination level changes by a user-selectable contrast threshold ratio, $\theta$. Due to their unique sensing paradigm and complex analog pixel circuitry, characterizing EVS is non-trivial. The step-response probability curve (S-curve) is a key measurement technique that has emerged as the standard for measuring $\theta$. In this work, we detail the method for generating accurate S-curves by applying an appropriate stimulus and sensor configuration to decouple 2nd-order effects from the parameter being studied. We use an EVS pixel simulation to demonstrate how noise and other physical constraints can lead to error in the measurement, and develop two techniques that are robust enough to obtain accurate estimates. We then apply best practices derived from our simulation to generate S-curves for the latest generation Sony IMX636 and interpret the resulting family of curves to correct the apparent anomalous result of previous reports suggesting that $\theta$ changes with illumination. Further, we demonstrate that with correct interpretation, fundamental physical parameters such as dark current and RMS noise can be accurately inferred from a collection of S-curves, leading to more accurate parameterization for high-fidelity EVS simulations.

Exploiting Alternating DVS Shot Noise Event Pair Statistics to Reduce Background Activity

Apr 12, 2023

Abstract: Dynamic Vision Sensors (DVS) record "events" corresponding to pixel-level brightness changes, resulting in data-efficient representation of a dynamic visual scene. As DVS expand into increasingly diverse applications, non-ideal behaviors in their output under extreme sensing conditions are important to consider. Under low illumination (below ~10 lux) their output begins to be dominated by shot noise events (SNEs) which increase the data output and obscure true signal. SNE rates can be controlled to some degree by tuning circuit parameters to reduce sensitivity or temporal response bandwidth at the cost of signal loss. Alternatively, an improved understanding of SNE statistics can be leveraged to develop novel techniques for minimizing uninformative sensor output. We first explain a fundamental observation about sequential pairing of opposite polarity SNEs based on pixel circuit logic and validate our theory using DVS recordings and simulations. Finally, we derive a practical result from this new understanding and demonstrate two novel biasing techniques to reduce SNEs by 50% and 80% respectively while still retaining sensitivity and/or temporal resolution.

Utility and Feasibility of a Center Surround Event Camera

Feb 26, 2022

Abstract: Standard dynamic vision sensor (DVS) event cameras output a stream of spatially-independent log-intensity brightness change events so they cannot suppress spatial redundancy. Nearly all biological retinas use an antagonistic center-surround organization. This paper proposes a practical method of implementing a compact, energy-efficient Center Surround DVS (CSDVS) with a surround smoothing network that uses compact polysilicon resistors for lateral resistance. The paper includes behavioral simulation results for the CSDVS (see sites.google.com/view/csdvs/home). The CSDVS would significantly reduce events caused by low spatial frequencies, but amplify the informative high frequency spatiotemporal events.

Feedback control of event cameras

May 02, 2021

Abstract: Dynamic vision sensor event cameras produce a variable data rate stream of brightness change events. Event production at the pixel level is controlled by threshold, bandwidth, and refractory period bias current parameter settings. Biases must be adjusted to match application requirements and the optimal settings depend on many factors. As a first step towards automatic control of biases, this paper proposes fixed-step feedback controllers that use measurements of event rate and noise. The controllers regulate the event rate within an acceptable range using threshold and refractory period control, and regulate noise using bandwidth control. Experiments demonstrate model validity and feedback control.
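
The abstract does not give the controller details, so the following is only a minimal sketch of what a fixed-step controller that keeps event rate inside an acceptable band could look like. The class `FixedStepController`, its parameters, and the toy camera model `toy_event_rate` (event rate inversely proportional to threshold) are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class FixedStepController:
    """Hypothetical fixed-step controller for a DVS event threshold."""
    low: float      # lower bound of acceptable event rate (events/s)
    high: float     # upper bound of acceptable event rate (events/s)
    step: float     # fixed amount by which the threshold is nudged
    thr_min: float  # smallest allowed threshold
    thr_max: float  # largest allowed threshold

    def update(self, threshold: float, event_rate: float) -> float:
        """Return the next threshold given the measured event rate."""
        if event_rate > self.high:
            threshold += self.step   # too many events: reduce sensitivity
        elif event_rate < self.low:
            threshold -= self.step   # too few events: increase sensitivity
        # Inside the band the threshold is left unchanged (dead band).
        return min(self.thr_max, max(self.thr_min, threshold))


def toy_event_rate(threshold: float) -> float:
    """Assumed toy model: event rate falls as the threshold rises."""
    return 1e5 / threshold


controller = FixedStepController(low=2e5, high=4.5e5, step=0.05,
                                 thr_min=0.1, thr_max=1.0)
threshold = 0.1
for _ in range(20):
    threshold = controller.update(threshold, toy_event_rate(threshold))
final_rate = toy_event_rate(threshold)
```

The dead band between `low` and `high` keeps the controller from adjusting the bias on every measurement once the rate is acceptable. A fixed step keeps each adjustment small and bounded, at the cost of slower convergence than a proportional update.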
